We’ve covered the entire RTX 30 series of cards, and this time around we’ve gotten our hands on the GeForce RTX 3070 Ti. There’s no doubt that the RTX 3090 is the most powerful consumer graphics card on the planet, but it is shockingly hard to find (along with most computer-related parts these days). The same could be said for the RTX 3080 and 3080 Ti. Well, I’ve got good news — if you can’t get your hands on either of the 3080 cards, this RTX 3070 Ti might just be what you need.

If you’ve read our other reviews or watched the unboxing videos, you know what to expect here. Retaining the same redesigned shell, the 3070 Ti measures 9.5” in length and 4.4” in width, taking up two slots, versus the 3080 which measures 11.2” and 4.4”, respectively. It uses the same proprietary connector, but requires only a single 8-pin connector to the power supply, consuming just 220W versus the 3080’s power-hungry 320W. As such, you’ll only need a 650W PSU in your system to keep this card humming along. The 3070 Ti uses the same flow-through cooling solution that we saw in the 3080, 3080 Ti, and 3090, but we’ll tackle that in a bit. We’ll need to get a little deeper under the hood to see the rest of the differences.

Just like in our 3080 review, I want to cover some jargon to ensure you have a clear picture of the hardware under the hood in the GeForce 3070 Ti. There is one major architecture change from the 3070 — the 3070 Ti shares the same GDDR6X memory architecture as the RTX 3080 and 3080 Ti. This is a rather large change, meaning it has more in common with its bigger brother than its smaller siblings. In the 3080 we have 10GB of GDDR6X, and the 3070 Ti is equipped with 8GB of the same GDDR6X memory. GDDR6X uses PAM4 signaling, which drives four voltage levels per symbol to carry two bits per transfer instead of the single bit that conventional two-level signaling carries. GDDR6 is what you’ll find in the 2080 Ti, and what you’ll find on every other RTX 30 series card below the 3070 Ti. To wit, the RTX 3080 has a memory data rate of 19 Gbps to the 3070’s 14 Gbps. Surprisingly, the RTX 3070 Ti manages the same memory data rate as the RTX 3080 — 19 Gbps.
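To put those per-pin figures in context, here is a rough sketch of how peak memory bandwidth falls out of them. The 19 Gbps and 14 Gbps data rates come from the paragraph above; the memory bus widths are my assumption, taken from public spec sheets rather than anything measured in this review, so treat the output as a back-of-the-envelope estimate.

```python
# Peak memory bandwidth (GB/s) = per-pin data rate (Gbps) * bus width (bits) / 8.
# ASSUMPTION: the bus widths below come from public spec sheets, not this review.
CARDS = {
    "RTX 3070":    {"gbps_per_pin": 14, "bus_width_bits": 256},
    "RTX 3070 Ti": {"gbps_per_pin": 19, "bus_width_bits": 256},
    "RTX 3080":    {"gbps_per_pin": 19, "bus_width_bits": 320},
}


def peak_bandwidth_gb_s(gbps_per_pin, bus_width_bits):
    """Theoretical peak bandwidth in gigabytes per second."""
    return gbps_per_pin * bus_width_bits / 8


bandwidth = {name: peak_bandwidth_gb_s(**spec) for name, spec in CARDS.items()}

for name, gb_s in bandwidth.items():
    print(f"{name}: {gb_s:.0f} GB/s")
```

Under those assumptions the 3070 Ti matches the 3080 on per-pin speed but still lands between the 3070 and the 3080 on total bandwidth, because the 3080 has a wider bus.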

There is one hitch with the 3070 Ti — the amount of memory on the card. The 3080 felt light at 10GB, but the 3070 Ti cuts that back to 8GB. Stacking up features on a game can use quite a bit of RAM, caching the level in VRAM. Higher refresh rates combined with higher resolutions mean pushing more pixels per second, with 4K literally pushing into the billions. The higher the resolution, the more VRAM you’ll need. That’s not to say that running out of memory is going to cause you a problem, because that’s not how any of this works, but it could mean another trip back to the storage through the backplane, and that can add a little bit of extra cycle time. It’s also worth noting that memory reservation and memory allocation are two different things. Just because a game says it’s using 9GB of VRAM doesn’t mean it will actually do so — just that it has the potential to do so. Thankfully, the benchmarks suggest we aren’t hitting that point yet, but it’s worth noting if you intend to play at higher refresh and resolution.
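If the "billions" claim sounds like hyperbole, the arithmetic is easy to check. This is just a quick sketch; the resolutions and refresh rates are common examples I picked for illustration, not settings taken from the benchmark runs.

```python
# Pixels pushed per second = width * height * refresh rate.
# ASSUMPTION: these resolutions and refresh rates are illustrative picks.
RESOLUTIONS = {"1440p": (2560, 1440), "4K": (3840, 2160)}
REFRESH_RATES_HZ = (60, 144)


def pixels_per_second(width, height, refresh_hz):
    """Raw pixel count the card must deliver every second."""
    return width * height * refresh_hz


for name, (width, height) in RESOLUTIONS.items():
    for hz in REFRESH_RATES_HZ:
        total = pixels_per_second(width, height, hz)
        print(f"{name} @ {hz}Hz: {total:,} pixels/s")
```

At 4K and 144Hz the total comes to roughly 1.19 billion pixels every second, more than double what 1440p demands at the same refresh rate.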

The largest difference between the 3070 and 3070 Ti beyond the memory architecture is the number of CUDA cores. The 3070 has 5888 cores, whereas the 3070 Ti ships with 6144. Additionally, the 3070 has 184 Tensor cores versus the 3070 Ti’s 192. That said, let’s dig into the rest of the tech before we draw any conclusions.

What is an RT Core?

Arguably one of the most misunderstood aspects of the RTX series is the RT core. This core is a dedicated pipeline to the streaming multiprocessor (SM) where light rays and triangle intersections are calculated. Put simply, it’s the math unit that makes realtime lighting work and look its best. Multiple SMs and RT cores work together to interleave the instructions, processing them concurrently, allowing the processing of a multitude of light sources intersecting with the objects in the environment in multiple ways, all at the same time. In practical terms, it means a team of graphical artists and designers don’t have to “hand-place” lighting and shadows, and then adjust the scene based on light intersection and intensity — with RTX, they can simply place the light source in the scene and let the card do the work. I’m oversimplifying it, for sure, but that’s the general idea.

The Turing architecture cards (the 20X0 series) were the first implementations of this dedicated RT core technology. The 2080 Ti had 72 RT cores, delivering 29.9 Teraflops of throughput, whereas the RTX 3080 has 68 2nd-gen RT cores with 2x the throughput of the Turing-based cards, delivering 58 Teraflops of RTX power. The 3070 sports 46 RT cores, with only a small nudge for the 3070 Ti at 48 cores.

What is a Tensor Core?

Here’s another example of “but why do I need it?” within GPU architecture — the Tensor Core. This relatively new technology from NVIDIA had seen wider use in high performance supercomputing or data centers before finally arriving on consumer-focused cards in the later and more powerful 20X0 series cards. Now, with the RTX 30X0 series of cards we have the third generation of these processors. The 2080 Ti had 240 second-gen cores, the 3080 has 272 third-gen Tensor cores, the 3070 comes with 184 of them, and the 3070 Ti lands square in the middle with 192. Don’t fall into the trap that more is better though — the third generation of Tensor cores is double the speed of its predecessor. That’s great and all — but what do they do?

Tensor cores are used for AI-driven learning, and we see this more directly applied to gaming via DLSS, or Deep Learning Super Sampling. More than marketing buzzwords, DLSS can take a few frames, analyze them, and then generate a “perfect frame” by interpreting the results using AI, with help from supercomputers back at NVIDIA HQ. The second pass through the process uses what it learned about aliasing in the first pass and then “fills in” what it believes to be more accurate pixels, resulting in a cleaner image that can be rendered even faster. Amazingly, the results can actually be cleaner than the original image, especially at lower resolutions, and having less to process means more frames can be rendered using the power saved. It’s literally free frames. DLSS 3.0 is still swirling in the wind, but very soon we may see this applied more broadly than it is today. We’ll have to keep our eyes peeled for that one, but when it does release, these Tensor cores are the components to do the work. That’s all fancy, but wouldn’t you rather see it in action? Here’s a quick snippet from Control that does exactly that.

DLSS 2.0 was introduced in March of 2020, and it took the principles of DLSS and set out to resolve the complaints users and developers had, while improving the speed. To that end, they reengineered the Tensor core pipeline, effectively doubling the speed while still maintaining the image quality of the original, or even sharpening it to the point where it looks better than the source! For the user community, NVIDIA exposed the controls to DLSS, providing three modes to choose from — Performance for maximum framerate, Balanced, and Quality, which looks to deliver the best-quality final image. Developers saw the biggest boon with DLSS 2.0 as they were given a universal AI training network. Instead of having to train each game and each frame, DLSS 2.0 uses a library of non-game-specific parameters to improve graphics and performance, meaning that the technology could be applied to any game should the developer choose to do so. Game development cycles being what they are, and with the tech only hitting the street earlier this year, it’s likely we’ll see more use of DLSS 2.0 during the holiday 2021 blitz, and even more after the turn of the year.

Frametime vs. Framerate:

It’s important to understand that these two terms are not in any way interchangeable. Framerate tells you how many frames are rendered each second, and Frametime tells you how long it took to render each frame. While there is a great deal of focus on framerate and the resolution at which it’s rendered, frametime should likely receive equal if not greater attention. When frames take too long to render they can be dropped or desync, wreaking all sorts of havoc including stuttering. If frame 1 takes 17ms, but frame 2 takes 240ms, that’s going to make for a jittery result. Realize that both are important and don’t become myopically focused on just framerate as it only tells half the story – even if a device is capable of delivering 144fps, if it does so in an uneven fashion, you’ll see a choppy output.
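To make the distinction concrete, here is a small sketch with two made-up frametime captures. The numbers are hypothetical and chosen so that both runs report the same average framerate, which is exactly why the average alone can mislead you.

```python
from statistics import mean


def summarize(frametimes_ms):
    """Summarize a capture of per-frame render times, given in milliseconds."""
    avg_ms = mean(frametimes_ms)
    worst_ms = max(frametimes_ms)
    return {
        "avg_fps": 1000 / avg_ms,           # what a framerate counter reports
        "avg_frametime_ms": avg_ms,
        "worst_frametime_ms": worst_ms,     # the hitch you actually feel
        "worst_instant_fps": 1000 / worst_ms,
    }


# HYPOTHETICAL captures: 100 frames each, identical total render time (1000 ms).
smooth = [10.0] * 100              # every frame takes 10 ms
spiky = [7.0] * 99 + [307.0]       # 99 quick frames and one long stall

for label, capture in (("smooth", smooth), ("spiky", spiky)):
    stats = summarize(capture)
    print(
        f"{label}: {stats['avg_fps']:.0f} fps average, "
        f"worst frame {stats['worst_frametime_ms']:.0f} ms"
    )
```

Both captures average 100fps, but the second one hides a stall of roughly a third of a second. That is the stutter a framerate counter will never show you.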

What is RTX IO?

Right now, whether it’s on PC or consoles, there is a flow of data that is largely inefficient and our games suffer for it. Storage platforms deliver the goods across the PCIe bus, to the CPU and into system memory where they are decompressed. Those decompressed textures are then passed back across the PCIe bus to the GPU, which then hands them off to the GPU memory. Once that’s done, the result is passed to your eyeballs via your monitor. Microsoft has a new storage API called DirectStorage that allows NVMe SSDs to bypass this process. Combined with NVIDIA RTX IO, the compressed textures instead go from the high-speed storage across the PCIe bus and directly to the GPU. Assets are then decompressed by the far-faster GPU and delivered immediately to your waiting monitor. Cutting out this back-and-forth business frees up power that could be used elsewhere — NVIDIA estimates up to a 100X improvement. When developers talk about being able to reduce install sizes, that comes directly from this technology. So, what’s the catch?

NVIDIA RTX IO is an absolutely phenomenal bit of technology, but it’s so new that nobody has it ready for primetime. As a result, I can only tell you that it’s coming and talk about how awesome this concept and technology is; I can’t test it for you. That said, as storage platforms shift towards incredibly high speed drives that unfortunately have very low storage capacity, you can bet we’ll see this come to life and quickly. Stay tuned on this — it could be a huge win for gamers everywhere.

NVIDIA Broadcast:

There is an added bonus that comes with the added power of the Tensor cores. While the AI cores do handle DLSS, they also deliver some additional smoothing in an app NVIDIA is calling “NVIDIA Broadcast”. This app can improve the output of your microphone, speakers, and camera by applying AI learning in much the same way that we see it in the examples above. It can remove static or background noise from your audio, clean up artifacting when you are streaming with a green screen (or without one, as Broadcast can simply apply a virtual background), filter distracting noise out of another person’s audio, and even perform a bit of head-tracking to keep you in frame if you are the type that moves around a lot. Not just a gaming-focused application, this works for any video conferencing, so feel free to Zoom away while we are all stuck inside.

Inside the application are three tabs – microphone, speakers, and camera. Microphone is supposed to let you remove background noise from your audio. I use a mechanical keyboard and I’ve been told more than once that it’s loud. Well, even with this enabled, it’s still loud — my Seiren Elite doesn’t miss a single sound.

The second tab is speakers, which is supposed to reduce the amount of noise coming from other sources. I found this to be fairly effective, removing obnoxious typing noises from others — hopefully I can do the same for them one day.

The final tab is where the magic happens. Under the Camera tab you can blur your background, replace it with a video or image, simply delete the background, or “auto-frame”. Call software like Zoom and Skype can do this as well, but even in this early stage I can say they don’t do it this well. Better still, getting it pushed into OBS was as simple as selecting a device, selecting “Camera NVIDIA Broadcast” and it was done. It doesn’t get any easier than this.

The software has fully launched at this point, delivering a lot of value for the low price of free! In practice I saw a marginal (~2%) reduction in framerate when recording with OBS — a pittance when games like Overwatch and Death Stranding are punching above 150fps, and it works with fairly terrible lighting like I have in my office. A properly lit room will look miles better.

Gaming Benchmarks:

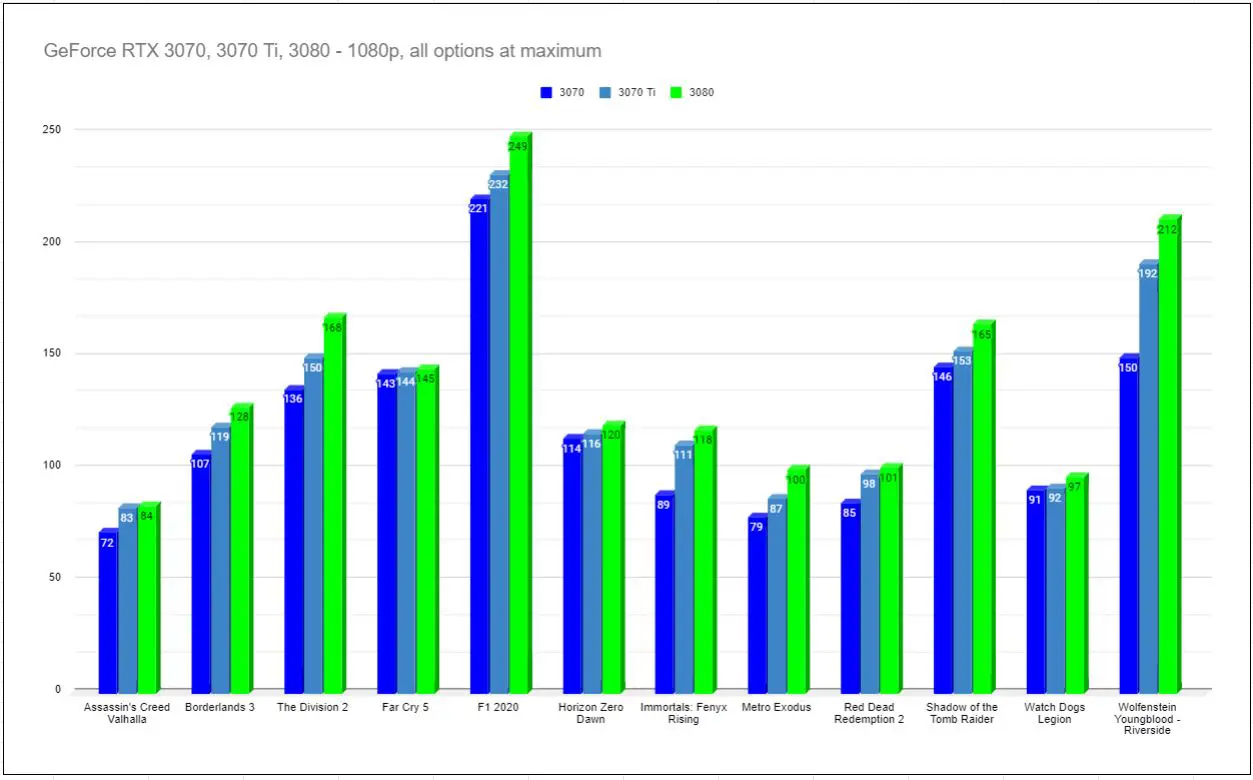

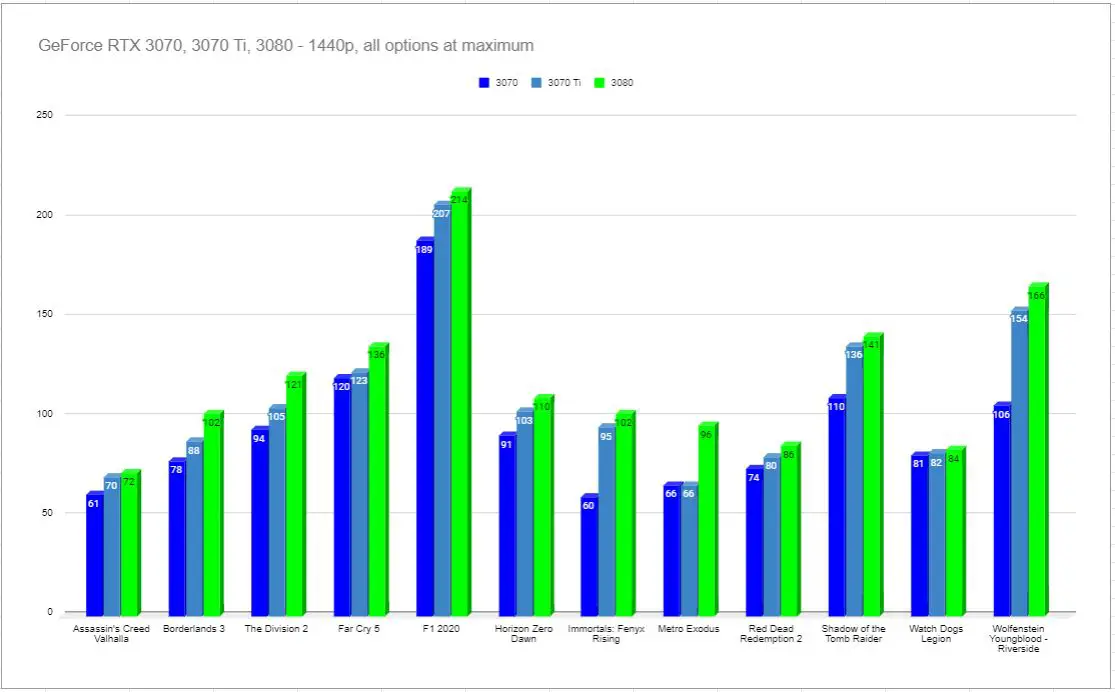

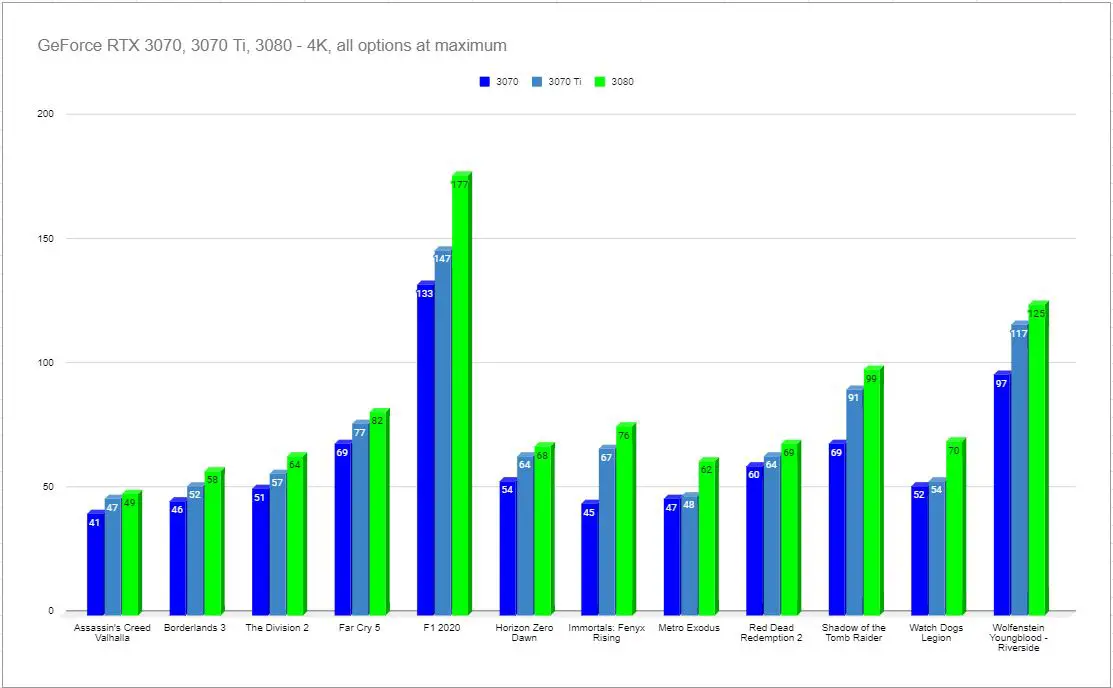

As we stare down the barrel of E3 2021 this week, it’s likely we’ll see a whole new batch of benchmark-worthy goodies on the horizon. For now, we have a stable of older, newer, and bleeding edge games that showcase different challenges for PC gamers. We have a few that are CPU bound, others that struggle with higher resolutions, and still more that take full advantage of RTX technologies like ray traced lighting, AI, DLSS, and more. All of that said, realize that I’m running pre-release drivers. These numbers will likely improve and spread out as game releases and NVIDIA’s drivers mature.

Analysis:

As we expected, the RTX 3070 Ti falls between the 3070 and 3080, but what wasn’t expected is just how close it leans towards the 3080 side. Most often, we see numbers that are close to or almost matching the power and performance of the RTX 3080. I think this speaks volumes for the improvements brought by GDDR6X over the previous memory architecture. We also see games that take advantage of new lighting like RTX, instead of performing complex calculations for lighting and shadows, achieving a sizable boost over their contemporaries lacking that tech. Games that are CPU bound like Assassin’s Creed Odyssey and Immortals: Fenyx Rising are starting to see improvements with patches and time, but throwing more hardware at them is certainly helping. The addition of tech like DLSS would make for a massive improvement at nearly zero cost, so let’s hope that we see that folded directly into the games on the horizon.

Much like its non-Ti brother, the RTX 3070 Ti lives in the space between 1440p and 4K. Obviously every game has its own specs to juggle, but the 3070 Ti is able to deliver 4K at or near 60fps. If you are like me, though, you’d prefer to run 1440p at a higher rate instead. All of the benchmarks were run at the highest possible settings, including the notoriously rough Red Dead Redemption 2. Despite throwing the kitchen sink at this card, it’s still able to handle Rockstar’s flagship title at 4K and 60fps.

I am absolutely stunned at the pricing that NVIDIA has attached to the RTX 3070 Ti. The card is delivering and in some cases exceeding the power of the RTX 3080, and the green team has slapped a $599 sticker on the box — a $100 premium over the 3070, but with a whole lot more oomph under the hood.

It’s important to note that many of the games on our current benchmark list are CPU-bound. The interchange between the CPU, memory, GPU, and storage medium creates several bottlenecks that can interfere with your framerate. If your graphics card can ingest gigs of information every second, but your crummy mechanical hard drive struggles to hit 133 MB/s, you’ve got a problem. If you are using high-speed Gen3 or Gen4 M.2 SSD storage, that drive can hit 3 or even 4 GB/s, but if your older processor isn’t capable of processing it, it’s not going to be able to fill your GPU either. Supposing you’ve got a shiny new 11th Gen Intel CPU, you may be surprised that it also may not be fast enough for what’s under the hood of this RTX 3070 Ti, and we see some of that phenomenon in the benchmarks above. NVIDIA is once again ahead of the power curve, and processors, memory, and even the PCIe bus need to catch up before all this power gets fully utilized, much less taxed.
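Here is a rough sketch of what that storage gap looks like in practice. The drive speeds are the figures quoted above; the 8 GB payload is a hypothetical number I chose because it matches the card’s VRAM, and the math assumes ideal sequential reads with no decompression or CPU overhead.

```python
# Best-case time to stream a payload from storage at a sustained read speed.
# ASSUMPTION: ideal sequential reads; real loads add decompression and CPU work.
DRIVES_MB_S = {
    "mechanical HDD": 133,
    "Gen3 NVMe SSD": 3000,
    "Gen4 NVMe SSD": 4000,
}
PAYLOAD_GB = 8  # HYPOTHETICAL: enough data to fill the 3070 Ti's 8GB of VRAM


def seconds_to_read(size_gb, throughput_mb_s):
    """Seconds needed to read size_gb at throughput_mb_s (1 GB = 1000 MB)."""
    return size_gb * 1000 / throughput_mb_s


for drive, speed in DRIVES_MB_S.items():
    print(f"{drive}: {seconds_to_read(PAYLOAD_GB, speed):.1f} s")
```

Under these assumptions the mechanical drive needs about a minute to move what the NVMe drives deliver in two to three seconds, and that is before the CPU gets involved.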

Cooling and Noise:

It’s one thing to deliver blisteringly fast frame rates and eye-popping resolutions, but if you do it while also blowing out my eardrums with a high pitched whine as your Harrier Jet-esque fans spin up to cool, we’ve got a problem. Thankfully NVIDIA realized this, as well as the need to cool a staggering 28 billion transistors (the 2080 Ti had 18.9 billion), and they redesigned the 30X0 series of cards to match. Like the 3080 Ti, the 3070 Ti has a solid aluminum body construction with a new airflow system that displaces heat more efficiently, and somehow does it while being 10dB quieter than its predecessor. I’d normally point to that being a marketing claim, but I measured it myself.

Normal airflow through a case with a standard setup starts with air intake at the front, pushing it over the hard drives, and hopefully out the back. I can tell you that the case I picked up from Coolermaster was incorrectly assembled on arrival with the 200mm fan on top blowing air back into the case, so it’s always best to check the arrows on the side to make sure your case follows this path. The 2080 Ti’s design was that of a solid board that ran the length of the card with fans on the bottom pushing heat away. Unfortunately this requires that it circulates back into the path of the air flow, having to billow back up and then travel out the top and rear case fans.

The RTX 30X0 card’s airflow system is a thing of beauty. Since the card has been shrunk effectively in half thanks to the 8nm manufacturing process, this left a lot of real estate for a larger cooling block and fins, as well as a fan system to match. The fan on the top of the card (once it is mounted in the case) instead draws air up from the bottom, going through the hybrid vapor chamber heat pipe (as there is no longer a long circuit board to obstruct the airflow), and pushes it directly into the path of the normal case air path. The second fan, located on the bottom of the card and closest to the mounting bracket at the back of the case, draws air in as well, but instead of passing it through the card, it pushes the excess heat out of the rear of the card through a dedicated heat pipe vent. While we are using the 3080 to illustrate, and the shell of the 3070 Ti is slightly smaller, the concept operates in precisely the same way:

You can see the results while running benchmarks. The RTX 3070 Ti, just like the 3080 Ti and other 30-series cards, never pushed above 79 C, no matter how hard I pushed it or at what resolution I ran it. In fact, it stayed in the low to mid 70s nearly all the time, and at an amazing 35 C when idle. More than once I’ve peeked into my case and seen that the fans for the card aren’t even spinning — this is one amazing piece of engineering.

Impossible Value

With the PlayStation 5 and Xbox Series X launch behind us, 4K gaming has finally arrived. Games like Ratchet & Clank: Rift Apart (our review) deliver Pixar-level graphics and do so at 4K/30, or 4K/60 with reduced fidelity. While it’s possible for both new platforms to deliver 4K/120, it’s still very rare to see. Upgrades to already-released titles may pad out the short list of 4K/60 or 4K/120 games some, but there’s an underlying issue — HDMI 2.1, your TV, and your receiver. Much like the issue with processors, RAM, and motherboards, all of your components need to match to maximize what your GPU can produce. On a console all of that is self-contained and set in stone, but the problem remains. While both consoles provide support for HDMI 2.1, the newest standard which is capable of delivering up to 8K output, your TV and receiver also have to support it — and very few do. In reality, you likely have a 4K TV which supports 60Hz, if even that. This brings me to the GeForce RTX 30X0 series of cards.

NVIDIA’s newest series of cards needs hardware to match. They support HDMI 2.1, 8K outputs, high refresh rate and high resolution monitors, and they do it while having RTX and DLSS enabled. Seeing DOOM Eternal in 8K with RTX and DLSS is something to behold, but most of us are a long way away from realizing that at home. What we can do, however, is enjoy 4K gaming at 60+ (often WAY past 60) without compromise. Sure, the newest consoles will advertise 4K/60 or even 4K/120, but can they do it with all the sliders to the right? I sincerely doubt it.

As the list of games supporting RTX technologies clips past 130, and with support being baked directly into Unity and Unreal Engines moving forward, we should see that list balloon rapidly. Recent additions are big titles you know — DOOM Eternal, Rainbow Six Siege, Fortnite and more, but freshly announced titles like The Ascent, The Persistence Enhanced, and whatever surprise “And you can play it right….NOW” announcements we’ll hear at E3 will benefit from the massive boost AI can bring, and it makes the holiday look even brighter.

There is a revision I need to make to my previous assessments of the 30-series cards. I had previously complained that the dedicated VR port found on the 20-series RTX cards had been removed. With the freshly released HTC Vive Pro 2 requiring a hard-wired DisplayPort connection to function at full resolution and refresh rate, that port becomes irrelevant. NVIDIA must have known this going into development, planning ahead with their card design.

Speaking of VR, NVIDIA has made a recent advancement that is absolutely huge — adding DLSS support. It’s a short list of games so far, but there is no doubt that it’s a huge improvement for games like No Man’s Sky and Everspace 2. This list will inevitably grow, and as a VR junkie myself, I’m excited for the free framerate improvements this tech brings. When the difference between 80 and 100fps can be crippling nausea, I’m all for it.

PRICE TO PERFORMANCE:

Make no bones about it, cards like the Titan, the 2080 Ti, and the 3090 are expensive. They represent the approach of not worrying about whether they should and simply whether they could. I love that kind of crazy as it really pushes the envelope of technology and innovation. NVIDIA held an 81% market share for the GPU market last year, and they easily could have sat back and iterated on the 2080’s power and delivered a lower cost version with a few new bells and whistles attached. That’s not what they did. They owned the field and still came in with a brand new card that blew their own previous models out of the water. The GeForce RTX 3070 has more power than the 2080 Ti and it costs $500 versus the $1200+ you’ll fork out to get your hands on the previous generation’s king. Similarly, the RTX 3070 Ti and RTX 3080 Ti eclipse everything on the market, even their Titan RTX, and at $599 and $699 respectively, they do so in a fashion that beats the previous generation and takes its lunch money. We haven’t seen a generational leap like this, maybe ever. The fact that NVIDIA priced it the way they did makes me think they had a reason, and I don’t think that reason is AMD.

Sure, I’m certain the green team is worried about how the new generation of consoles could impact their market, but as someone who has worked in tech for a very long time, I think there could be another reason. When you go to a theme park there are signs that say “You must be this tall to ride this ride.” They are there for your safety. But safety is rarely fun or exciting. We’ve been supporting old technology like mechanical hard drives and outdated APIs for a very long time. Windows 10 came out five years ago, but there are still plenty of folks who want to use Windows 7. Not pushing the envelope stifles innovation, and it stops us from realizing the things we could achieve. By occasionally raising that “this tall” bar to introduce a new day and a new way, we send a message to consumers that it’s time to upgrade, and we send a strong signal to developers that they can push their own envelopes. That’s how we get games like Cyberpunk 2077, and it’s how we see lighting like we do in Watch Dogs: Legion. It’s what takes us from this, to this. There’s no better time to embrace the future than right now, and at a price to performance value that has seemed impossible, the RTX 3070 Ti can be a sweet price point between value cards like the 3060 and top-tier monsters like the 3080 Ti.