As I mentioned in my RTX 4070 review, there has been a shift toward newer generations of cards, with the RTX 3060 now taking the top spot on Steam’s GPU chart. I also declared the RTX 4070 the new midtier king, pointing to its fantastic rasterized and DLSS-powered results. Now we’ve gotten our hands on a contender for the entry-level crown: the RTX 4060 Ti. Powered by NVIDIA’s Ada Lovelace architecture, and bringing with it DLSS 3, next-generation Tensor and RT cores, expanded L2 cache, and more, could it be the card that gets the 1080p crowd to jump up a level? Time to throw it in our test rig to find out.

Like the last few cards we’ve reviewed, the RTX 4060 Ti is back to taking up just two slots in your machine, which means it will fit nicely into a far smaller case than some of its bigger brothers. It features the same pass-through fin design that we’ve seen on all of NVIDIA’s Founders Edition cards, which has proven exceptional for cooling and airflow. The only other major physical difference is that the trim has moved from a darker gray to chrome. It also slims back to a single power connector. Beyond these minor differences, we’ll need to get into measurements to find the differentiators.

As I mentioned, the RTX 4070 and 4070 Ti are the midtier go-to recommendations for the 1440p crowd, though in many cases those cards are able to handle 4K nicely as well. The RTX 4060 Ti (and obviously the 4060) will be more focused on the 1080p segment, though again it’ll handle 1440p in many cases. We’ll showcase both 1080p and 1440p here, though as I point out in all of my reviews, remember that reviews are a point in time, and driver maturity and game patches are likely to push numbers higher. In fact, if you look at old reviews you may find that the numbers are lower than when you look six to ten months later – that’s to be expected. Instead, focus less on the number and more on the delta between them. It’ll be more indicative of performance than pure numbers, though even that difference can obviously grow.

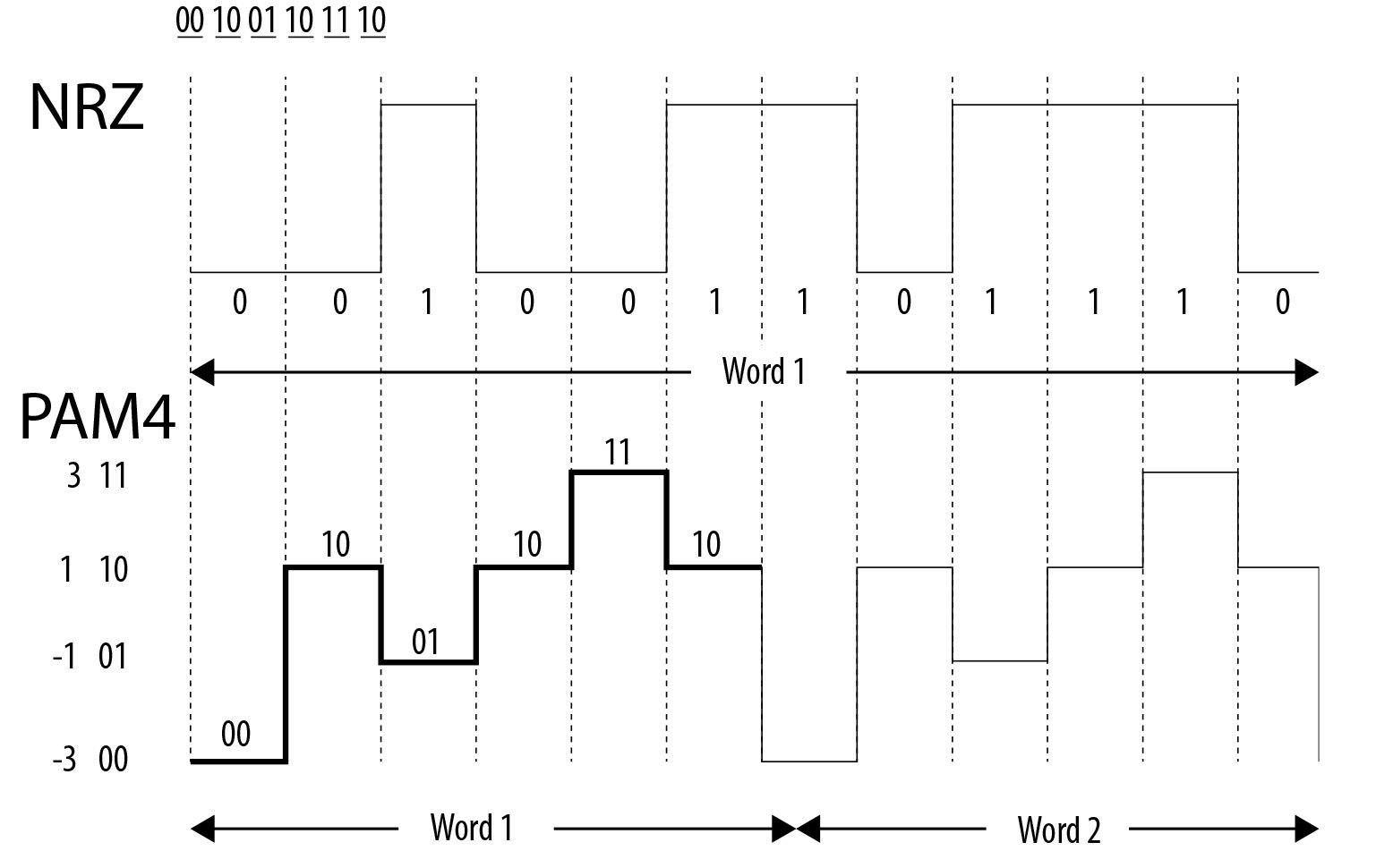

Obviously NVIDIA can’t just keep trimming CUDA cores and memory to make different card variants; at some point they’ll have to adjust other things. The RTX 4060 Ti marks a shift in memory architecture, stepping back from GDDR6X to GDDR6. To explain the difference, we need to have a brief conversation about modulation.

GDDR6 uses a modulation of highs and lows, positive and negative voltage levels, to indicate a 1 or a 0. With this it can pass a single binary digit (or bit) of data per symbol: again, a 1 or a 0. This is called NRZ, or Non-Return-to-Zero, modulation. For illustration, picture it swinging between +5 volts and -5 volts (the real signaling voltages are far lower, but the principle is the same). GDDR6X is a big breakthrough, allowing four distinct voltage levels to be modulated. This means it can send TWO bits at a time: 00, 01, 10, or 11. It does this with pulse amplitude modulation, or PAM-4. Instead of a swing between +5V and -5V, picture four levels: +3V, +1V, -1V, and -3V. Why does this matter, you ask? Well, I’m glad you asked! By encoding two bits per symbol instead of one, you can pass twice as much information per “trip to the well.”
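
Here’s a minimal sketch of that difference in Python. The voltage levels are the illustrative ones from the paragraph above, not real GDDR6/GDDR6X signaling values, and the bit-pair-to-level mapping for PAM-4 is an assumption made for the example. The point is simply that the same 16 bits take 16 NRZ symbols but only 8 PAM-4 symbols.

```python
# Illustrative levels only (volts), not real GDDR6/GDDR6X signaling voltages.
NRZ_LEVELS = {"0": -5.0, "1": +5.0}
# Assumed bit-pair-to-level mapping for PAM-4.
PAM4_LEVELS = {"00": -3.0, "01": -1.0, "10": +1.0, "11": +3.0}


def encode_nrz(bits: str) -> list[float]:
    """NRZ: one symbol per bit."""
    return [NRZ_LEVELS[b] for b in bits]


def encode_pam4(bits: str) -> list[float]:
    """PAM-4: one symbol per pair of bits."""
    if len(bits) % 2:
        raise ValueError("PAM-4 needs an even number of bits")
    return [PAM4_LEVELS[bits[i:i + 2]] for i in range(0, len(bits), 2)]


payload = "1101001011100001"  # 16 bits
print(len(encode_nrz(payload)), len(encode_pam4(payload)))  # 16 8
```

At the same symbol rate, halving the number of symbols is what doubles the data rate.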

Another area where NVIDIA has invested is the L2 cache. The L2 cache holds local and commonly used data sets close to the GPU’s cores. This can be shader functions, a common computed vertex, a mesh, or any number of things that the GPU might want to access quickly and frequently. Because it sits on the GPU die itself, it will always be faster than going out to the card’s GDDR6 memory, let alone crossing the bus to system memory. The L2 cache on the RTX 3070 Ti was a paltry 4MB. The 4060 Ti arrives with a whopping 32MB, close to the RTX 4070’s 36MB. While that doesn’t sound like a lot, an eightfold increase over the 3070 Ti can make a real difference in performance at runtime. The reason is simple: when a GPU executes literally thousands of mathematical calculations, it often does so with the same instructions and data over and over. Having those at the ready to re-use for all of them is only ever a good thing.
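
For a feel of why capacity matters, here’s a toy least-recently-used (LRU) cache simulation. It’s scaled way down and assumes plain LRU replacement, which real GPU caches don’t necessarily use; the 32-entry and 256-entry caches simply mirror the 8:1 ratio between 4MB and 32MB. A “scene” that loops over the same 128 pieces of data thrashes the small cache completely but fits comfortably in the large one.

```python
from collections import OrderedDict


def lru_hit_rate(capacity: int, accesses: list[int]) -> float:
    """Fraction of accesses served by an LRU cache that holds `capacity` entries."""
    cache = OrderedDict()
    hits = 0
    for addr in accesses:
        if addr in cache:
            hits += 1
            cache.move_to_end(addr)  # mark as most recently used
        else:
            cache[addr] = None
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used entry
    return hits / len(accesses)


working_set = list(range(128)) * 10  # the same 128 items, reused over 10 passes
print(lru_hit_rate(32, working_set), lru_hit_rate(256, working_set))  # 0.0 0.9
```

The small cache evicts each item just before it’s needed again, so every access misses; the large one only misses on the first pass.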

There’s an emerging trend among games: a rising need for VRAM. We’ve cited that in several reviews over the last two generations when cards shipped with less than 12GB of video memory. Naturally, increasing resolution is going to soak up quite a bit more VRAM, as will pushing level-of-detail settings. Game engines can be optimized, and there are a number of techniques to help keep resource utilization low, though that’s not something you can rely on. Asset culling, mipmapping, garbage collection, and other techniques can help control VRAM usage, but running at higher resolutions and pre-loading textures can soak it up like a sponge. As a game developer, I’m going to use every ounce of power available to me, and why shouldn’t I? There are minimum requirements, and recommended, but everything after that is gravy. But what happens when the pool runs low?
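
To see why resolution alone eats VRAM, here’s a rough sketch that totals the full-screen render targets a hypothetical deferred renderer might allocate. The target list and byte sizes are assumptions for illustration, not any particular engine’s layout, and textures, geometry, and other assets come on top of this.

```python
# Hypothetical deferred-rendering setup: bytes per pixel for each full-screen target.
BYTES_PER_PIXEL = {
    "gbuffer_albedo_rgba8": 4,
    "gbuffer_normal_rgba8": 4,
    "gbuffer_material_rgba8": 4,
    "gbuffer_motion_rgba8": 4,
    "hdr_color_rgba16f": 8,
    "depth_32bit": 4,
}

RESOLUTIONS = {"1080p": (1920, 1080), "1440p": (2560, 1440), "4K": (3840, 2160)}


def render_target_mib(width: int, height: int) -> float:
    """Memory for one copy of every full-screen target at this resolution, in MiB."""
    total_bytes = width * height * sum(BYTES_PER_PIXEL.values())
    return total_bytes / (1024 ** 2)


for name, (w, h) in RESOLUTIONS.items():
    print(f"{name}: {render_target_mib(w, h):.1f} MiB")
# 1080p: 55.4 MiB, 1440p: 98.4 MiB, 4K: 221.5 MiB
```

Whatever the exact layout, these buffers scale with pixel count, so 1440p needs about 1.78 times what 1080p does before a single texture is loaded.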

When your card runs out of VRAM, it has to use your system RAM to fill the gap. Rather than processing complex calculations, lighting, loading textures, or whatever else within the card, it has to strike out across the motherboard’s PCIe lanes to your CPU and memory. Though PCIe 5 is reaching amazing speeds, and RAM speeds are shooting ever higher, that’s still going to be slower than running everything locally. If a page file is needed, it also means a trip to your local storage (NVMe, hopefully), further slowing things.
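
To put “slower” into numbers, here’s a back-of-the-envelope sketch comparing the card’s own memory bandwidth with the PCIe link it would have to cross. The specs plugged in are my additions rather than figures from our testing: the RTX 4060 Ti pairs 18Gbps GDDR6 with a 128-bit bus and connects over PCIe 4.0 x8. These are theoretical peaks that ignore latency and any protocol overhead beyond line encoding, so treat the ratio as a rough order of magnitude.

```python
def memory_bandwidth_gbs(gbps_per_pin: float, bus_width_bits: int) -> float:
    """Peak VRAM bandwidth in GB/s: per-pin data rate x bus width / 8 bits per byte."""
    return gbps_per_pin * bus_width_bits / 8


def pcie_bandwidth_gbs(gt_per_s_per_lane: float, lanes: int) -> float:
    """Peak one-direction PCIe bandwidth in GB/s (Gen 3 and later use 128b/130b encoding)."""
    return gt_per_s_per_lane * lanes * (128 / 130) / 8


vram = memory_bandwidth_gbs(18, 128)  # RTX 4060 Ti: 18Gbps GDDR6 on a 128-bit bus
link = pcie_bandwidth_gbs(16, 8)      # RTX 4060 Ti link: PCIe 4.0 x8
gen5 = pcie_bandwidth_gbs(32, 16)     # a full PCIe 5.0 x16 slot, for reference
print(f"VRAM {vram:.0f} GB/s, PCIe 4.0 x8 {link:.1f} GB/s, PCIe 5.0 x16 {gen5:.1f} GB/s")
```

Even a full PCIe 5.0 x16 slot offers only a fraction of what the card’s local memory can deliver, which is why spilling out of VRAM hurts.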

It’s important to note that the RTX 4060 Ti comes in two configurations, 8GB and 16GB of VRAM, with a $100 price difference between them. We’ve criticized cards before for shipping with less than 10GB, especially when aimed at 1440p and beyond. The RTX 4060 Ti is aimed at 1080p, and most of the time during testing I saw around 6GB of the 8GB in use at that resolution. While the 4000 series of cards has been more generous with VRAM, the 8GB RTX 4060 Ti will bump up against that ceiling as more complex features are enabled. This is probably an area where NVIDIA could have split the difference with a single 10GB or 12GB card at a $50 markup rather than shipping two SKUs. NVIDIA has put together an article on VRAM usage that digs into the subject beyond what we’ll be doing here. Before we dig into the results, let’s take a look at the rest of what’s under the hood, and what those components do.

CUDA Cores:

Back in 2007, NVIDIA introduced CUDA, or Compute Unified Device Architecture, along with the parallel processing units now known as CUDA cores. In the simplest of terms, these CUDA cores crunch the math behind your graphics. Work is pushed through SMs, or Streaming Multiprocessors, which feed their CUDA cores in parallel, with cache memory keeping the data close at hand. As the CUDA cores receive this mountain of data, the work is divided up amongst them, and each core grabs and processes its share of the instructions. The RTX 2080 Ti, as powerful as it was, had 4,352 cores. The GeForce RTX 4070 arrived with 5,888 CUDA cores, with the RTX 4060 Ti getting a sizable haircut to 4,352. It’d be easy to point at the 2080 Ti and say “same cores, same performance,” but that’d be wildly inaccurate. The RTX 2080 Ti’s oft-quoted 26.9 TFLOPS is its half-precision (FP16) figure; in single precision (FP32) it delivered roughly 13.4 TFLOPS, where the current-generation cores in the RTX 4060 Ti come in at 22 FP32 shader TFLOPS. Remember, too, that we are comparing a flagship card that shipped for $999 at launch (2080 Ti) with a card that retails for $399. Hardly a fair fight, but one worth pointing out, as it showcases just how far we’ve come in terms of power for performance. Compared to the RTX 3060 Ti (also a $399 launch price), the 4060 Ti puts that card to shame: the 3060 Ti musters just 16 TFLOPS of compute and shading power, even with slightly more cores (4,864).
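
Those FP32 figures follow from a simple rule of thumb: cores × 2 FLOPs per clock (one fused multiply-add) × boost clock. Here’s a quick sketch of that arithmetic. The 2080 Ti (1.545GHz) and 3060 Ti (1.665GHz) boost clocks are reference specs I’m supplying for the example, not numbers from our testing; the 4060 Ti’s 2.535GHz comes from this review. Turing ran FP16 at twice its FP32 rate, which is where the 2080 Ti’s 26.9 figure comes from.

```python
def fp32_tflops(cuda_cores: int, boost_clock_ghz: float) -> float:
    """Peak FP32 TFLOPS, counting one fused multiply-add (2 FLOPs) per core per clock."""
    return cuda_cores * 2 * boost_clock_ghz / 1000


# (CUDA cores, boost clock in GHz). The 2080 Ti and 3060 Ti clocks are
# reference-spec values supplied for this sketch.
CARDS = {
    "RTX 2080 Ti": (4352, 1.545),
    "RTX 3060 Ti": (4864, 1.665),
    "RTX 4060 Ti": (4352, 2.535),
}

for name, (cores, clock) in CARDS.items():
    print(f"{name}: {fp32_tflops(cores, clock):.1f} FP32 TFLOPS")
# RTX 2080 Ti: 13.4, RTX 3060 Ti: 16.2, RTX 4060 Ti: 22.1
```

With identical core counts, the 2080 Ti and 4060 Ti differ almost entirely by clock speed, which is exactly why “same cores, same performance” doesn’t hold.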

Since the launch of the 4000 series of cards, we’ve seen time and again that this newest iteration of hardware, combined with the latest DLSS technology, results in staggering framerate improvements. As we have throughout this generation, we’ll be showcasing the raw power of the card, as well as the effect of DLSS on framerate. Let’s talk about components before we get into those benchmarks.

What is a Tensor Core?

Here’s another example of “but why do I need it?” within GPU architecture: the Tensor Core. This technology from NVIDIA had seen wider use in high-performance supercomputing and data centers before finally arriving on consumer-focused cards with the RTX 2000 series. Now, with the RTX 40-series, we have the fourth generation of these processors. For frame of reference, the 2080 Ti had 544 second-gen Tensor cores, the 3080 Ti provided 320, and the 3090 shipped with 328. The 4090 ships with 512 Tensor Cores, the RTX 4080 has 304, the RTX 4070 has 184, and now the 4060 Ti has 136 fourth-generation Tensor Cores. Using that 3060 Ti for comparison, that card had 152 of them, but obviously of the prior generation. Let’s put a pin in that thought until we perform some measurements. So what does a Tensor core do?

Put simply, Tensor cores are your deep learning / AI / neural net processors, and they are the power behind technologies like DLSS. The RTX 4000 series cards bring with them DLSS 3, a generational leap over what we saw with the 3000 series cards and largely responsible for the massive framerate uplift claims NVIDIA has made. We’ll be testing to see how much of the improvement is a result of DLSS and how much is the raw power of the new hardware. This is important, as not every game supports DLSS, though that may be changing.
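
Under the hood, a Tensor Core’s job is matrix multiply-accumulate, D = A × B + C, on small tiles in a single operation, typically taking low-precision inputs and accumulating at higher precision. Neural networks like the one behind DLSS boil down to enormous numbers of these. Here’s a plain-Python sketch of that math; the 4×4 tile and Python floats are purely illustrative, and this is not how a Tensor Core is actually programmed (that happens through CUDA libraries).

```python
Matrix = list[list[float]]


def tile_mma(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """Return D = A x B + C for small square tiles (the Tensor Core primitive)."""
    n = len(a)
    return [
        [sum(a[i][k] * b[k][j] for k in range(n)) + c[i][j] for j in range(n)]
        for i in range(n)
    ]


identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
weights = [[float(4 * i + j) for j in range(4)] for i in range(4)]
bias = [[1.0] * 4 for _ in range(4)]

# Multiplying by the identity returns `weights`, so D is weights + 1 everywhere.
d = tile_mma(identity, weights, bias)
print(d[0])  # [1.0, 2.0, 3.0, 4.0]
```

A GPU does this across thousands of tiles at once, which is where dedicated hardware pays off.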

DLSS 3

One of the things DLSS 1 and 2 suffered from was adoption. NVIDIA’s neural network would ingest the data and make decisions on what to do with the next frame, and most often the results were fantastic. 2.0 brought cleaner images that could actually look better than the original source. Still, adoption at the game level was needed. Some companies really embraced NVIDIA’s work, and we got beautiful visuals from games like Metro Exodus, Shadow of the Tomb Raider, Control, Deathloop, Ghostwire: Tokyo, Dying Light 2 Stay Human, Far Cry 6, and Cyberpunk 2077. Upcoming games like Diablo IV, alongside recently released titles including Redfall, Dead Space, Gripper, and Forza Horizon 5, also get the DLSS 3 treatment. NVIDIA worked diligently to build engine-level adoption for DLSS 2.0, and now with DLSS 3 the speed of adoption is even faster.

DLSS 3 is exclusive to the 4000 series cards. Prior generations of cards fall back to DLSS 2.0, as DLSS 3 needs the advanced hardware found only on 4000-series cards (namely the 4th-Gen Tensor Cores and the new Optical Flow Accelerator). Here’s a quick video of the games that already support DLSS 3, as well as a few on the horizon (you might pause at the end… it’s a long list):

To date there are over 300 games that utilize DLSS 2.0, and just shy of 100 games that already support DLSS 3 or have announced support in the very near future. More importantly, DLSS is natively supported in the Frostbite Engine (the Madden series, the Battlefield series, Need for Speed Unbound, the Dead Space remake, etc.), Unity (Fall Guys, Among Us, Cuphead, Genshin Impact, Pokemon Go, Ori and the Will of the Wisps, Beat Saber), and Unreal Engines 4 and 5 (the next Witcher game, Warhammer 40,000: Darktide, Hogwarts Legacy, Loopmancer, Atomic Heart, and hundreds of other unannounced games). While it may be a bummer to hear that DLSS 3 is exclusive to the 4000 series of cards, there is light at the end of the tunnel: DLSS 3 is now supported at the engine level by both Epic’s Unreal Engines 4 and 5 and Unity, covering the vast majority of games being released for the foreseeable future. Unreal went a step further with Unreal Engine 5.2, making DLSS 3 a simple plugin. I don’t know what additional work has to be done by developers, but having it available at a deeper level should grease the skids. With native engine support, we’ve already seen the list double in the last 30 days, and I can only imagine that number continuing its vertical climb.

If you are unfamiliar with DLSS, it stands for Deep Learning Super Sampling, and it works the way the name suggests. A deep learning model, trained by NVIDIA and run on the card’s Tensor cores, takes a frame from a game, analyzes it, and supersamples it (that is to say, raises the resolution) while sharpening it. DLSS 1.0 and 2.0 relied on a technique where a frame is analyzed and the next frame is then projected, and the whole process continues to leap back and forth like this the entire time you are playing. DLSS 3 builds on this with Optical Multi Frame Generation, which creates entirely new frames. This means it is no longer just adding more pixels, but constructing whole new AI frames in between the original frames to deliver higher framerates.
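
To make “frames in between” concrete, here’s a deliberately tiny sketch of motion-compensated interpolation on a single row of pixels. It’s a toy under heavy assumptions: the real thing combines the Optical Flow Accelerator’s flow field with game motion vectors and a neural network that also handles newly uncovered areas, whereas this simply moves each pixel halfway along a known motion vector and leaves holes as background.

```python
BACKGROUND = 0


def midpoint_frame(frame: list[int], flow: list[int]) -> list[int]:
    """Toy 1D frame interpolation: move each pixel halfway along its motion vector.

    flow[i] is how far (in pixels) the content at position i travels before the
    next rendered frame. Uncovered areas are simply left as background.
    """
    generated = [BACKGROUND] * len(frame)
    for i, (value, motion) in enumerate(zip(frame, flow)):
        if value == BACKGROUND:
            continue
        target = i + round(motion / 2)  # halfway between the two rendered frames
        if 0 <= target < len(generated):
            generated[target] = value
    return generated


frame_a = [0, 0, 7, 7, 0, 0, 0, 0, 0, 0]  # a 2-pixel "object" at positions 2-3
flow_ab = [0, 0, 4, 4, 0, 0, 0, 0, 0, 0]  # it moves 4 pixels right by frame B
print(midpoint_frame(frame_a, flow_ab))  # [0, 0, 0, 0, 7, 7, 0, 0, 0, 0]
```

The generated frame shows the object halfway along its path, which is the basic idea; the hard part the real system solves is getting the motion right for every pixel.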

A peek under the hood of DLSS 3 shows that, with Super Resolution in Performance mode, the AI behind the technology is actually reconstructing ¾ of the first frame, the entirety of the second frame, then ¾ of the third, the entirety of the fourth, and so on. Using these frames, alongside the optical flow field from the Optical Flow Accelerator, DLSS 3 predicts the next frame based on where any objects in the scene are, as well as where they are going. This approach reconstructs 7/8ths of the displayed pixels from the 1/8th that are traditionally rendered, and the predictive algorithm does so in a way that is almost undetectable to the human eye. Lights, shadows, particle effects, reflections, light bounce: all of it is calculated this way, resulting in the staggering improvements I’ll be showing you in the benchmarking section of this review.
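
The 1/8th figure is straightforward arithmetic, assuming Super Resolution in Performance mode (half the output resolution on each axis) and one generated frame for every rendered frame. A small sketch:

```python
from fractions import Fraction


def rendered_fraction(scale_per_axis: Fraction, generated_per_rendered: int) -> Fraction:
    """Share of displayed pixels that are traditionally rendered rather than AI-produced."""
    pixels_per_rendered_frame = scale_per_axis ** 2  # e.g. 1/2 x 1/2 = 1/4 of the output
    displayed_frames_per_rendered = 1 + generated_per_rendered
    return pixels_per_rendered_frame / displayed_frames_per_rendered


rendered = rendered_fraction(Fraction(1, 2), generated_per_rendered=1)
print(rendered, 1 - rendered)  # 1/8 7/8
```

Each rendered frame covers a quarter of the output pixels, and every other displayed frame is generated outright, so 1/4 × 1/2 = 1/8.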

The launches of DLSS 1, 2, and 3 all carried some oddities with image quality. Odd artifacting and ghosting, shimmering around edges and UI elements, and other visual hiccups hit some games harder than others. More recent patches have fixed many of the visual issues, though flickering UI elements can still appear when frame generation is enabled. This mostly occurs when a text box or UI element is in motion, such as a tag above a racer’s head or subtitles above a character. I saw this fairly extensively in Atomic Heart, though it was fixed in Cyberpunk 2077; your mileage may vary, clearly. Thankfully this is becoming less and less of an issue through game patches and driver updates (with both of the aforementioned titles fixed at this point), though most of this relies on the developer more than NVIDIA. You gotta patch your games, folks.

DLSS 3 is actually the culmination of DLSS 2 (DLSS Super Resolution), Reflex (NVIDIA’s latency-reduction tech, which offsets the latency added by frame generation), and DLSS Frame Generation. As such, we see it implemented in a few ways, with some developers letting a user turn on Frame Generation with Reflex, for example, without also applying Super Resolution. This gives the player more control over latency, image quality, and framerate independently. Hogwarts Legacy and Hitman III come to mind, as both use several DLSS technologies in tandem to provide more nuanced choice (though the latter just got a major update to support Frame Generation as well). If you want to get into a bit of tinkering, you can occasionally fix issues by replacing the DLSS .dll, though fixing this should fall to the developer, not to gamers. As I mentioned, your mileage may vary, but you’ll probably be too busy enjoying a stupidly high framerate and resolution to notice.

Boost Clock:

The boost clock is hardly a new concept, going all the way back to the GeForce GTX 600 series, but it’s a very necessary part of wringing every frame out of your card. Essentially, the base clock is the “stock” running speed you can expect from your card at any given time, no matter the circumstances. The boost clock, on the other hand, lets the GPU adjust its speed dynamically, pushing beyond the base clock when additional power is available. The RTX 3080 Ti had a boost clock of 1.66GHz, with a handful of third-party cards sporting overclocked speeds in the 1.8GHz range. The RTX 4090 ships with a boost clock of 2.52GHz, and the 4080 is not far behind at 2.51GHz. The RTX 4070 has a boost clock of 2.475GHz, and the RTX 4060 Ti actually edges past it at 2.535GHz. It’s becoming clear that these small differences in boost clock aren’t the big differentiator they once were.
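
As a rough illustration of “pushing beyond the base clock if additional power is available,” here’s a toy boost loop. Everything in it is hypothetical: the clock values, the 15MHz step size, and especially the power model (power rising with the cube of clock speed, since voltage has to climb roughly in step with frequency). NVIDIA’s actual GPU Boost algorithm also weighs temperature and voltage limits and isn’t published in this form.

```python
def boost_clock_mhz(
    base_mhz: int,
    max_boost_mhz: int,
    power_limit_w: float,
    watts_at_base: float,
    bin_mhz: int = 15,
) -> int:
    """Toy boost loop: step the clock up one bin at a time while estimated power fits.

    Hypothetical power model: power scales with the cube of clock speed
    (P ~ f * V^2, with voltage rising roughly in step with frequency).
    """
    clock = base_mhz
    while clock + bin_mhz <= max_boost_mhz:
        candidate = clock + bin_mhz
        estimated_w = watts_at_base * (candidate / base_mhz) ** 3
        if estimated_w > power_limit_w:
            break  # no headroom left: stay at the last clock that fit
        clock = candidate
    return clock


# Hypothetical numbers: a light scene leaves more power headroom than a heavy one.
light = boost_clock_mhz(2000, 2500, power_limit_w=150, watts_at_base=80)
heavy = boost_clock_mhz(2000, 2500, power_limit_w=150, watts_at_base=140)
print(light, heavy)
```

The same card lands on a higher clock in the lighter scene, which is why boost clocks are best read as a ceiling rather than a guarantee.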

What is an RT Core?

Arguably one of the most misunderstood aspects of the RTX series is the RT core. This core is a dedicated unit within each streaming multiprocessor (SM) where intersections between light rays and triangles are calculated. Put simply, it’s the math unit that makes realtime lighting work and look its best. Multiple SMs and RT cores work together to interleave the instructions and process them concurrently, handling a multitude of light rays that intersect with the objects in the environment in multiple ways, all at the same time. In practical terms, it means a team of graphic artists and designers don’t have to “hand-place” lighting and shadows and then adjust the scene based on light intersection and intensity. With RTX, they can simply place the light source in the scene and let the card do the work. I’m oversimplifying it, for sure, but that’s the general idea.
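
For a sense of the math involved, here’s a software ray/triangle intersection test. RT cores do this (plus traversal of the acceleration structure that narrows down which triangles to test) in fixed-function hardware, and NVIDIA doesn’t publish the exact circuit, so this uses the Möller-Trumbore algorithm, a standard software technique, as a stand-in rather than a description of what the hardware does.

```python
from typing import Optional

Vec3 = tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def ray_triangle_distance(
    origin: Vec3, direction: Vec3, v0: Vec3, v1: Vec3, v2: Vec3, eps: float = 1e-9
) -> Optional[float]:
    """Möller-Trumbore test: distance t along the ray to the triangle, or None on a miss."""
    edge1, edge2 = sub(v1, v0), sub(v2, v0)
    h = cross(direction, edge2)
    det = dot(edge1, h)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = inv_det * dot(s, h)  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, edge1)
    v = inv_det * dot(direction, q)  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = inv_det * dot(edge2, q)
    return t if t > eps else None  # ignore hits behind the ray origin


triangle = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
down = (0.0, 0.0, -1.0)
print(ray_triangle_distance((0.25, 0.25, 1.0), down, *triangle))  # 1.0
print(ray_triangle_distance((0.9, 0.9, 1.0), down, *triangle))    # None
```

A single frame can involve millions of tests like this, which is why offloading them to dedicated hardware matters.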

The RT core is the engine behind your realtime lighting processing. It’s worth separating it from “shader processing power,” though: shader TFLOPS measure the FP32 throughput of the CUDA cores, while RT cores carry their own separate ray-tracing rating. For comparison, the RTX 3080 Ti was capable of 34.10 TFLOPS of FP32 shading power, with the 3090 putting in 35.58. The RTX 4090? 82.58 TFLOPS. The 4080 brought roughly 49 TFLOPS, and the 4070, with its 46 RT cores, provides 29 TFLOPS of FP32 shading power. As we mentioned earlier, the 4060 Ti comes in at 22 TFLOPS of FP32 shading power. The closest card to that value is actually the RTX 3070 Ti, which delivered 21.75 TFLOPS at a launch MSRP of $599.

Benchmarks:

Given that this is an entry-level card, this GPU is best suited for 1080p, with the occasional jump up to 1440p. With DLSS 3, you’ll see some serious frame rate improvements, so it’s worth spending some time looking at both. As such, we’ve benchmarked everything at 1080p and 1440p, with and without DLSS. Let’s get into the numbers:

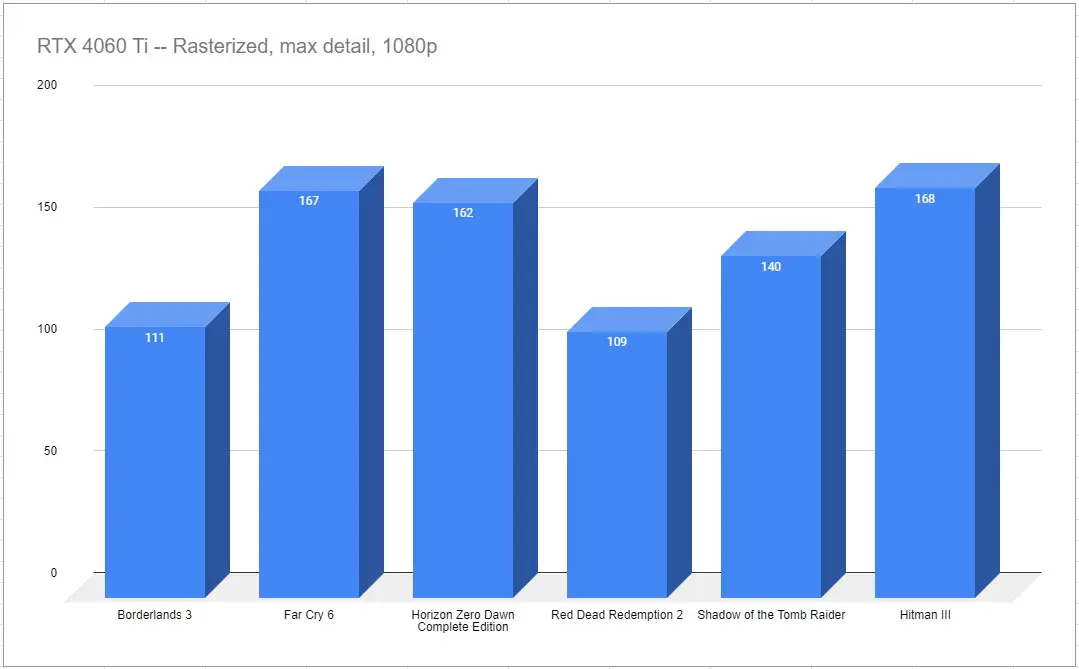

As usual, I wanted to start with rasterized performance: that is to say, performance without the aid of any upsampling or frame generation, a measurement of raw power. These games are a few years old at this point, but don’t think they are anything less than eyebrow-raisingly beautiful. Across the board we see values that’ll push a 144Hz or 120Hz monitor, with silky smooth framerates over 100fps.
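
For context on those refresh rates, here’s a quick conversion from framerate to per-frame time budget. To keep a 144Hz monitor fed, each frame has to be finished in under 7 milliseconds.

```python
def frame_time_ms(fps: float) -> float:
    """How long the GPU has to finish each frame, in milliseconds."""
    return 1000.0 / fps


for fps in (60, 100, 120, 144):
    print(f"{fps} fps = {frame_time_ms(fps):.2f} ms per frame")
# 60 fps = 16.67 ms, 100 fps = 10.00 ms, 120 fps = 8.33 ms, 144 fps = 6.94 ms
```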

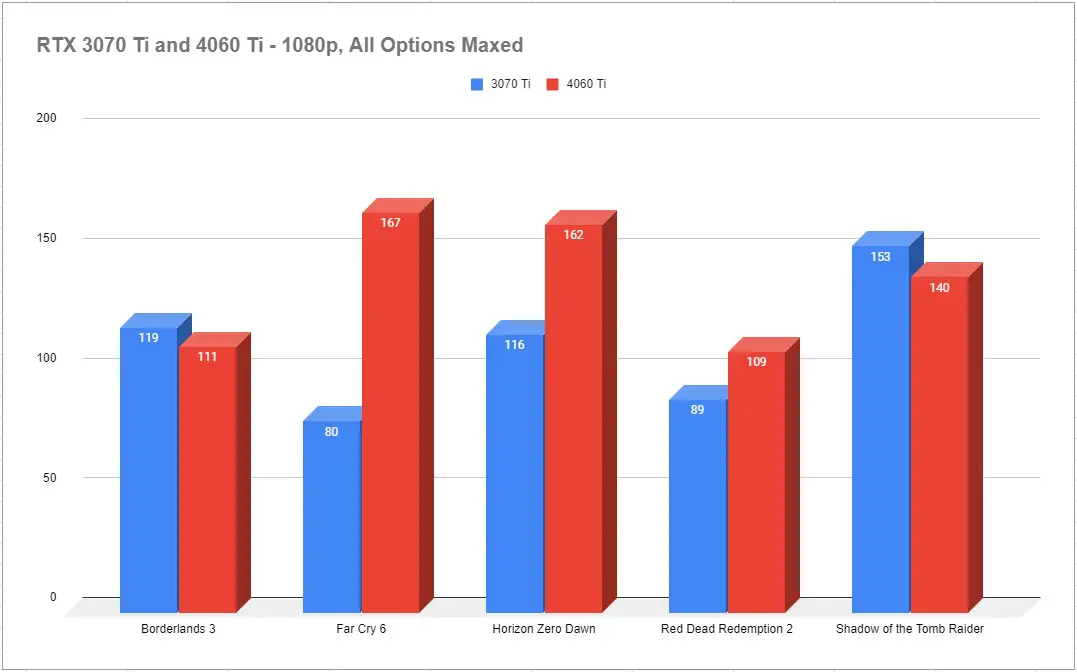

Now that we’ve done the math and found this card to be roughly equivalent to an RTX 3070 Ti in FP32 throughput, let’s take a look at how those two compare on some of these same titles:

Here we see the RTX 4060 Ti, a $399 card, going head to head against the RTX 3070 Ti, a $599 card at launch, and delivering the goods. A single graph shows the effect of that generational leap, even with the reduction in cores. Let’s take a look at DLSS-enabled titles.

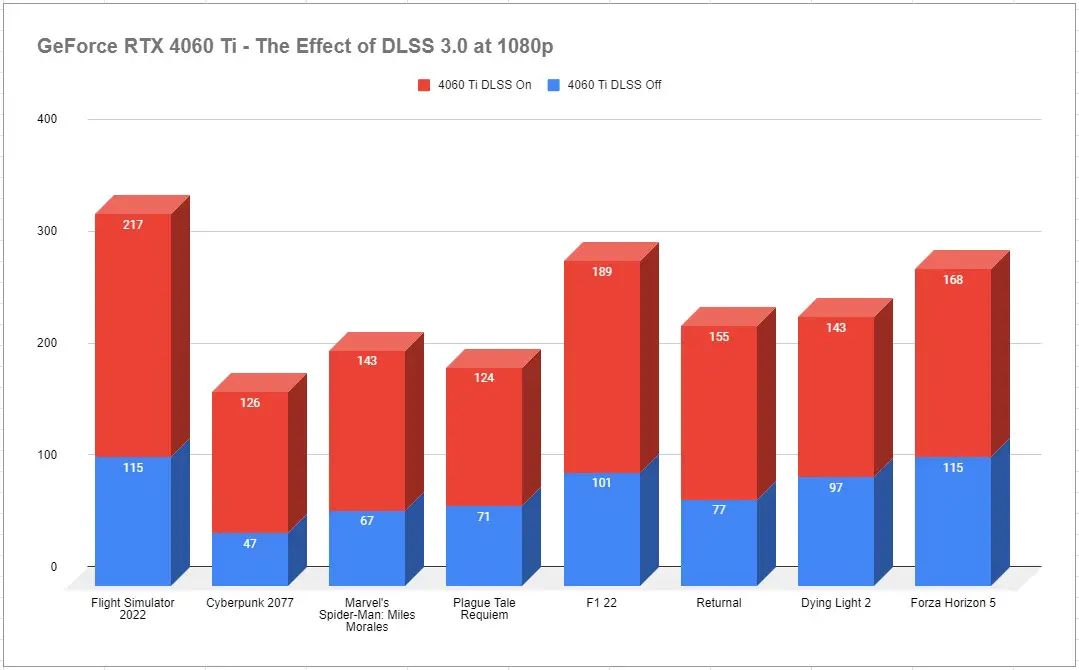

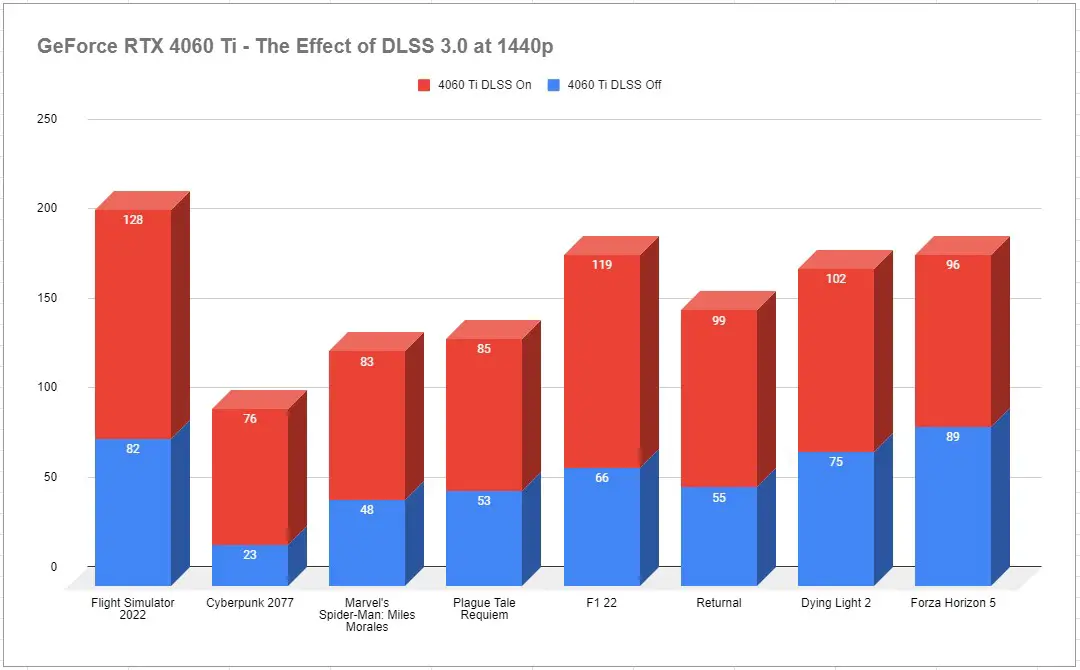

This stack shows the raw numbers for the current crop of DLSS 3-enabled titles, including newcomer Forza Horizon 5. None of the rasterized numbers are anything to cry about, but enabling DLSS 3 is an absolute game changer. We see NVIDIA backing up their claim, and in some cases going well beyond it: DLSS can literally double framerate in a lot of titles. Obviously some games benefit more than others, but it’s clear that DLSS 3 can fill the gap. In games like Returnal, where split-second timing is crucial to survival, frames make games, and the RTX 4060 Ti delivers them. Frame generation does add a tiny bit of latency into the mix, but with NVIDIA Reflex set to On + Boost, it’s either imperceptible or, in some cases, even better than “stock.”

While the card isn’t aimed at the 1440p market, it’s important to know that the card can stretch its legs. Let’s look into those numbers, again with and without DLSS, and see if there’s enough horsepower in the RTX 4060 Ti.

It’s clear that without DLSS 3, Cyberpunk 2077 is almost unplayable at max settings, but with it, it’s not only playable but in that 60+fps butter zone. That trend continues across the board, with Flight Simulator and F1 2022 showing the biggest improvement. Forza Horizon 5 also sees a huge improvement, which is no surprise, as it has the most recent DLSS 3 implementation on the list and benefits from the most mature version of the technology. This extra bit of slack will allow players to play with the DLSS slider a bit, either moving it closer to Ultra Performance for more frames, or to Quality for a cleaner look.

To be clear, the early implementations of DLSS 3 had a few hiccups. You could see ghosting, or letters and numbers in UI elements being mangled. There are now techniques to upsample everything except the UI, meaning that problem is all but gone. Similarly, the ghosting effect has begun to disappear as drivers mature and developers implement DLSS 3 more effectively. We are back in “it’s free frames” territory, as the wildly increased frame rate is worth the occasional graphical hiccup, if you see it at all.

Pricing:

We’ve made this point a few times, but at the risk of belaboring it: this card ships at $399 but delivers performance equivalent to a card that launched at $599. A quick look at Amazon shows those RTX 3070 Ti cards still retailing for $549, making it downright dumb to buy one over the newer card. When you factor in DLSS 3, AV1 encoding, improvements to AI upsampling, and all of the other myriad improvements exclusive to the 4000 series of cards, plus a current warranty to boot, it sounds like an easy decision.

GeForce RTX 4060 Ti

Excellent

A 1080p card that can punch up to 1440p with the help of DLSS, the GeForce RTX 4060 Ti delivers the performance of a $599 card for $399, and it does so with all of the advantages of NVIDIA’s 4000 series GPUs. While it’s light on memory in the 8GB configuration, it represents tomorrow’s tech at today’s price.

Pros

- Power equivalent of a $599 card at a $399 price

- Performs well in rasterized tests at 1080p

- Solid 1440p performance with DLSS 2.0 or 3

- All of the advantages granted by the Ada Lovelace architecture

Cons

- 8GB of GDDR6 VRAM feels too light

- Not as much headroom as the 4070 or 4070 Ti