The sun rises in the east and sets in the west, fire is hot and water is wet, and there’s a 100% chance that if there’s a 3080, there will be a 3080 Ti. Well, here we are. Freshly announced at the Computex Keynote, the RTX 3080 Ti represents the top of the upgrade cycle for consumer-grade cards. While it’s likely we’ll see other cards and chips from the 30 series using the same tech, it’s time to unpack the most powerful card on the market today and see what the Titanium version (hence the Ti designation) of the 3080 brings you.

Before we get into the weeds with the RTX 3080 Ti, I want to go over some of the hardware that drives the card. It’ll help with context when comparing the card against others in the 30 series lineup, and it should explain why numbers aren’t always the whole story. I’ll try to explain them succinctly and in layman’s terms so you don’t have to have an electronics engineering degree to understand them. Suffice it to say that some nuance may be lost in the summation, but the general idea is correct. If you disagree, hit me up on Discord — I love to talk shop.

CUDA Cores:

Back in 2007, NVIDIA introduced a parallel computing platform called CUDA, or Compute Unified Device Architecture. In the simplest of terms, CUDA cores are the units that process graphics and compute work in parallel. The data is pushed through SMs, or Streaming Multiprocessors, which feed the CUDA cores in parallel through the cache memory. As the CUDA cores receive this mountain of data, they divide the instructions up amongst themselves and process them individually. The RTX 2080 Ti, as powerful as it is, has 4,352 cores, and the GeForce RTX 3080 has 8,704 of them. The RTX 3080 Ti ups the ante with a whopping 10,240 CUDA cores, just 256 shy of the behemoth 3090’s 10,496! Now, understand that more CUDA cores doesn’t always mean faster, but we’ll get into the other elements that explain why in this case it very much does.

Boost Clock:

The boost clock is hardly a new concept, having been introduced with the GeForce GTX 600 series, but it’s a very necessary part of wringing every frame out of your card. Essentially, the base clock is the “stock” running speed of your card that you can expect at any given time no matter the circumstances. The boost clock, on the other hand, allows the speed to be adjusted dynamically by the GPU, pushing beyond this base clock if additional power is available for use. This dynamic adjustment is done constantly, allowing the card to push to higher frequencies as long as the temperatures remain within tolerance thresholds. With better cooling solutions (more on that later), the GeForce RTX 30-series is capable of driving far higher boost clock speeds. What surprised me here, though, is that the RTX 3080 Ti has a boost clock of 1665 MHz, a reduction from the RTX 3080’s clock of 1710 MHz. That’s still a massive improvement over the 2080 Ti’s speed of 1545 MHz, but we’ll have to see what effect that has on benchmarks. Since we’ve already seen a card or two from 3rd party manufacturers with clock speeds of 1830 MHz, it’s clear there is plenty of headroom to overclock.
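To make the idea of a dynamically adjusted clock concrete, here is a toy sketch of a boost loop. It is not NVIDIA’s actual GPU Boost algorithm: the 1365 MHz base clock, the 83 C temperature limit, and the 15 MHz step size are all assumptions chosen for illustration, and real cards also weigh power draw and voltage.

```python
def next_clock_mhz(current_mhz, temp_c, base_mhz=1365, boost_mhz=1665,
                   temp_limit_c=83, step_mhz=15):
    """Toy governor: step the clock up while the card is cool, down when hot.

    base_mhz, temp_limit_c and step_mhz are illustrative assumptions,
    not values taken from the review or from NVIDIA documentation.
    """
    if temp_c < temp_limit_c:
        # Thermal headroom available: climb toward the rated boost clock.
        return min(current_mhz + step_mhz, boost_mhz)
    # Too hot: back off, but never drop below the base clock.
    return max(current_mhz - step_mhz, base_mhz)


def simulate(temps_c, start_mhz=1365):
    """Apply the governor once per temperature sample and record each clock."""
    clock = start_mhz
    history = []
    for temp in temps_c:
        clock = next_clock_mhz(clock, temp)
        history.append(clock)
    return history


if __name__ == "__main__":
    # A cool start, a hot spell, then recovery.
    print(simulate([60, 65, 70, 85, 86, 72]))
```

The point of the sketch is only that the clock is a moving target governed by headroom, which is why better cooling translates into higher sustained speeds.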

GDDR6 vs. GDDR6X:

You may or may not have noticed, but there is a very subtle difference between the 2080 Ti and 3070 on one hand, and the 3080, 3080 Ti, and 3090 on the other. The former two use GDDR6 memory, the standard for video memory, which is also used in both new console platforms. On the other hand, the RTX 3080, 3080 Ti, and 3090 all use GDDR6X. What’s the difference? Well, the 2080 Ti is capable of pushing 616 GB/s through its memory pipeline, a fairly staggering amount, but when textures can be massive at 4K or even 8K resolutions, a very necessary rate. The GeForce RTX 3080 delivers 760 GB/s of memory bandwidth, but the RTX 3080 Ti ups that to an eye-watering 912 GB/s, just shy of the 3090’s 936 GB/s. The RTX 3080 may have clocked in at 10 GB of GDDR6X, but some games are looking for a little more headroom, such as Resident Evil: Village. While these are still just reservations, not utilization, having a little more headroom is always nice. The RTX 3080 Ti pushes the number to 12 GB of the high-density memory, but more is not the story here. It’s what Micron has done with memory architecture that has more of an impact on performance.
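The bandwidth figures above can be reproduced with the usual peak-bandwidth arithmetic: bus width in bits, times the per-pin data rate, divided by eight bits per byte. The bus widths and data rates in this sketch are not given in this review; they are the commonly published specifications and should be treated as assumptions.

```python
def memory_bandwidth_gbs(bus_width_bits, data_rate_gbps):
    """Peak memory bandwidth in GB/s.

    bus_width_bits: width of the memory interface in bits.
    data_rate_gbps: effective per-pin data rate in Gbit/s.
    """
    return bus_width_bits * data_rate_gbps / 8


# (bus width in bits, per-pin data rate in Gbit/s).
# Assumed values from commonly published spec sheets, not from this review.
CARDS = {
    "RTX 2080 Ti (GDDR6)": (352, 14.0),
    "RTX 3080 (GDDR6X)": (320, 19.0),
    "RTX 3080 Ti (GDDR6X)": (384, 19.0),
    "RTX 3090 (GDDR6X)": (384, 19.5),
}

if __name__ == "__main__":
    for name, (width, rate) in CARDS.items():
        print(f"{name}: {memory_bandwidth_gbs(width, rate):.0f} GB/s")
```

Under those assumptions, the 3080 Ti’s jump over the 3080 comes from the wider bus rather than from faster memory chips.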

Binary is what computers speak: 1s and 0s. By sending electrical signals that indicate on or off states, we can create all sorts of things from programs to graphics to digital sound. Well, Micron asked what would happen if we could more accurately measure the level of that electrical signal and push not just on and off, but four states through the pipeline. GDDR6X is the answer to that question, as it does exactly that. This has never been done before with memory, and since memory forms the pathways for all that a GPU does, this has impacts across the entire platform. Whether you are doing video editing, gaming, or letting your video card do AI work like DLSS, being able to carry twice as much data per bite at the apple is phenomenal. For those of you interested in the math behind the scenes, GDDR6X’s four states (PAM4, or four-level Pulse Amplitude Modulation) are 00, 01, 11, and 10, allowing an increase in memory bandwidth without significantly increasing power or heat, and without making each state harder to detect reliably. This is one of the major secret sauce items that drive power in the higher end cards from NVIDIA.
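A small sketch shows why four states double the data per signal. It encodes the same bit stream two ways: one bit per symbol (plain on/off signalling) and two bits per symbol using the 00, 01, 11, 10 ordering mentioned above. Mapping those pairs to ascending voltage levels 0 through 3 is an assumption for illustration, not a description of Micron’s actual electrical design.

```python
# Bit pairs mapped to four signal levels (0 = lowest voltage, 3 = highest).
# The 00, 01, 11, 10 ordering is a Gray code: neighbouring levels differ by
# only one bit, so misreading a level by one step corrupts a single bit.
# Assigning them to ascending levels is an illustrative assumption.
PAM4_LEVELS = {"00": 0, "01": 1, "11": 2, "10": 3}


def two_level_symbols(bits):
    """Classic on/off signalling: one symbol per bit."""
    return [int(b) for b in bits]


def pam4_symbols(bits):
    """Four-level signalling: one symbol per pair of bits."""
    if len(bits) % 2 != 0:
        raise ValueError("PAM4 needs an even number of bits")
    return [PAM4_LEVELS[bits[i:i + 2]] for i in range(0, len(bits), 2)]


if __name__ == "__main__":
    data = "00011110"
    print(len(two_level_symbols(data)), "symbols with two levels")
    print(len(pam4_symbols(data)), "symbols with four levels")
```

The same eight bits need eight symbols with on/off signalling but only four with PAM4, which is where the extra bandwidth at a similar signalling rate comes from.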

What is an RT Core?

Arguably one of the most misunderstood aspects of the RTX series is the RT core. This core is a dedicated pipeline to the streaming multiprocessor (SM) where light rays and triangle intersections are calculated. Put simply, it’s the math unit that makes realtime lighting work and look its best. Multiple SMs and RT cores work together to interleave the instructions, processing them concurrently, allowing the processing of a multitude of light sources intersecting with the objects in the environment in multiple ways, all at the same time. In practical terms, it means a team of graphical artists and designers don’t have to “hand-place” lighting and shadows, and then adjust the scene based on light intersection and intensity — with RTX, they can simply place the light source in the scene and let the card do the work. I’m oversimplifying it, for sure, but that’s the general idea.
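For a sense of the math an RT core is built to accelerate, here is a software version of a single ray and triangle intersection test using the well-known Möller-Trumbore algorithm. This is a textbook method shown for illustration; it is not a description of how NVIDIA implements the test in hardware, and the helper names are my own.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_hits_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Möller-Trumbore test: distance along the ray to the hit, or None."""
    edge1 = sub(v1, v0)
    edge2 = sub(v2, v0)
    pvec = cross(direction, edge2)
    det = dot(edge1, pvec)
    if abs(det) < eps:
        return None  # Ray is parallel to the triangle's plane.
    inv_det = 1.0 / det
    tvec = sub(origin, v0)
    u = dot(tvec, pvec) * inv_det  # First barycentric coordinate.
    if u < 0.0 or u > 1.0:
        return None
    qvec = cross(tvec, edge1)
    v = dot(direction, qvec) * inv_det  # Second barycentric coordinate.
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(edge2, qvec) * inv_det
    return t if t > eps else None  # Ignore hits behind the ray origin.


if __name__ == "__main__":
    triangle = ((0, 0, 0), (1, 0, 0), (0, 1, 0))
    # A ray pointing straight down at the triangle from one unit above it.
    print(ray_hits_triangle((0.25, 0.25, 1), (0, 0, -1), *triangle))
```

A lit scene can require an enormous number of these tests every frame, which is why handing them to dedicated hardware frees the rest of the GPU for shading.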

The Turing architecture cards (the 20X0 series) were the first implementations of this dedicated RT core technology. The 2080 Ti had 72 RT cores, delivering 26.9 TFLOPS of throughput, whereas the RTX 3080 has 68 2nd-gen RT cores, offering up 29.77 TFLOPS of power. The RTX 3080 Ti pushes this to 80 RT cores, just shy of the 82 cores on the RTX 3090. With double the throughput of the Turing-based versions of the previous generation, the RTX 3080 Ti is capable of delivering 34.10 TFLOPS of light and shadow processing power, all while running concurrent ray tracing, shading, and compute. This isn’t back-of-the-envelope marketing nonsense: the 3080 Ti is capable of delivering twice the number of ray and triangle intersection calculations, which makes realtime lighting and shadows that much easier for developers to implement as they create realistic worlds for us to inhabit. For reference, the 3090 puts in 35.58 TFLOPS, making the RTX 3080 Ti an absolute monster for realtime lighting and shading.
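The TFLOPS figures quoted above for the 3080 and 3080 Ti line up with the standard peak-throughput estimate: CUDA cores, times two operations per clock, times the boost clock. The core counts and boost clocks below come from earlier in this review; the factor of two (one fused multiply-add per core per clock) is an assumption on my part rather than something stated here.

```python
def peak_fp32_tflops(cuda_cores, boost_mhz, flops_per_clock=2):
    """Theoretical peak single-precision throughput in TFLOPS.

    flops_per_clock=2 assumes each core retires one fused multiply-add
    (counted as two operations) per clock cycle.
    """
    return cuda_cores * flops_per_clock * boost_mhz * 1e6 / 1e12


if __name__ == "__main__":
    # Core counts and boost clocks as quoted in this review.
    print(f"RTX 3080:    {peak_fp32_tflops(8704, 1710):.2f} TFLOPS")
    print(f"RTX 3080 Ti: {peak_fp32_tflops(10240, 1665):.2f} TFLOPS")
```

This is also why the 3080 Ti’s lower boost clock doesn’t hurt it on paper: the extra cores more than make up for the lost megahertz.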

What is a Tensor Core?

Here’s another example of “but why do I need it?” within GPU architecture: the Tensor Core. This relatively new technology from NVIDIA had seen wider use in high performance supercomputing or data centers before finally arriving on consumer-focused cards in the latter and more powerful 20X0 series cards. Now, with the RTX 30-series we have the third generation of these processors, and more of them (e.g. the 2080 Ti had 240 second-gen cores, the 3080 has 272 third-gen Tensor cores, and the 3080 Ti provides 320, compared to 328 for the 3090). So, what do they do?

Tensor cores are used for AI-driven learning, and we see this more directly applied to gaming via DLSS, or Deep Learning Super Sampling. More than marketing buzzwords, DLSS can take a few frames, analyze them, and then generate a “perfect frame” by interpreting the results using AI, with help from supercomputers back at NVIDIA HQ. The second pass through the process uses what it learned about aliasing in the first pass and then “fills in” what it believes to be more accurate pixels, resulting in a cleaner image that can be rendered even faster. Amazingly, the results can actually be cleaner than the original image, especially at lower resolutions, and having less to process means more frames can be rendered using the power saved. It’s literally free frames. DLSS 3.0 is still swirling in the wind, but very soon we may see this applied more broadly than it is today. We’ll have to keep our eyes peeled for that one, but when it does release, these Tensor cores are the components to do the work. That’s all fancy, but wouldn’t you rather see it in action? Here’s a quick snippet from some more recent flagship titles that does exactly that.

DLSS 2.0 was introduced in March of 2020, and it took the principles of DLSS and set out to resolve the complaints users and developers had, while improving the speed. To that end, they reengineered the Tensor core pipeline, effectively doubling the speed while still maintaining the image quality of the original, or even sharpening it to the point where it looks better than the source! For the user community, NVIDIA exposed the controls to DLSS, providing three modes to choose from: Performance for maximum framerate, Balanced, and Quality, which looks to deliver the best final image quality. Developers saw the biggest boon with DLSS 2.0 as they were given a universal AI training network. Instead of having to train each game and each frame, DLSS 2.0 uses a library of non-game-specific parameters to improve graphics and performance, meaning that the technology could be applied to any game should the developer choose to do so. Game development cycles being what they are, and with the tech only hitting the street earlier this year, it’s likely we’ll see more use of DLSS 2.0 during the holiday blitz, and even more after the turn of the year.
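To see where the extra frames come from, it helps to look at how many pixels the card actually renders in each mode before DLSS scales the image up. The per-axis scale factors below are commonly cited values, not figures from this review or guaranteed by NVIDIA, and individual games may use different ones; treat them as assumptions.

```python
# Per-axis render scale for each DLSS 2.0 mode.
# Commonly cited values, used here as illustrative assumptions.
DLSS_SCALE = {"Quality": 2 / 3, "Balanced": 0.58, "Performance": 0.5}


def render_resolution(out_width, out_height, mode):
    """Internal resolution rendered before upscaling to the output size."""
    scale = DLSS_SCALE[mode]
    return round(out_width * scale), round(out_height * scale)


def pixel_fraction(out_width, out_height, mode):
    """Share of the output pixel count that is actually rendered."""
    width, height = render_resolution(out_width, out_height, mode)
    return (width * height) / (out_width * out_height)


if __name__ == "__main__":
    for mode in DLSS_SCALE:
        w, h = render_resolution(3840, 2160, mode)
        share = pixel_fraction(3840, 2160, mode)
        print(f"{mode}: renders {w}x{h} ({share:.0%} of the 4K pixels)")
```

Under these assumptions, Performance mode at 4K renders a 1080p image, a quarter of the pixels, and leaves the Tensor cores to reconstruct the rest.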

Frametime vs. Framerate:

It’s important to understand that these two terms are not in any way interchangeable. Framerate tells you how many frames are rendered each second, and frametime tells you how long it took to render each frame. While there is a great deal of focus on framerate and the resolution at which it’s rendered, frametime should likely receive equal if not greater attention. When frames take too long to render they can be dropped or fall out of sync, wreaking all sorts of havoc including stuttering. If frame 1 takes 17ms, but frame 2 takes 240ms, that’s going to make for a jittery result. Realize that both are important, and don’t become myopically focused on just framerate, as it only tells half the story. Even if a device is capable of delivering 144fps, if it does so in an uneven fashion, you’ll see a choppy output.
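A quick sketch shows how an average framerate can hide a bad frametime. It compares a steady run of 17ms frames against the same run with a single 240ms hitch, borrowing the numbers from the example above. The 60-frame sample size and the function name are arbitrary choices for illustration.

```python
def summarize(frametimes_ms):
    """Average framerate plus the single worst frame in a capture."""
    total_ms = sum(frametimes_ms)
    worst_ms = max(frametimes_ms)
    return {
        "avg_fps": 1000 * len(frametimes_ms) / total_ms,
        "worst_ms": worst_ms,
        # Framerate you would get if every frame were as slow as the worst.
        "worst_fps": 1000 / worst_ms,
    }


if __name__ == "__main__":
    smooth = [17] * 60           # Every frame takes 17ms.
    hitchy = [17] * 59 + [240]   # Same run with one 240ms stall.
    for name, run in (("smooth", smooth), ("hitchy", hitchy)):
        stats = summarize(run)
        print(f"{name}: {stats['avg_fps']:.1f} fps average, "
              f"worst frame {stats['worst_ms']}ms")
```

The hitchy run still posts a respectable average, yet one frame sat on screen for nearly a quarter of a second, and that stall is the part you actually feel.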

Analysis:

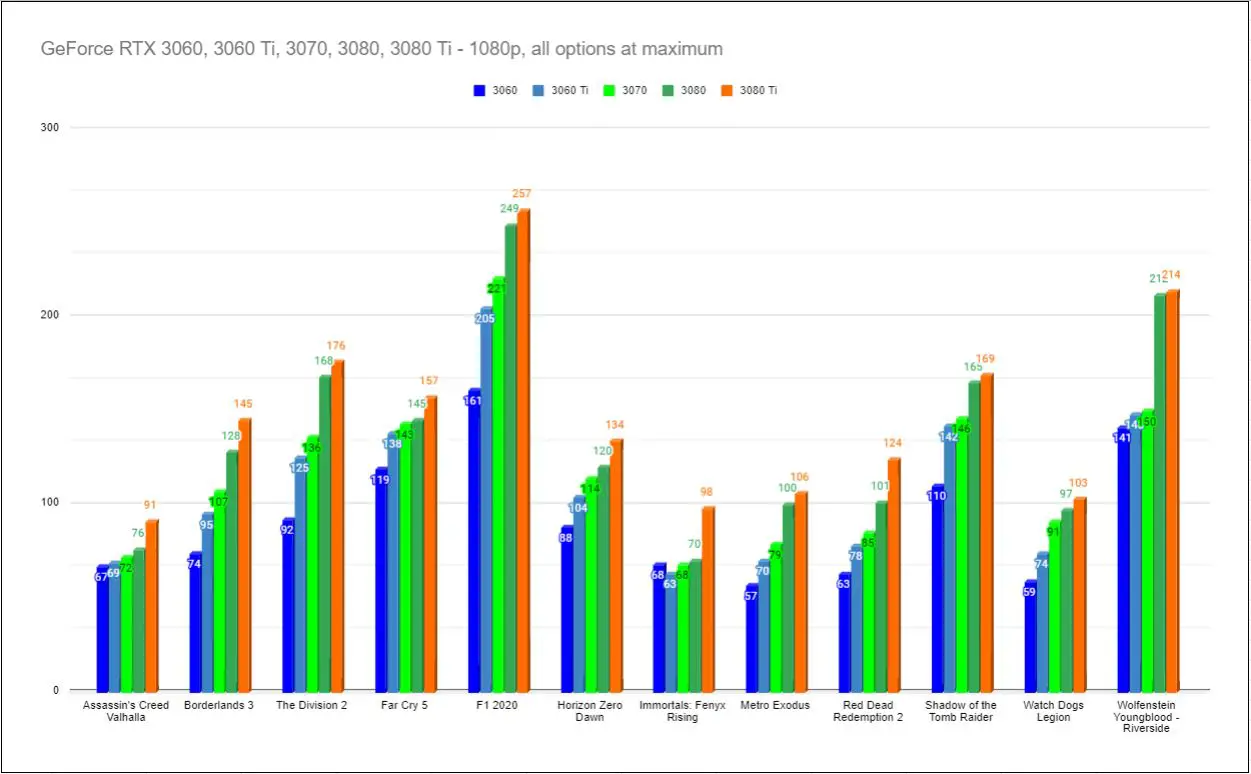

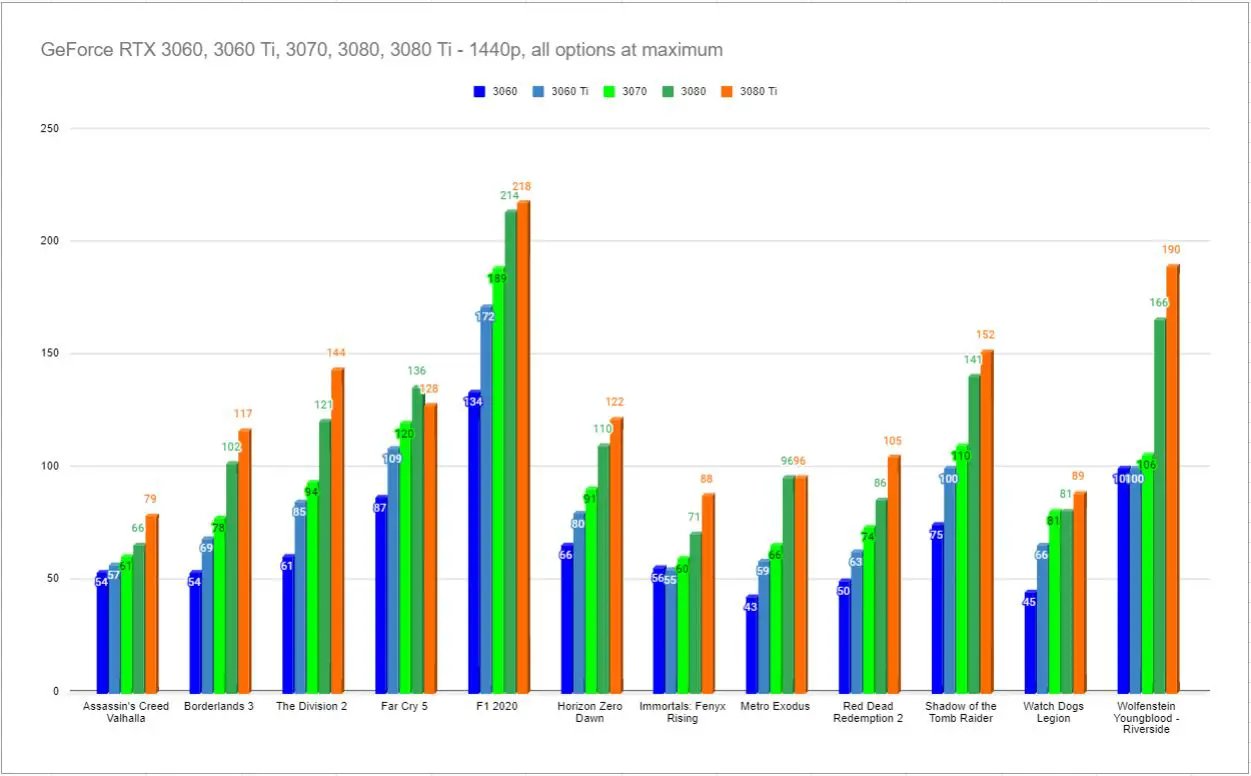

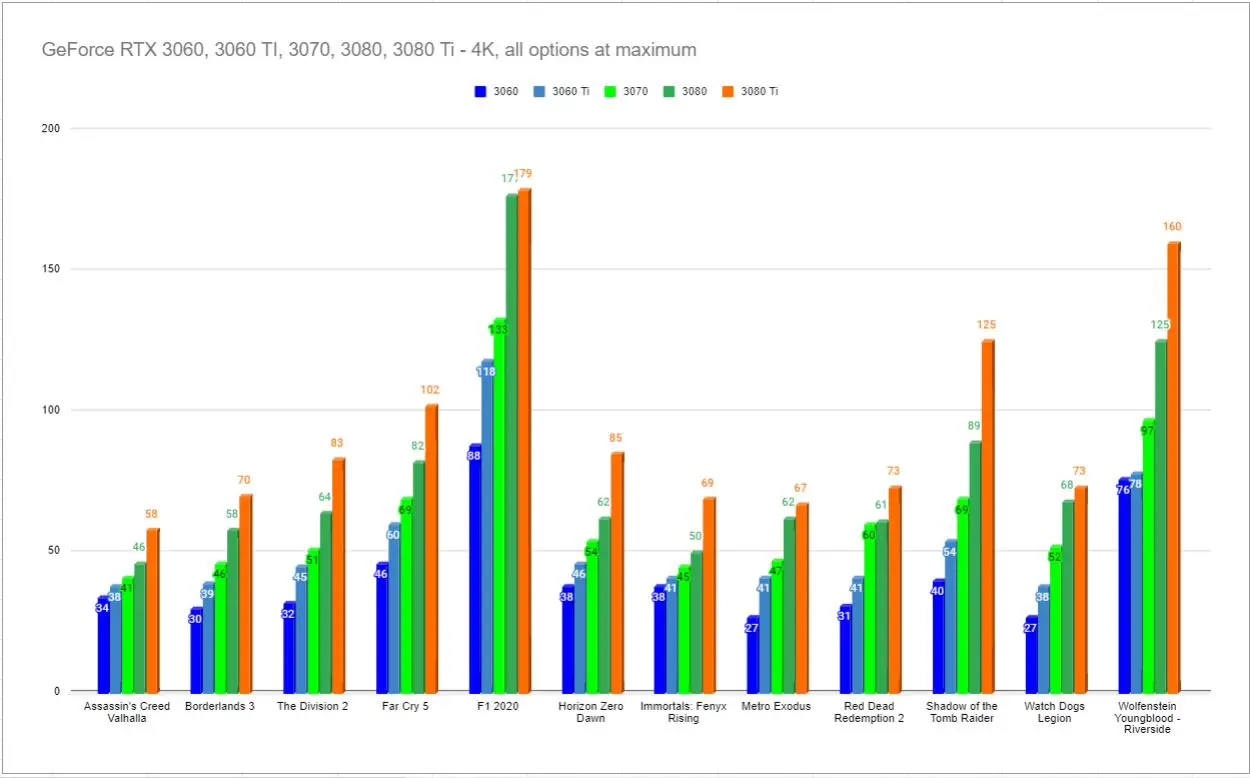

There are a lot of interesting bits of information to glean from these benchmarks. Sure, the standouts are the big number jumps, but the results also indicate something more subtle. Games that take advantage of new lighting like RTX instead of performing complex calculations for lighting and shadows see a sizable boost over their contemporaries without that tech. By way of example, Assassin’s Creed Odyssey is notoriously CPU-bound, so throwing more hardware at it will only go so far, but with the 3080 Ti we see a rather significant jump. It seems there was some additional slack to be soaked up with the additional headroom of this new card.

Also interesting in this list is the performance of Red Dead Redemption 2. As you saw in our in-depth look at the PC version, Rockstar’s wild west adventure will bring just about any video card to its knees. Two years of patches and updates have made 4K/60 possible, with the RTX 3080 putting in 61 frames per second, and the 3080 Ti pushing that to a very comfortable 73. Push every slider to the right and enjoy the game as Rockstar intended.
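For anyone who wants to turn those Red Dead Redemption 2 numbers into a percentage, the arithmetic is simple. The function name here is just an illustrative choice.

```python
def uplift_percent(old_fps, new_fps):
    """Relative framerate gain of the new card over the old, in percent."""
    return (new_fps - old_fps) / old_fps * 100


if __name__ == "__main__":
    # RTX 3080 (61 fps) versus RTX 3080 Ti (73 fps) at 4K.
    print(f"{uplift_percent(61, 73):.1f}% faster")
```

That works out to just under a 20% gain in this title.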

Shadow of the Tomb Raider was one of the first games to take advantage of RTX lighting, and as such has had the most time to refine. Not only is 4K/60 at maximum quality possible, you can hit those numbers with frames to spare on cards with RTX. In fact, we’ve reached a silly 125 frames per second at 4K resolution, pushing into framerates normally reserved for shooters.

Let’s take a second and talk about bells and whistles. Keoken Interactive delivered a stress-inducing lunar adventure with their game Deliver Us the Moon. It’s a good looking game, to be sure, but it is easily the best showcase of why RTX is, without being in any way hyperbolic, a game changer. Watch this video:

With Unreal Engine 5 and Unity both supporting RTX, and over 130 games sporting the tech as of this writing, there’s no doubt that it is rapidly becoming the norm. Combined with DLSS, it’s giving us graphical fidelity like we’ve never seen before, driving immersion with more realistic lighting and reflections. This lets developers focus harder on other areas, letting the tech do the heavy lifting.

Speaking of heavy lifting, we need to talk about processors. If you have anything less than a 10th generation processor, you are likely going to see some level of bottleneck. Put simply, the RTX 3080 Ti (and most of the 30-series cards, frankly) is capable of handling more transactions than even the most powerful chips from AMD and Intel. Other devices in your system are also a factor, such as how fast you can transfer information to and from your hard drive. We’ll discuss how NVIDIA is trying to solve this dilemma a little further into this review, but in short, NVIDIA is once again ahead of the power curve, and processors, memory, and even the PCI bus need to catch up before all this power gets fully utilized, much less taxed.

Cooling and noise:

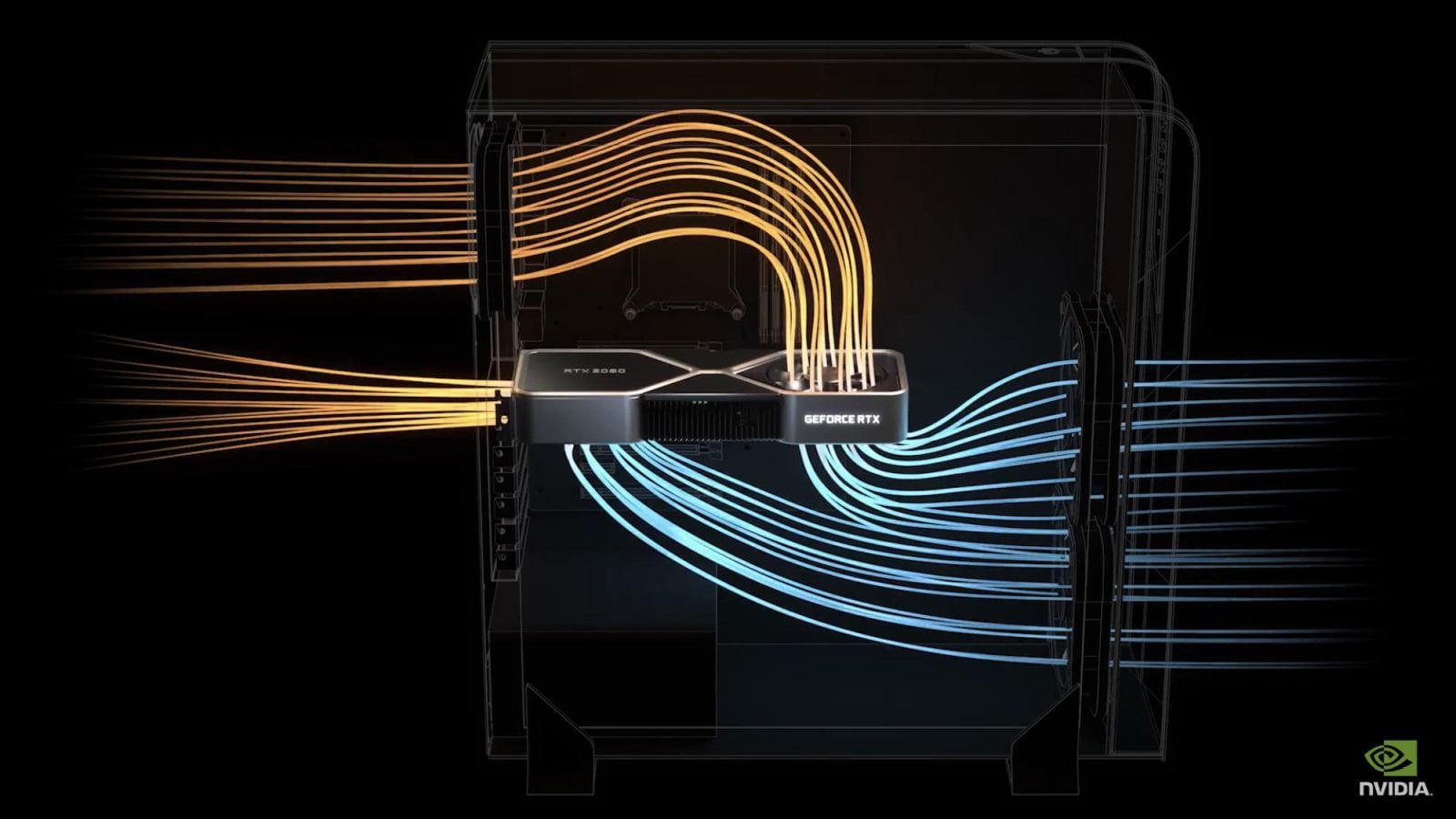

It’s one thing to deliver blisteringly fast frame rates and eye-popping resolutions, but if you do it while also blowing out my eardrums with a high pitched whine as your Harrier Jet-esque fans spin up to cool, we’ve got a problem. Thankfully NVIDIA realized this, as well as the need to cool a staggering 28 billion transistors (the 2080 Ti had 18.9 billion), and they redesigned the 30-series cards to match. The 3080 Ti, like previous cards, has a solid body construction with a new airflow system that displaces heat more efficiently, and somehow does it while being 10dB quieter than its predecessor. I’d normally point to that being a marketing claim, but I measured it myself.

Normal airflow through a case with a standard setup starts with air intake at the front, pushing it over the hard drives, and hopefully out the back. I can tell you that the case I picked up from Coolermaster was incorrectly assembled on arrival with the 200mm fan on top blowing air back into the case, so it’s always best to check the arrows on the side to make sure your case follows this path. The 2080 Ti’s design was that of a solid board that ran the length of the card with fans on the bottom pushing heat away. Unfortunately this requires that it circulates back into the path of the air flow, having to billow back up and then travel out the top and rear case fans.

The RTX 3080 Ti’s airflow system is a thing of beauty. Since the card has been shrunk effectively in half thanks to the 8nm manufacturing process, this left a lot of real estate for a larger cooling block and fins, as well as a fan system to match. The fan on the top of the card (once it is mounted in the case) instead draws air up from the bottom, pulling it through the hybrid vapor chamber heat pipe (as there is no longer a long circuit board to obstruct the airflow), and pushes it directly into the path of the normal case airflow. The second fan, located on the bottom of the card and closest to the mounting bracket at the back of the case, draws air in as well, but instead of passing it through the card, it pushes the excess heat out of the rear of the card through a dedicated heat pipe vent. Observe:

You can see the results while running benchmarks. The card never pushed above 79 C, just like the RTX 3080, no matter how hard I pushed it or at what resolution I ran it, remaining in the low to mid 70s nearly all the time, and at an amazing 35 C when idle. More than once I’ve peeked into my case and seen that the fans for the card aren’t even spinning. This is one amazing piece of engineering.

Gaming without compromise

The PlayStation 5 and Xbox Series X have both focused on delivering 4K, and developers have dropped 120 fps modes into their games on a few occasions, but there are very few games able to do both. Upgrades to already-released titles bear this out, but there’s an underlying issue: HDMI 2.1, your TV, and your receiver. Much like the issue with processors, RAM, and motherboards, all of your components need to match to maximize what your GPU can produce. On a console all of that is self-contained and set in stone, but the problem remains. While both consoles provide support for HDMI 2.1, the newest standard which is capable of delivering up to 8K output, your TV and receiver also have to support it, and very few do. In reality, you likely have a 4K TV which supports 60Hz, if even that. This brings me to the GeForce RTX 3080 Ti.

NVIDIA’s newest series of cards need hardware to match. They support HDMI 2.1, 8K outputs, high refresh rate and high resolution monitors, and they do it while having RTX and DLSS enabled. Seeing DOOM Eternal in 8K is something to behold, but most of us are a long way away from realizing that at home. What we can do, however, is enjoy 4K gaming at 60+ (often WAY past 60) without compromise. Sure, the newest consoles will advertise 4K/60 or even 4K/120, but can they do it with all the sliders to the right? Not so far.

For what it’s worth, seeing Doom Eternal run at 150+fps at 4K, RTX, DLSS, and maxing out the refresh on my 144Hz monitors is the way gaming should be. It’s not just a carefully-crafted tech demo — it’s real, and it’s here right now. Frames make games, and it’s never been more true than in id’s hell shooter.

There is something to note about the GeForce RTX 3080 Ti — the power requirements. All of this insane capability doesn’t come without cost — you’ll need a 750W PSU to bring this beast to life. If you’ve been plugging along on that 550W PSU for years, now’s the time to put some oomph behind your components.

I had previously complained about the loss of a dedicated VR port in the 30-series graphics cards from NVIDIA, but that has all but evaporated. The HTC Vive Pro 2 needs a DisplayPort to take full advantage of the 5K display, and the Oculus Quest 2 is fully wireless at this point. Ultimately this was a nice to have that has turned into something all but unnecessary for the latest hardware.

Price to Performance:

Make no bones about it, cards like the Titan, the 2080 Ti, and the 3090 are expensive. They represent the approach of asking not whether they should, but simply whether they could. I love that kind of crazy as it really pushes the envelope of technology and innovation. Rather than sit back and enjoy the success of the 3080, NVIDIA found a sweet spot between the RTX 3080 and the RTX 3090 with the 3080 Ti. This newest card skews far closer to the latter than the former, and it doesn’t require three slots, a beefy power supply, and a second mortgage to use.

The RTX 3080 easily bested anything else on the market at launch, and continues that trend today. Now, a new king is crowned, with the RTX 3080 Ti offering up improvements of 10-20% or better across the board. We’ve seen games like Days Gone, Resident Evil Village, Horizon Zero Dawn, Ghostrunner, Control, Microsoft Flight Simulator, and Half-Life Alyx push the envelope on what’s possible, and having hardware that can not only meet that challenge but exceed it lets developers dream of what’s next for gaming. Sony and Microsoft have declared that mechanical hard drives are a thing of the past, Nintendo’s new console will have 4K output, and PC gamers are demanding their games be more lifelike than ever before. NVIDIA has met this challenge with pixel-pushing power that was previously impossible without occupying three slots in your machine. This is what peak performance looks like.