It’s taken NVIDIA a little bit of time to dial in the price part of “price to performance”, or at least that’s the charge some critics have levied against big green over the course of the 4000 series. As we launch into 2024, we’ve seen the announcement of, and now the release of, three new variants of the RTX 4070 and 4080: the RTX 4070 Super, RTX 4070 Ti Super, and now the RTX 4080 Super. These cards aren’t mere additions to the lineup; in some cases they’re a full replacement for the card whose name they share. Today we take a look at the RTX 4080 Super Founders Edition. As always, we’ll spend a bit of time looking at the specs and what they do, as well as benchmarks, and then finish up with pricing. Let’s do this!

While I have an appreciation for third-party cards as competition breeds innovation, I’m always happy to slot in a card from NVIDIA as I know it’s going to be silent in my case. As I type this I don’t hear the 4080 Super, as it has gone to sleep. The pass-thru reference design from NVIDIA is unrivaled in its ability to cool the card (as it should be, given how much time and money they spent on it), but it’s also the only set of cards I’ve reviewed that has the good sense to power down the fans when the system isn’t doing anything that taxes it. If you’ve built a system that doubles as your work machine, or if you perhaps have your rig in your bedroom, you’ll appreciate that the card will go to sleep when you do.

Acoustics aside, the most obvious comparison we’ll be looking at here is between the RTX 4080 and the RTX 4080 Super. With the new pricing, however, it’s also very much within wallet-nudge distance of the RTX 4070 Ti Super, so we’ll drop that in for fun. As the 3080 Ti continues to lead on Steam’s hardware page, we’ll also drop that in here. It’s worth noting, however, that the 3080 Ti is limited to rasterization, ray tracing, and DLSS 1 and 2 (DLSS 3.5’s Ray Reconstruction does work on all RTX cards). Only the 4000 series supports DLSS 3 Frame Generation and AV1 encoding, along with a whole lot more. Keep in mind that reviews are a point in time, and driver maturity and game patches are likely to push numbers higher. As such, focus less on the number than the delta between them. Or don’t – I’m not your dad.

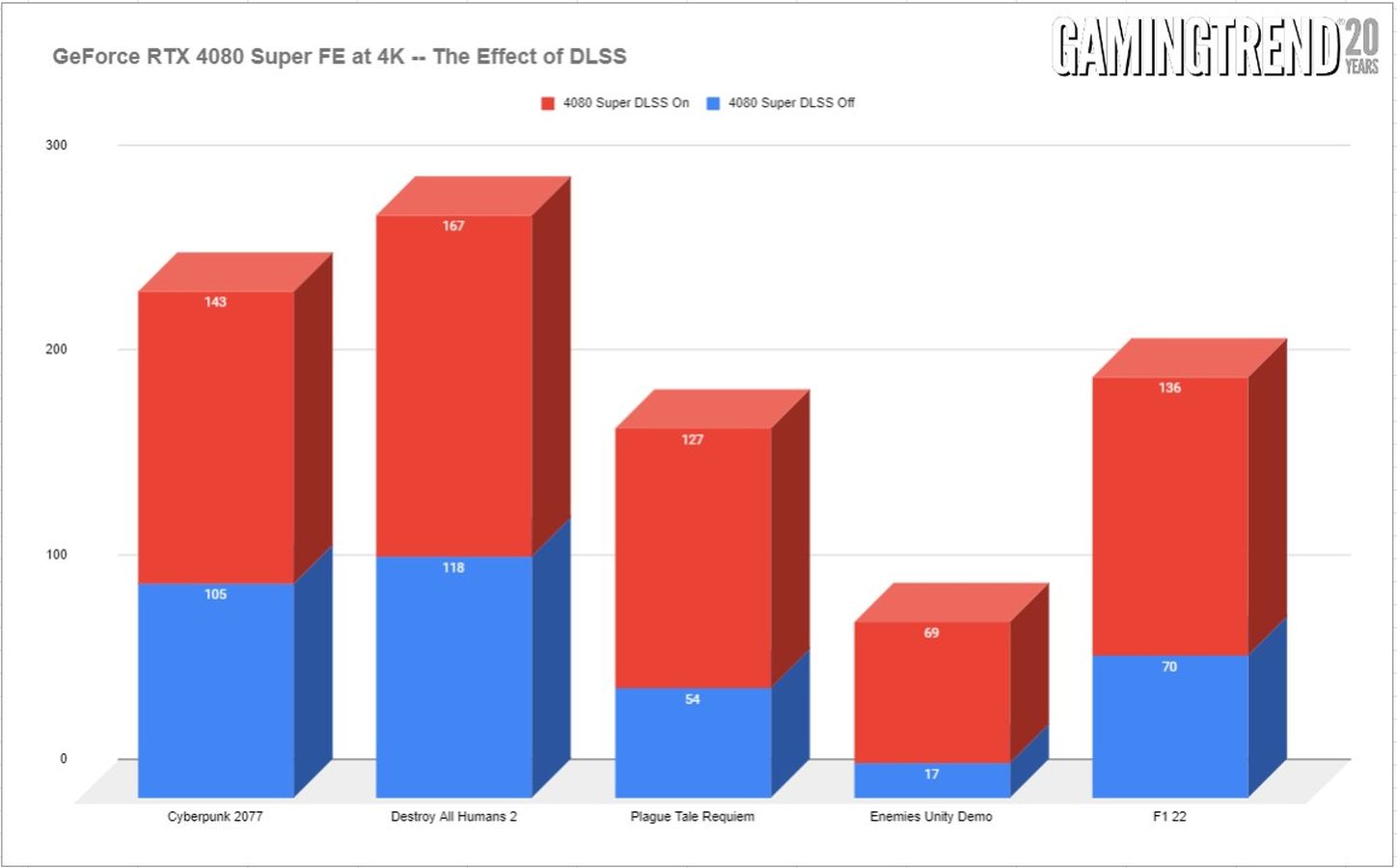

In our early DLSS 3 reviews we focused exclusively on DLSS 2, DLSS 3, and rasterized performance. Now there’s a fresh contender in the space: DLSS 3.5, working alongside Frame Generation. All 4000 series cards are capable, and more games are taking full advantage. Moreover, the minor artifacting issues are becoming less frequent and, thankfully, less severe. While I’ve long been a proponent of the tech, recent advances are breathing a great deal of life into resolutions and framerates that would otherwise be impossible to achieve. In some games that means framerates in the 300s, so you can push high-refresh, competition-focused monitors to their limit, and not just at 1080p. The additional power in the 4080 Super also means you can push up to 4K and stay above 100fps most of the time.

Let’s look at the testing rig:

Gigabyte Z790 AORUS MASTER

Intel 13900K CPU

32GB DDR5 @ 7300 MT/s

M.2 NVMe @ 7700 MB/s

NVIDIA GeForce RTX 4080 Super Founders Edition at stock speeds

A general complaint about the mid-tier cards is that some are well-equipped with VRAM while others are lacking a bit. At the upper tier, this is a thing of the past. The RTX 4090 shipped with an eyebrow-raising 24GB of GDDR6X. The new RTX 4080 Super sticks with the 16GB of GDDR6X that the 4080 shipped with, on the same 256-bit memory bus. That’s up from the 192-bit bus on the RTX 4070, 4070 Super, and 4070 Ti; the 4070 Ti Super shares the 256-bit bus and 16GB.

Your VRAM pool is necessary for a great many features and functions, and none more so than higher resolutions. Quality settings push utilization further. Running out of VRAM creates all sorts of bottlenecks, because your system will then spill over into system RAM or, worse, a swap file on your local storage (NVMe, hopefully), slowing things further still. So how much VRAM do we really need? The only game currently threatening to tip the scales on the 4080 Super is the Resident Evil 4 remake. Set everything to Ultra, toggle texture quality to “High (8GB)”, and run the game at 8K, and it will warn you that you are at 14.44/14.92 GB of Graphics Memory. No idea why it doesn’t detect the full 16GB, but the game looks absolutely stunning maxed out this way, and the card performs beautifully, as you’ll see in the benchmarks below. More VRAM headroom and better optimization are always welcome, but it’s great to see 16GB become the norm for high-end cards as we close out the 4000 generation and look ahead to the 5000 series. There’s no telling what’s on that horizon.
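
To put rough numbers on why resolution eats VRAM, here’s a back-of-the-envelope sketch in Python. It assumes a hypothetical renderer that keeps six full-resolution render targets at 8 bytes per pixel, which is the size of a half-float RGBA target. Real engines vary widely, and textures usually take up far more of the budget than render targets do, so treat this as an illustration of scaling rather than a VRAM predictor.

```python
# Back-of-the-envelope render-target memory estimate.
# Assumption: a hypothetical renderer with a fixed number of full-resolution
# targets at a fixed bytes-per-pixel. Textures and other assets are not counted.

RESOLUTIONS = {
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}

def target_mib(width: int, height: int, bytes_per_pixel: int = 8) -> float:
    """Size of one render target in MiB (8 bytes/pixel ~ an RGBA16F target)."""
    return width * height * bytes_per_pixel / (1024 ** 2)

def render_targets_mib(width: int, height: int, num_targets: int = 6,
                       bytes_per_pixel: int = 8) -> float:
    """Total size of `num_targets` full-resolution targets, in MiB."""
    return num_targets * target_mib(width, height, bytes_per_pixel)

if __name__ == "__main__":
    for name, (w, h) in RESOLUTIONS.items():
        print(f"{name}: {render_targets_mib(w, h):,.1f} MiB")
```

8K has four times the pixels of 4K, so every full-resolution buffer grows fourfold. That is a big part of why 8K is where even 16GB starts to feel snug.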

We don’t lean on synthetic benchmarks, as they aren’t a great way to tell you how games will perform. That said, the RTX 4080 Super tends to be around 4-6% faster than the RTX 4080 in them. Additional cores and TMUs, plus a slightly faster base clock, give it a small bump in synthetic tests like Cinebench and Blender renders. Personally, I find watching those benchmarks to be worse than watching paint dry, so let’s talk about what hardware is under the hood, and then get to the games.
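
Since we keep talking in percentages, here’s the arithmetic spelled out as a tiny Python helper. The core counts are the ones quoted in this review. The fps figures in the second call are made up purely to show the calculation and are not benchmark results.

```python
def percent_delta(new: float, old: float) -> float:
    """Percentage change going from `old` to `new` (positive = faster/more)."""
    if old == 0:
        raise ValueError("baseline must be non-zero")
    return (new - old) / old * 100.0

# CUDA core counts quoted in this review: RTX 4080 Super vs RTX 4080.
core_uplift = percent_delta(10240, 9728)   # about 5.3%

# Hypothetical framerates (not measured), just to show usage.
fps_uplift = percent_delta(105.0, 100.0)   # 5.0%

print(f"cores: +{core_uplift:.1f}%  fps: +{fps_uplift:.1f}%")
```

A roughly 5% jump in shader cores sits comfortably inside the 4-6% synthetic uplift. Reading the delta rather than the raw number is what makes that comparison easy.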

CUDA Cores:

Back in 2007, NVIDIA introduced CUDA, or Compute Unified Device Architecture, a parallel computing platform; the GPU processing units that run it are what we now call CUDA cores. In the simplest of terms, these CUDA cores process graphics (and other parallel workloads) en masse. Work is handed to SMs, or Streaming Multiprocessors, which schedule it across their CUDA cores in parallel, fed by cache memory. As the CUDA cores receive this mountain of data, they divide the work amongst themselves, each processing its own share of the instructions. The RTX 2080 Ti, as powerful as it was, had just 4,352 cores. The RTX 3080 Ti upped the ante with 10,240 CUDA cores, just 256 shy of the behemoth 3090’s 10,496. The NVIDIA GeForce RTX 4090 ships with a whopping 16,384 cores. Where does the RTX 4080 Super land? At 10,240, a rather large jump up from the RTX 4070 Ti’s 7,680, and a bit higher than the original 4080’s 9,728. It’s not apples to apples, so don’t get too invested in comparing the cores of the 3080 Ti and the 4080 Super (both list exactly 10,240). There is a generational difference to account for as well, so these numbers don’t quite tell the whole story. We’ll circle back to this.

Since the launch of the 4000 series, we’ve seen time and again that these newest iterations of the hardware deliver staggering framerate improvements when combined with the latest DLSS technology. In some cases, NVIDIA suggests the framerate can double over the previous gen, and we saw that very thing in our previous reviews. The RTX 4080 Super sits just below the RTX 4090 at the top of the consumer-focused food chain. As such, it has enough horsepower to push well past 60fps in current games and easily into the triple digits for rasterized gaming at 1440p or 4K. In some cases it gets there even at 8K, if you factor in new technologies like Frame Generation (and you very much should).

What is a Tensor Core?

Here’s another example of “but why do I need it?” within GPU architecture: the Tensor Core. This technology from NVIDIA saw use in high-performance supercomputing and data centers before finally arriving on consumer-focused cards with the RTX 2000 series. Now, with the RTX 40-series, we have the fourth generation of these processors. For frame of reference, the 2080 Ti had 544 second-gen Tensor Cores, while the 3080 Ti provided 320 third-gen cores, with the 3090 shipping with 328. The RTX 4090 shipped with 512 Tensor Cores, and the RTX 4080 has 304. The RTX 4070 Super recently shipped with 224, and the RTX 4070 Ti Super ships with 264. Now the RTX 4080 Super hits the streets with 320, a tidy bump over its 4080 predecessor. Given the complexity and advancements inside the new generation of Tensor Cores, you can’t quite do a 1:1 number battle with previous-generation core counts. Put a pin in that thought and let’s look at what a Tensor Core does.
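
One handy way to sanity-check these spec sheets: on Ada Lovelace consumer GPUs, each streaming multiprocessor carries 128 CUDA cores, 4 Tensor Cores, and 1 RT core. Every headline number therefore falls out of the SM count. The sketch below assumes those per-SM ratios and the SM counts listed in its table, taken from published specs. It’s a cross-check, not an official spec sheet.

```python
from dataclasses import dataclass

# Per-SM unit counts assumed for Ada Lovelace (RTX 40-series) consumer GPUs.
CUDA_PER_SM = 128
TENSOR_PER_SM = 4
RT_PER_SM = 1

# SM counts per card (assumed from published specs).
SM_COUNTS = {
    "RTX 4090": 128,
    "RTX 4080 Super": 80,
    "RTX 4080": 76,
    "RTX 4070 Ti Super": 66,
    "RTX 4070 Ti": 60,
    "RTX 4070 Super": 56,
}

@dataclass(frozen=True)
class UnitCounts:
    cuda: int
    tensor: int
    rt: int

def units_from_sms(sms: int) -> UnitCounts:
    """Derive CUDA, Tensor, and RT core counts from an Ada SM count."""
    return UnitCounts(sms * CUDA_PER_SM, sms * TENSOR_PER_SM, sms * RT_PER_SM)

if __name__ == "__main__":
    for card, sms in SM_COUNTS.items():
        u = units_from_sms(sms)
        print(f"{card}: {u.cuda} CUDA, {u.tensor} Tensor, {u.rt} RT")
```

The derived Tensor Core figures line up with the 304 (4080), 320 (4080 Super), 224 (4070 Super), and 264 (4070 Ti Super) quoted above. The 4080 Super’s 80 SMs also account for its 10,240 CUDA cores.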

Put simply, Tensor Cores are your deep learning / AI / neural net processors, and they are the power behind technologies like DLSS. The RTX 4000 series brings with it DLSS 3 (and now 3.5!). It’s a generational leap over what we saw with the 3000 series cards and largely responsible for the massive framerate uplift claims NVIDIA has made. We’ve seen time and again the improvements DLSS 3 and 3.5 can make in current games, as well as Frame Generation when a user enables it and the game supports it, and that adoption is ramping quickly. In some instances a game goes from completely unplayable, with framerates in the gutter, to 4K/60 or better thanks to Frame Generation. I’ve called it magic more than once, and the results arguably are exactly that. That said, not every game supports DLSS 3 or 3.5 yet, but that’s changing rapidly.

DLSS 3, 3.5, and beyond

One of the things DLSS 1 and 2 suffered from was adoption. DLSS 1.0 required the neural network to be trained on each individual game. DLSS 2.0 switched to a generalized network, but studios still had to go out of their way to integrate it game by game. The results were fantastic, with 2.0 bringing images that could actually look cleaner than native resolution. Still, adoption at the game level was needed. Some companies really embraced it, and we got beautiful visuals from games like Metro Exodus, Shadow of the Tomb Raider, Control, Deathloop, Ghostwire: Tokyo, Dying Light 2: Stay Human, Far Cry 6, and Cyberpunk 2077. Say what you will about the last game; visuals weren’t the problem. Still, without engine-level adoption, growth would be slow. With DLSS 3, engine-level adoption is precisely what NVIDIA pursued.

DLSS 3 Frame Generation is exclusive to the 4000 series cards. Prior generations fall back to DLSS 2.0, because Frame Generation depends on hardware found only on 4000-series cards: the 4th-Gen Tensor Cores and the new-generation Optical Flow Accelerator. While that may be a bummer to hear, there is light at the end of the tunnel. DLSS 3 is now supported at the engine level by Epic’s Unreal Engine 4 and 5, as well as by Unity, covering the vast majority of games being released for the foreseeable future. I don’t know what additional work has to be done by developers, but having it available at a deeper level has greased the skids.

To date there are over 500 games that utilize DLSS 2.0, and just over 100 already supporting DLSS 3. Another 30 or so have been announced with support coming shortly or at launch, including Dragon’s Dogma 2, Skull and Bones, Vampire: The Masquerade – Bloodlines 2, STALKER 2: Heart of Chornobyl, Dying: 1983, and more. Additionally, EA has recently begun supporting DLSS in its Frostbite Engine, so the Madden series, Battlefield series, Need for Speed Unbound, Dead Space series, and others will all benefit from native support. Unity now supports DLSS, which means improvements for games like Project Ferocious, Low-Fi, Singularity, Faded, Sand, Replaced, Hollow Cocoon, and The Quinfall. Unreal Engine 4 and 5 support covers the next Witcher game, Chrono Odyssey, Banishers: Ghosts of New Eden, Nightingale, Indika, Tekken 8, Palworld, Outcast: A New Beginning, and hundreds of other unannounced games across these engines. While DLSS has had slow adoption in the past, future support is practically a vertical climb.

If you are unfamiliar with DLSS, it stands for Deep Learning Super Sampling, and it does what the name suggests. A deep learning model takes a frame rendered at a lower resolution, analyzes it, and supersamples it (that is to say, raises the resolution) while sharpening it, which is much faster than rendering every pixel natively. DLSS 2.0 does this by combining the current low-resolution frame with motion vectors and data from previous frames, repeating the process every frame the entire time you are playing. DLSS 3 keeps that upscaling step and adds Optical Multi Frame Generation, which creates entirely new frames from the rendered ones around them. This means it is no longer just adding more pixels. It constructs whole new AI frames in between the original frames, making the output both faster and smoother.
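
To make the “render low, output high” idea concrete, here’s a small Python sketch of the internal render resolution DLSS Super Resolution works from at a 4K output. The per-axis scale factors are the commonly cited defaults for each quality mode: Quality about 0.667, Balanced 0.58, Performance 0.5, and Ultra Performance about 0.333. Treat them as assumptions, since individual games can use different values.

```python
from typing import Tuple

# Commonly cited per-axis render scales for DLSS Super Resolution modes.
# Assumption: default values only; individual games may differ.
DLSS_SCALES = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra Performance": 1 / 3,
}

def internal_resolution(out_w: int, out_h: int, scale: float) -> Tuple[int, int]:
    """Internal render resolution for a given output size and per-axis scale."""
    return round(out_w * scale), round(out_h * scale)

def rendered_pixel_share(scale: float) -> float:
    """Fraction of output pixels actually rendered (scale applies to both axes)."""
    return scale * scale

if __name__ == "__main__":
    for mode, s in DLSS_SCALES.items():
        w, h = internal_resolution(3840, 2160, s)
        print(f"{mode}: {w}x{h} ({rendered_pixel_share(s):.0%} of 4K pixels)")
```

Because the scale applies to both axes, Performance mode renders only a quarter of the output pixels, and the network reconstructs the rest.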

A peek under the hood of DLSS 3 in Performance mode shows the AI reconstructing ¾ of the pixels of the first frame and generating the entirety of the second frame. It then reconstructs ¾ of the third frame, generates the entirety of the fourth, and so on. DLSS 3 combines the rendered frames with motion data and the optical flow field from the Optical Flow Accelerator. From that, it works out where objects in the scene are and where they are going, and generates an intermediate frame between two rendered ones. This approach produces 7/8ths of the displayed pixels while rendering only 1/8th of them the traditional way, and it does so in a way that is almost undetectable to the human eye. Lights, shadows, particle effects, reflections, light bounce: all of it is handled this way, resulting in the staggering improvements I’ll be showing you in the benchmarking section of this review.
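
The 7/8 figure is easy to reproduce with exact fractions. The sketch assumes DLSS Super Resolution in Performance mode, meaning half resolution on each axis and a quarter of the pixels rendered. It also assumes Frame Generation inserts one generated frame after every rendered one. Change either assumption and the share shifts.

```python
from fractions import Fraction

def traditionally_rendered_share(axis_scale: Fraction, generated_per_rendered: int) -> Fraction:
    """Share of displayed pixels that came from the normal render pipeline.

    axis_scale: per-axis render scale of each rendered frame (1/2 = Performance mode).
    generated_per_rendered: AI frames shown per rendered frame (1 with Frame Generation).
    """
    rendered_pixels = axis_scale * axis_scale        # share of a frame actually rendered
    displayed_frames = 1 + generated_per_rendered    # one rendered frame plus generated ones
    return rendered_pixels / displayed_frames

rendered = traditionally_rendered_share(Fraction(1, 2), 1)
print(f"rendered: {rendered}, AI-reconstructed or generated: {1 - rendered}")
# prints "rendered: 1/8, AI-reconstructed or generated: 7/8"
```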

The launches of DLSS 1, 2, and 3 all carried some image quality oddities. Artifacting and ghosting, shimmering around edges and UI elements, and other visual hiccups hit some games harder than others. More recent patches have fixed many of these issues, though flickering UI elements can still appear when Frame Generation is enabled. This mostly occurs when a text box or UI element is in motion, such as a tag above a racer’s head or subtitles above a character. I saw this fairly extensively in Atomic Heart, though it was fixed in Cyberpunk 2077. Your mileage may vary, clearly, but it’s getting quite a bit better. Still, when you ride a high-speed elevator down in Star Wars Jedi: Survivor, you’ll likely see an odd trailing shadow above your character’s head. Thankfully this is becoming less of an issue through game patches and driver updates, though most of the fix relies on the developer more than NVIDIA. You gotta patch your games, folks.

There is a more recent development I’ve seen some developers use for games that came out before the launch of the 4000 cards: hybrid DLSS. You may see DLSS 2.0 upscaling options, but with DLSS 3 Frame Generation as a separate toggle. Hogwarts Legacy and Hitman III come to mind, as both use those two DLSS technologies in tandem to provide a cleaner experience. If you want to get into a bit of tinkering, you can occasionally fix issues by replacing a game’s DLSS .dll with a newer version, though fixing this should fall to the developer, not gamers. As I mentioned, your mileage may vary, but you’ll probably be too busy enjoying a stupidly high framerate and resolution to notice.

Boost Clock:

The boost clock is hardly a new concept, going all the way back to the GeForce GTX 600 series, but it’s a necessary part of wringing every frame out of your card. Essentially, the base clock is the “stock” running speed you can expect from your card at any given time, no matter the circumstances. The boost clock, on the other hand, lets the GPU adjust its speed dynamically, pushing beyond the base clock when there’s power and thermal headroom to spare. The RTX 3080 Ti had a boost clock of 1.66GHz, with a handful of third-party cards sporting overclocked speeds in the 1.8GHz range. The RTX 4090 ships with a boost clock of 2.52GHz, and the 4080 was right alongside it at 2.51GHz. The RTX 4080 Super nudges that up to 2.55GHz, edging past even the 4090.

What is an RT Core?

Arguably one of the most misunderstood aspects of the RTX series is the RT core. This is a dedicated unit within each streaming multiprocessor (SM) that calculates where light rays intersect triangles. Put simply, it’s the math unit that makes realtime lighting work and look its best. Multiple SMs and RT cores work together, processing instructions concurrently, so a multitude of light rays can be tested against the objects in the environment in multiple ways, all at the same time. In practical terms, it means a team of graphic artists and designers don’t have to “hand-place” lighting and shadows and then adjust the scene based on light intersection and intensity. With RTX, they can simply place the light source in the scene and let the card do the work. I’m oversimplifying it, for sure, but that’s the general idea.
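
For a feel of the math RT cores accelerate, here’s the classic Möller-Trumbore ray/triangle intersection test in plain Python. This is the textbook software algorithm, not a description of how NVIDIA’s hardware implements it. The general idea is that an RT core runs intersection tests like this, plus the acceleration-structure traversal that decides which triangles to test, in dedicated silicon instead of on the shader cores.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_triangle(origin: Vec3, direction: Vec3, v0: Vec3, v1: Vec3, v2: Vec3,
                 eps: float = 1e-9) -> Optional[float]:
    """Textbook Moller-Trumbore test: distance t along the ray to the hit, or None."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:                 # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det            # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv_det    # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det
    return t if t > eps else None      # ignore hits behind the ray origin

tri = ((-1.0, -1.0, 0.0), (1.0, -1.0, 0.0), (0.0, 1.0, 0.0))
print(ray_triangle((0.0, 0.0, -1.0), (0.0, 0.0, 1.0), *tri))  # 1.0 (hit)
print(ray_triangle((5.0, 5.0, -1.0), (0.0, 0.0, 1.0), *tri))  # None (miss)
```

A single frame of a ray-traced scene needs an enormous number of these tests. That volume is why offloading them to dedicated hardware matters so much.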

The RT core is the engine behind your realtime lighting processing. The “shader processing power” you hear about is a different figure, though: it measures the FP32 throughput of the CUDA cores. For comparison, the RTX 3080 Ti was capable of 34.10 TFLOPS of shader processing, with the 3090 putting in 35.58. The RTX 4090 supplies 82.58 TFLOPS. The 4080 brought roughly 49 TFLOPS, and the 4070 reports in at 29 TFLOPS alongside 46 RT cores. The RTX 4080 Super’s 52.22 TFLOPS puts the 4070 to shame and edges past the 4080, and it pairs that with 80 RT cores, up from the 4080’s 76. It also puts the Steam-favorite 3080 Ti to shame, even though that card has 80 RT cores as well. The generational gap between these two ray tracing technologies is night and day (and could properly shade the night and day while it’s at it!), so it’s not a 1:1 correlation.
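
Those TFLOPS figures aren’t magic numbers. Peak FP32 shader throughput works out to CUDA cores × 2 (a fused multiply-add counts as two operations) × boost clock. The sketch below assumes the reference boost clocks in its table, taken from published specs. Factory-overclocked cards will land a little higher.

```python
# Peak FP32 throughput = CUDA cores x 2 FLOPs per clock (one FMA) x boost clock.
# Boost clocks are reference (non-overclocked) values, assumed from published specs.
CARDS = {
    # name: (CUDA cores, boost clock in GHz)
    "RTX 3080 Ti": (10240, 1.665),
    "RTX 3090": (10496, 1.695),
    "RTX 4070": (5888, 2.475),
    "RTX 4080": (9728, 2.51),
    "RTX 4080 Super": (10240, 2.55),
    "RTX 4090": (16384, 2.52),
}

def fp32_tflops(cuda_cores: int, boost_ghz: float) -> float:
    """Theoretical peak FP32 TFLOPS, counting an FMA as two operations."""
    return cuda_cores * 2 * boost_ghz / 1000.0

if __name__ == "__main__":
    for name, (cores, ghz) in CARDS.items():
        print(f"{name}: {fp32_tflops(cores, ghz):.2f} TFLOPS")
```

The results reproduce the 34.10, 35.58, 52.22, and 82.58 TFLOPS figures quoted above. That’s a good sign those are shader (CUDA core) numbers rather than a measure of RT core throughput.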

Benchmarks:

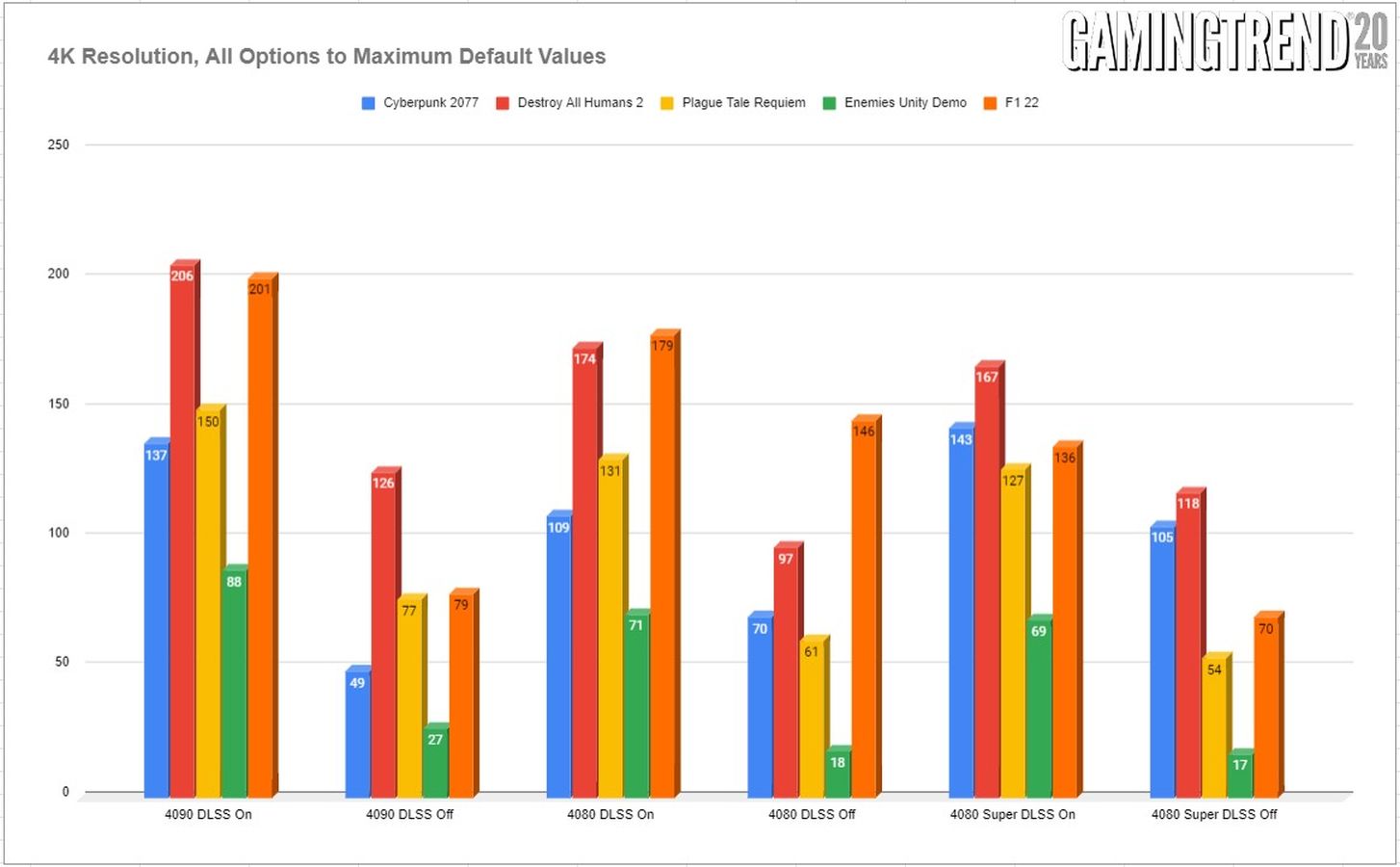

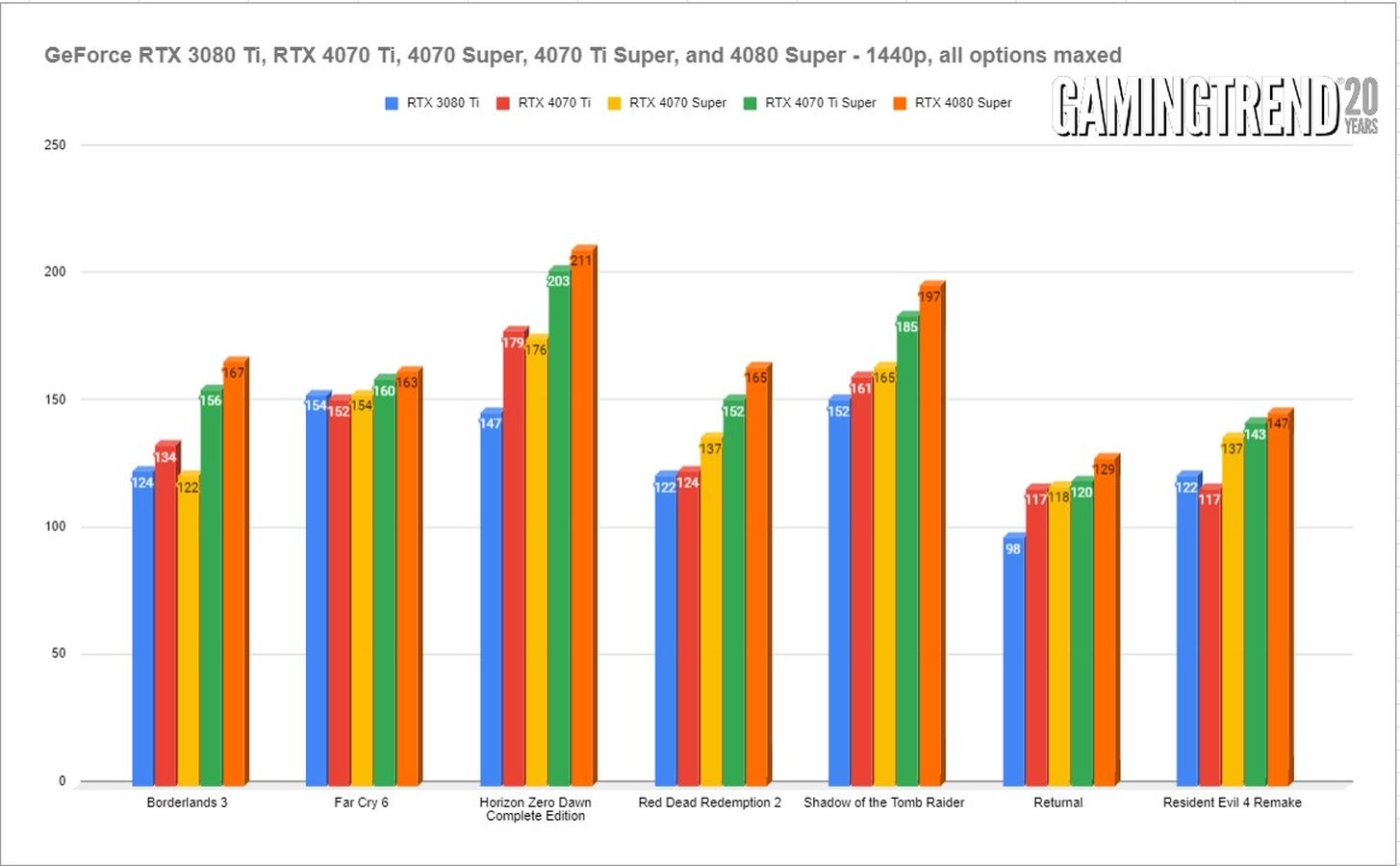

Starting off with previous-generation games without DLSS 3, we see a reasonable amount of improvement in some games and a drastic improvement in others. The gains are about what you’d expect for ray-traced titles, but the card also performs exceedingly well in older titles. Since so many readers are still using an RTX 3080 Ti, it’s worth comparing last generation’s high end against what is arguably a new member of the top tier. Let’s take a look at DLSS-enabled titles first.

It’s easy to see a 3-5% bump over the 4080 in most titles, with some seeing more than that. It’s also immediately clear that the effect of DLSS 3 is dramatic, and in so many cases the card delivers at 4K, and easily. Obviously some games benefit more than others, but DLSS 3 is clearly the difference between a smooth experience and choppy framerates. At 1440p there’s enough horsepower to go around, giving the player more choice in how aggressive to get with the DLSS slider, or whether to turn it off entirely. There’s likely more than enough extra horsepower to have your cake and eat it too, hitting 4K at 60+ fps. With Frame Generation, you’ll be far closer to 4K/120.

Pricing:

The RTX 4080 launched in November of 2022 at an MSRP of $1,200, though you’d be hard pressed to catch it at that price at that time due to the run on crypto mining cards. Thankfully that’s over, giving us a chance to grab a card at MSRP and off the shelf. As we kick off 2024, the NVIDIA GeForce RTX 4080 Super launches at $999, completely replacing the original card. I would keep an eye out for clearance opportunities on the original, but our testing bore out our initial supposition: a stronger card at a lower price. The Ada Lovelace architecture provides advances in encoding technology (NVIDIA rates its AV1 encoder as over 40% more efficient than H.264), double the ray tracing power, Frame Generation, and somehow an even smaller power footprint. Yes, a top-tier card costing a grand is certainly a tough pill to swallow. But given how far technologies like DLSS can stretch that dollar, and how much work the GPU is now doing, it’s a bit easier to justify.
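
For the price-to-performance angle we opened with, here’s the arithmetic as a quick Python sketch. The $1,200 and $999 MSRPs come from this review. The 5% performance uplift is an assumed round number within the range we saw, not a specific benchmark result.

```python
def perf_per_dollar_gain(old_price: float, new_price: float, perf_uplift_pct: float) -> float:
    """Percent change in performance per dollar when moving to the new card."""
    old_value = 1.0 / old_price                               # baseline performance = 1.0
    new_value = (1.0 + perf_uplift_pct / 100.0) / new_price
    return (new_value / old_value - 1.0) * 100.0

# MSRPs from the review; the 5% uplift is an assumed round figure.
gain = perf_per_dollar_gain(old_price=1200.0, new_price=999.0, perf_uplift_pct=5.0)
print(f"Performance per dollar: +{gain:.1f}%")   # prints "+26.1%"
```

Even with zero uplift, the price cut alone works out to roughly 20% more performance per dollar, since 1200 / 999 is about 1.20.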