If you head over to Steam and look at the Steam Hardware Survey’s GPU chart, you’ll see there’s been a shift. For the longest time, folks were clinging onto their GTX 1060s, with that card holding a large chunk of the market. Well, times have changed. As of March 2023, the new leader is the NVIDIA GeForce RTX 3060. Moreover, nearly 95% of folks have finally made the shift to DirectX 12 GPUs. After what has seemed like a lifetime of lagging behind, people are finally starting to upgrade their machines in anticipation of games like Starfield, Star Wars Jedi: Survivor, Redfall, Baldur’s Gate III, and more. As NVIDIA begins to roll out the rest of its 4000 series cards, we finally have our hands on the GeForce RTX 4070 – the latest in its mid-tier line of video cards. Could it be the right choice over the now-popular 3060, and what advantages would that jump bring? Let’s find out.

The first thing you’ll notice about the RTX 4070 is that we are back to a svelte two-slot design. As I pulled out the 4090 to slot this one in, the 4070 looked almost comically small by comparison. Still sticking with the passthrough fin design, it closely resembles the flagship cards of the last two generations. We’d need to look under the hood to see the differences.

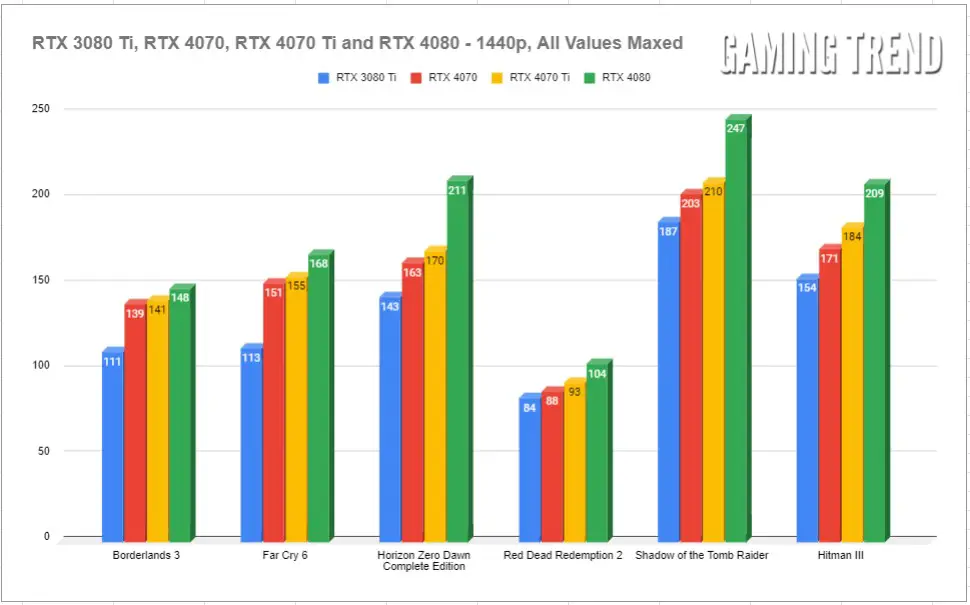

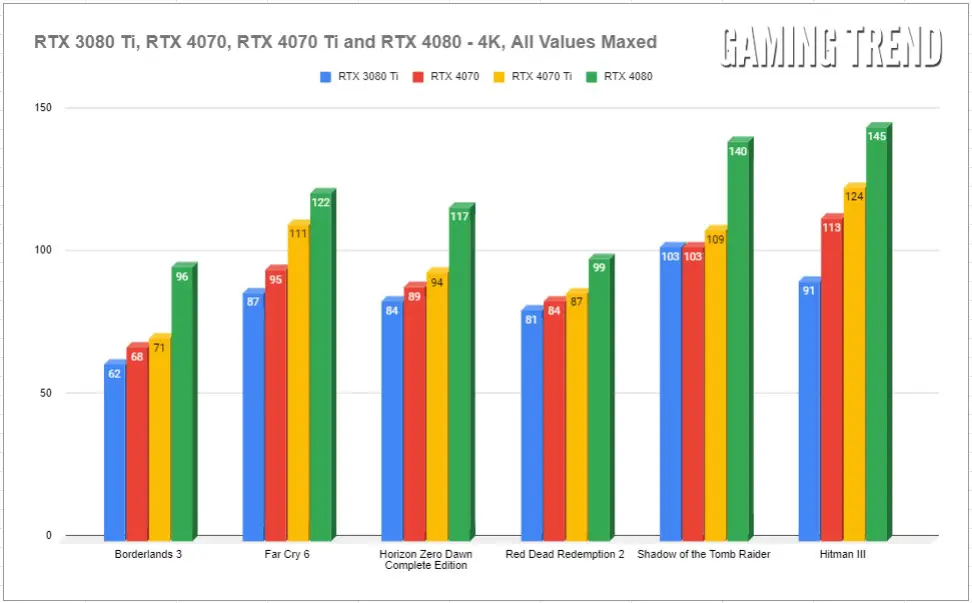

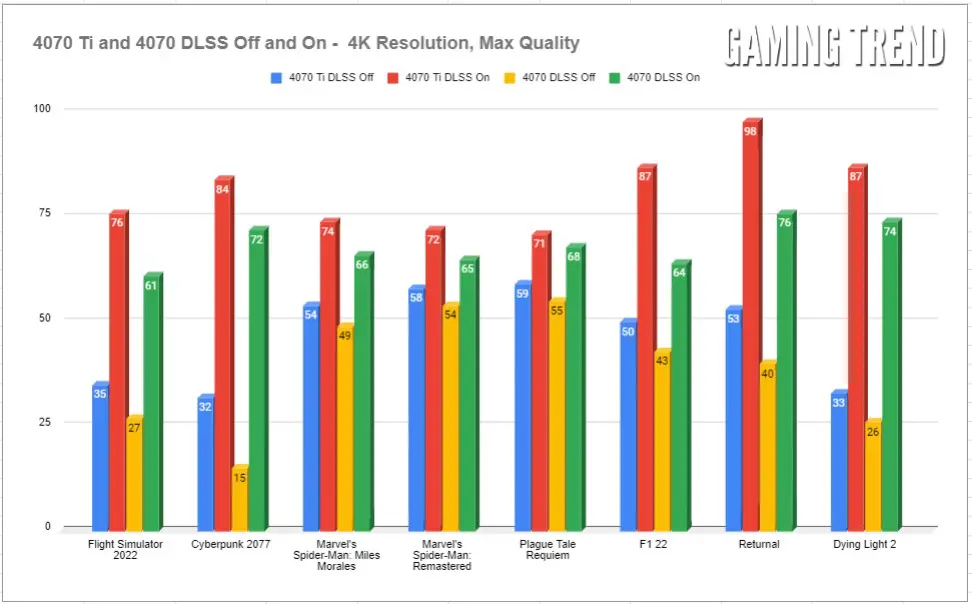

The biggest comparison folks are likely to make is the delta between the RTX 4070 Ti (which we’ve already reviewed) and the 4070. We’ll be doing precisely that, as well as comparing it to several other cards. It’s important to note that reviews are a snapshot in time, and driver maturity and game patches are likely to push numbers higher. As such, focus less on the individual numbers than on the delta between them. Or don’t – I’m not your dad. Either way, we’ll look at rasterized, DLSS 2, and DLSS 3 performance at 1440p, as that’s this card’s sweet spot. That isn’t to say the card can’t handle 4K content; it can, and does so easily enough with the help of DLSS 3, but it seems clear that the RTX 4070 is aimed at high-framerate 1440p gaming. 2K gamers rejoice.

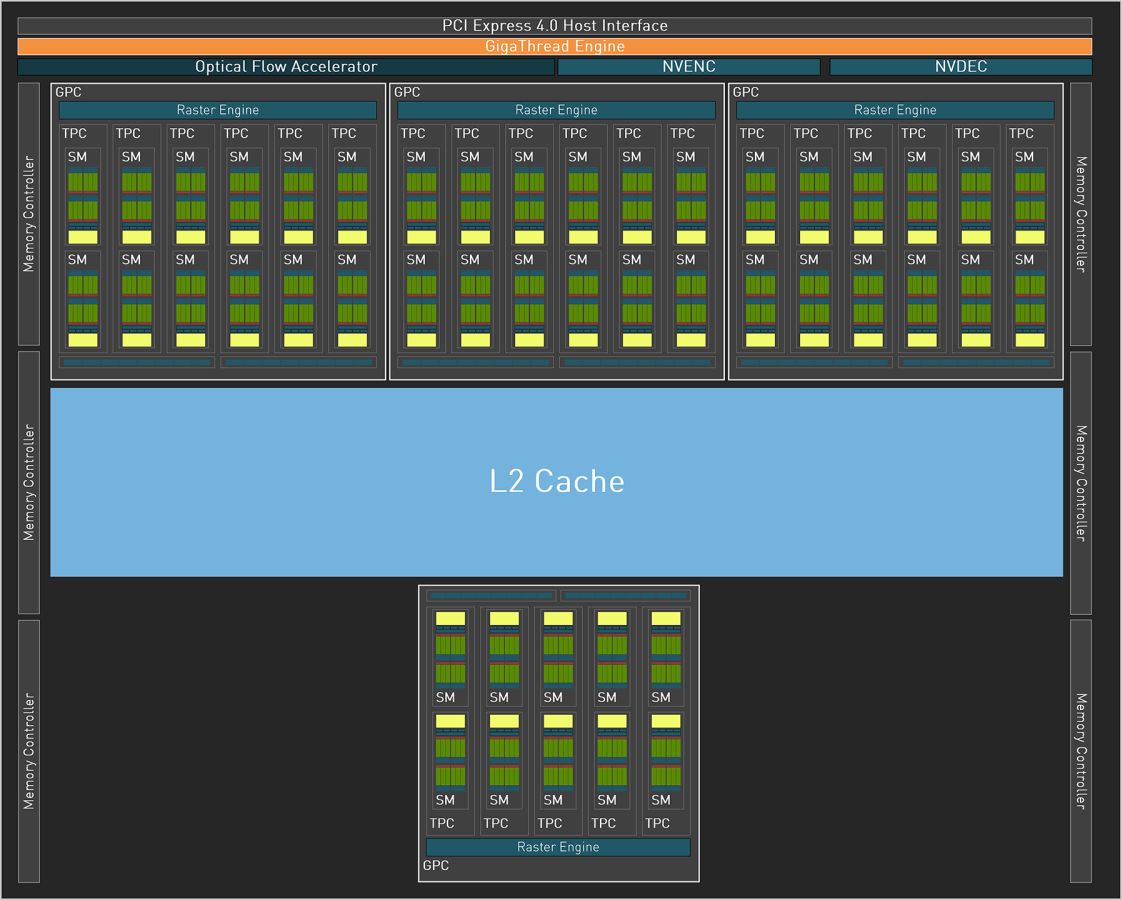

Another area where NVIDIA has invested is the L2 cache. The L2 cache holds local and commonly used data sets: shader code, a commonly computed vertex, a mesh, or anything else the GPU wants quick and frequent access to. Because it sits on the GPU die itself, it will always be faster than a trip out to the card’s GDDR6X memory, let alone a trip across the PCIe bus to system RAM. The L2 cache on the RTX 3070 Ti was a paltry 4MB. The 4070 arrives with a whopping 36MB. While that doesn’t sound like a lot, a ninefold increase in cache capacity can make a real difference in performance at runtime. The reason is simple – when a GPU executes literally thousands of calculations, it often does so with the same instructions and data over and over. Having them at the ready to re-use is only ever a good thing.
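To see why capacity matters so much, here is a minimal Python sketch that replays a hypothetical access trace through a least-recently-used (LRU) cache. The trace, the block counts, and the pure-LRU policy are all assumptions for illustration only; real GPU L2 caches work in cache lines and use their own replacement policies, and the 4 and 36 "blocks" below merely echo the 4MB and 36MB figures rather than model them.

```python
from collections import OrderedDict

def lru_hit_rate(accesses, capacity):
    """Replay an access trace through an LRU cache holding `capacity` blocks."""
    cache = OrderedDict()
    hits = 0
    for block in accesses:
        if block in cache:
            hits += 1
            cache.move_to_end(block)  # mark as most recently used
        else:
            cache[block] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used block
    return hits / len(accesses)

# Hypothetical trace: the same 24 blocks of shader data reused over 100 passes.
trace = [block for _ in range(100) for block in range(24)]

for capacity in (4, 36):
    print(f"{capacity:>2} blocks cached -> hit rate {lru_hit_rate(trace, capacity):.2%}")
```

When the working set doesn’t fit, LRU evicts each block just before it is needed again and the hit rate collapses to zero; once it fits, every access after the first pass is a hit.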

There’s an emerging trend among games – a rising need for VRAM. We’ve cited it in several reviews over the last two generations whenever cards shipped with less than 12GB of video memory. Naturally, increasing resolution is going to soak up quite a bit more VRAM, as will pushing level-of-detail settings. Game engines can be optimized, and there are a number of techniques to help keep resource utilization low, but that’s not something you can rely on. Asset culling, mipmapping, garbage collection, and other techniques can help control VRAM usage, but running at higher resolutions and pre-loading textures can soak it up like a sponge. As a game developer, I’m going to use every ounce of power available to me, and why shouldn’t I? There are minimum and recommended requirements, but everything beyond that is gravy. But what happens when the pool runs low?
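Some back-of-the-envelope arithmetic shows how quickly this adds up. The sketch below assumes uncompressed RGBA8 textures (4 bytes per texel) with a full mip chain, plus a single 4-byte-per-pixel render target; real games use block compression and many render targets, so treat these as illustrative sizes, not measurements of any particular title.

```python
def mip_chain_bytes(width, height, bytes_per_texel=4):
    """Total size of an uncompressed texture plus its full mip chain down to 1x1."""
    total = 0
    while True:
        total += width * height * bytes_per_texel
        if width == 1 and height == 1:
            break
        # Each mip level halves both dimensions, never going below 1 texel.
        width, height = max(1, width // 2), max(1, height // 2)
    return total

MIB = 1024 * 1024
for size in (2048, 4096):
    print(f"{size}x{size} RGBA8 with mips: {mip_chain_bytes(size, size) / MIB:.1f} MiB")

# One 4-byte-per-pixel render target at the two resolutions tested in this review.
for name, (w, h) in {"1440p": (2560, 1440), "4K": (3840, 2160)}.items():
    print(f"{name} render target: {w * h * 4 / MIB:.1f} MiB")
```

A full mip chain costs roughly a third more than the base texture, doubling texture resolution quadruples its footprint, and 4K pushes 2.25 times as many pixels per render target as 1440p.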

When your card runs out of VRAM, it has to lean on your system RAM to fill the gap. Rather than handling complex calculations, lighting, textures, or whatever else entirely within the card, it has to strike out across the motherboard’s PCIe lanes to your CPU and system memory. Though PCIe 5 is reaching amazing speeds (the RTX 4070 itself is a PCIe 4.0 card), and RAM speeds are shooting ever higher, it’s still going to be slower than running everything locally. If the system has to fall back on a swap file, that means a trip to your local storage (NVMe, hopefully), further slowing things.
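To put rough numbers on "slower than running everything locally," this sketch compares peak PCIe x16 bandwidth with on-card memory bandwidth. It assumes 21 Gbps GDDR6X on the 4070’s 192-bit bus and PCIe’s 128b/130b line encoding; these are peak theoretical figures, and latency, which also favors local VRAM, is not modeled.

```python
def pcie_gbps(gen, lanes=16):
    """Approximate one-direction PCIe bandwidth in GB/s (128b/130b encoding, gen 3+)."""
    transfer_rate_gt = {3: 8, 4: 16, 5: 32}[gen]  # GT/s per lane
    return transfer_rate_gt * lanes * (128 / 130) / 8

def vram_gbps(data_rate_gbps_per_pin, bus_width_bits):
    """Peak memory bandwidth in GB/s: per-pin data rate times bus width, in bytes."""
    return data_rate_gbps_per_pin * bus_width_bits / 8

local = vram_gbps(21, 192)  # assumed: 21 Gbps GDDR6X on a 192-bit bus
for gen in (4, 5):
    bus = pcie_gbps(gen)
    print(f"PCIe {gen}.0 x16: {bus:.1f} GB/s vs VRAM {local:.0f} GB/s ({local / bus:.0f}x)")
```

Even on PCIe 5.0, spilling over into system memory means working through a pipe roughly an eighth the width of the card’s own memory bus.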

One of the things we criticized previous cards for was that NVIDIA seemed to have shorted the VRAM pool a bit at 10GB. The RTX 3080 was a powerful card, but its 10GB of VRAM made the Ti version that much more attractive, as it had another 2GB of headroom. The 4000 series cards have been more generous, with the 4090 carrying 24GB of GDDR6X memory, the 4080 shipping with 16GB, and the 4070 Ti coming with 12GB. It seemed likely that the RTX 4070 would slim back down to 10GB, but thankfully that’s not the case. With 12GB of GDDR6X on the same 192-bit bus as the 4070 Ti, the RTX 4070 isn’t too far off from its bigger brother. Given the lower price, you’d expect a larger fall-off, but that’s not what we see here. Before we dig into the results, let’s take a look at the rest of what’s under the hood, and what those components do.

CUDA Cores:

Back in 2007, NVIDIA introduced CUDA, or Compute Unified Device Architecture, a platform for running massively parallel work on its GPUs, and the GPU’s parallel processing units have since become known as CUDA cores. In the simplest of terms, these CUDA cores process and stream graphics. Data is pushed through SMs, or Streaming Multiprocessors, which feed their CUDA cores in parallel through the cache memory. As the CUDA cores receive this mountain of data, the work is divided up among them, with each core processing its share of the instructions. The RTX 2080 Ti, as powerful as it was, had 4,352 cores. The RTX 3080 Ti upped the ante with a whopping 10,240 CUDA cores, just 256 shy of the behemoth 3090’s 10,496. The NVIDIA GeForce RTX 4090 ships with 16,384 cores. The GeForce RTX 4070 arrives with 5,888 CUDA cores, providing 29 FP32 shader TFLOPS of power, but that doesn’t tell the whole story. We’ll circle back to this.
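The "divide it up amongst themselves" step is easier to picture with a concrete kernel. Below is a CPU-side Python emulation of how a CUDA launch splits a data-parallel job into blocks of threads, each computing one element using CUDA’s usual global index, `blockIdx.x * blockDim.x + threadIdx.x`. The function names `saxpy_kernel` and `launch` are hypothetical; on a real GPU the blocks are scheduled across SMs and the threads run in parallel warps of 32 rather than in a Python loop.

```python
def saxpy_kernel(block_idx, block_dim, thread_idx, a, x, y, out):
    """Body of a hypothetical SAXPY kernel: each thread handles one element."""
    i = block_idx * block_dim + thread_idx  # CUDA-style global thread index
    if i < len(x):  # guard threads that fall past the end of the data
        out[i] = a * x[i] + y[i]

def launch(kernel, grid_dim, block_dim, *args):
    """Run every (block, thread) pair in turn; a real GPU runs them in parallel."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)

n = 1000
x = [float(i) for i in range(n)]
y = [1.0] * n
out = [0.0] * n
block_dim = 128  # threads per block
grid_dim = (n + block_dim - 1) // block_dim  # enough blocks to cover all n elements
launch(saxpy_kernel, grid_dim, block_dim, 2.0, x, y, out)
print(out[:4], grid_dim)  # [1.0, 3.0, 5.0, 7.0] 8
```

Because every element is independent, more cores simply means more of these threads in flight at once, which is why core counts scale so directly with throughput.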

Since the launch of the 4000 series, we’ve seen time and again that this newest generation of hardware, combined with the latest DLSS technology, delivers staggering framerate improvements. In some cases, NVIDIA suggests the framerate can double over the previous generation, and we saw exactly that in our previous reviews. Now that we’re getting into the mid-tier cards, there is less “extra” hardware to throw around, so I was eager to see where this card would fall in the hierarchy.

What is a Tensor Core?

Here’s another example of “but why do I need it?” within GPU architecture – the Tensor Core. This NVIDIA technology saw use in high-performance supercomputing and data centers before finally arriving on consumer-focused cards with the RTX 2000 series. Now, with the RTX 40-series, we have the fourth generation of these processors. For frame of reference, the 2080 Ti had 544 second-gen Tensor cores, the 3080 Ti provided 320, and the 3090 shipped with 328. The 4090 ships with 512 Tensor Cores, the RTX 4080 has 304, and the RTX 4070 has 184. Once again, this doesn’t tell you the whole story, and once again, I’m asking you to put a pin in that thought. So what does a Tensor core do?

Put simply, Tensor cores are your deep learning / AI / neural net processors, and they are the power behind technologies like DLSS. The RTX 40-series brings with it DLSS 3, a generational leap over what we saw with the 3000 series cards, and it is largely responsible for the massive framerate uplift NVIDIA has claimed. We’ll be testing that to see how much of the improvement comes from DLSS and how much comes from the raw power of the new hardware. This is important, as not every game supports DLSS, though that may be changing.

DLSS 3

One of the things DLSS 1 and 2 suffered from was adoption. DLSS 1 needed its neural network trained for each individual game, and even DLSS 2, with its generalized network, still had to be integrated by each studio. The results were fantastic, with 2.0 delivering clean images that could actually look better than native rendering. Still, adoption had to happen game by game. Some companies really embraced it, and we got beautiful visuals from games like Metro: Exodus, Shadow of the Tomb Raider, Control, Deathloop, Ghostwire: Tokyo, Dying Light 2: Stay Human, Far Cry 6, and Cyberpunk 2077. Say what you will about the last game – visuals weren’t the problem. Still, without engine-level adoption, growth would be slow. With DLSS 3, engine-level adoption is precisely what NVIDIA went after.

DLSS 3 is exclusive to the 4000 series cards. Prior generations fall back to DLSS 2.0, because the hardware this installment of DLSS relies on (namely the 4th-gen Tensor Cores and the new Optical Flow Accelerator) is found only on 4000-series cards. While that may be a bummer to hear, there is light at the end of the tunnel – DLSS 3 is now supported at the engine level by both Epic’s Unreal Engine 4 and 5, as well as Unity, covering a large share of the games being released for the foreseeable future. I don’t know what additional work developers have to do, but having it available at a deeper level should grease the skids.

To date, over 400 games use DLSS 2.0, and just shy of 100 games either already support DLSS 3 or have announced support arriving in the very near future. More importantly, DLSS 3 is natively supported in the Frostbite Engine (the Madden series, Battlefield series, Need for Speed Unbound, the Dead Space remake, etc.), Unity (the engine behind Fall Guys, Among Us, Cuphead, Genshin Impact, Pokemon Go, Ori and the Will of the Wisps, and Beat Saber), and Unreal Engines 4 and 5 (the next Witcher game, Warhammer 40,000: Darktide, Hogwarts Legacy, Loopmancer, Atomic Heart, and hundreds of other unannounced games). With native engine support, we’ve already seen the list double in the last 30 days, and I can only imagine that number continuing its vertical climb.

If you are unfamiliar with DLSS, it stands for Deep Learning Super Sampling, and it does what the name suggests. A neural network, trained by NVIDIA and run on your card’s Tensor cores, takes a frame the game rendered at a lower resolution, analyzes it, and supersamples it (that is to say, raises the resolution) while sharpening it. DLSS 1.0 and 2.0 stop there: every frame you see was rendered by the game and then upscaled, with 2.0 also drawing on data from previous frames and the game’s motion vectors to do it. DLSS 3 keeps that upscaling and adds Optical Multi Frame Generation, which creates entirely new frames. This means it is no longer just adding more pixels, but constructing whole new AI frames in between the traditionally rendered ones, raising the framerate.
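The core idea behind generating an in-between frame can be shown with a deliberately tiny toy: take a one-dimensional "frame," push each pixel halfway along its measured motion, and fill any uncovered background from the later frame. This is a hypothetical illustration of motion-compensated interpolation, not NVIDIA’s method; DLSS 3 feeds optical flow and game data into a neural network and handles occlusion far more carefully than the crude "faster pixels are in front" rule assumed here.

```python
def interpolate_frame(frame_a, frame_b, flow, t=0.5):
    """Build an in-between 1-D frame at time t by pushing frame_a's pixels along flow.

    frame_a, frame_b: pixel values of two consecutive rendered frames
    flow: per-pixel motion in pixels, from frame_a to frame_b
    """
    out = [None] * len(frame_a)
    # Draw slow pixels first so faster-moving ones land on top: a crude
    # stand-in for the depth handling a real frame generator needs.
    for x in sorted(range(len(frame_a)), key=lambda i: abs(flow[i])):
        target = round(x + t * flow[x])  # partway along this pixel's motion
        if 0 <= target < len(out):
            out[target] = frame_a[x]
    # Holes are background the object uncovered; borrow it from the later frame.
    return [frame_b[i] if v is None else v for i, v in enumerate(out)]

frame_a = [0, 0, 9, 0, 0, 0, 0, 0]  # object (value 9) at pixel 2
frame_b = [0, 0, 0, 0, 0, 0, 9, 0]  # ...and at pixel 6 one rendered frame later
flow = [0, 0, 4, 0, 0, 0, 0, 0]     # optical flow: only the object moved, by +4
print(interpolate_frame(frame_a, frame_b, flow))  # [0, 0, 0, 0, 9, 0, 0, 0]
```

The generated frame places the object at pixel 4, halfway between its two rendered positions, which is the effect frame generation aims for at a vastly larger scale.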

A peek under the hood of DLSS 3 shows that, with the upscaler in Performance mode, the AI is actually reconstructing ¾ of the first frame, the entirety of the second frame, then ¾ of the third, the entirety of the fourth, and so on. Using the rendered frames, alongside the optical flow field from the Optical Flow Accelerator, DLSS 3 builds the in-between frames based on where any objects in the scene are, as well as where they are going. This approach reconstructs 7/8ths of what you see from just 1/8th of the pixels being traditionally rendered, and it does so in a way that is almost undetectable to the human eye. Lights, shadows, particle effects, reflections, light bounce – all of it is calculated this way, resulting in the staggering improvements I’ll be showing you in the benchmarking section of this review.
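The 1/8 figure falls out of simple fractions. This sketch assumes Performance mode renders at half the output width and half the output height, and that frame generation alternates one upscaled frame with one fully generated frame, as described above.

```python
from fractions import Fraction

# Assumption: Performance mode renders at half width and half height.
rendered_share = Fraction(1, 2) * Fraction(1, 2)  # 1/4 of an upscaled frame's pixels

# Frame generation alternates: one upscaled frame, then one fully generated frame.
frames = [rendered_share, Fraction(0)]  # natively rendered share of each displayed frame

rendered = sum(frames) / len(frames)
print(f"rendered: {rendered}, AI-reconstructed: {1 - rendered}")
# rendered: 1/8, AI-reconstructed: 7/8
```

Using `Fraction` keeps the arithmetic exact, so the result reads as 1/8 and 7/8 rather than a rounded decimal.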

The launches of DLSS 1, 2, and 3 each came with some image quality oddities. Artifacting and ghosting, shimmering around edges and UI elements, and other visual hiccups hit some games harder than others. More recent patches have fixed many of these issues, though flickering UI elements can still appear when frame generation is enabled. This mostly occurs when a text box or UI element is in motion, such as a tag above a racer’s head or subtitles above a character. I saw this fairly extensively in Atomic Heart, though it was fixed in Cyberpunk 2077 – your mileage may vary, clearly. Thankfully, this is becoming less and less of an issue through game patches and driver updates, though much of it relies on the developer more than NVIDIA – you gotta patch your games, folks.

There is a more recent development I’ve seen some developers use for games that came out before the launch of the 4000 cards – hybrid DLSS. You may see DLSS 2.0 options, but with DLSS 3 Frame Generation as a separate toggle. Hogwarts Legacy and Hitman III come to mind, as both use the two DLSS technologies in tandem to provide a cleaner experience. If you want to do a bit of tinkering, you can occasionally fix issues by replacing the game’s DLSS .dll file, though fixing this should fall to the developer, not gamers. As I mentioned, your mileage may vary, but you’ll probably be too busy enjoying a stupidly high framerate and resolution to notice.
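For the tinkerers, here is a minimal sketch of a safer DLL swap that keeps a backup of the original. It assumes the game ships DLSS Super Resolution as `nvngx_dlss.dll` (the usual filename, though some games may differ), and `swap_dlss_dll` is a hypothetical helper, not an NVIDIA tool.

```python
import shutil
from pathlib import Path

def swap_dlss_dll(game_dir, new_dll, dll_name="nvngx_dlss.dll"):
    """Back up and replace every copy of the DLSS DLL found under game_dir."""
    replaced = []
    # Collect matches first so creating backups doesn't disturb the directory walk.
    for old in list(Path(game_dir).rglob(dll_name)):
        backup = old.with_name(old.name + ".bak")
        if not backup.exists():  # keep the untouched original from the first swap only
            shutil.copy2(old, backup)
        shutil.copy2(new_dll, old)
        replaced.append(old)
    return replaced
```

Usage would look like `swap_dlss_dll(r"C:\Games\SomeGame", r"C:\Downloads\nvngx_dlss.dll")`, with both paths being placeholders; restoring is a matter of copying the `.bak` file back over the DLL.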

Boost Clock:

The boost clock is hardly a new concept, going all the way back to the GeForce GTX 600 series, but it’s a very necessary part of wringing every frame out of your card. Essentially, the base clock is the “stock” running speed you can expect from your card at any given time, no matter the circumstances. The boost clock, on the other hand, lets the GPU adjust its speed dynamically, pushing beyond the base clock when power and thermal headroom allow. The RTX 3080 Ti had a boost clock of 1.66GHz, with a handful of third-party cards sporting overclocked speeds in the 1.8GHz range. The RTX 4090 ships with a boost clock of 2.52GHz, and the 4080 is right behind it at 2.51GHz. The RTX 4070 has a boost clock of 2.475GHz, giving it a bit of headroom on the compute side.
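The relationship between base clock, boost, and headroom can be sketched as a toy governor. Everything here is hypothetical: the watts-per-bin and bin-size coefficients are made-up illustration values, not NVIDIA’s GPU Boost algorithm. The 1,920MHz base clock is the 4070’s listed base, and for simplicity the rated 2,475MHz boost is treated as a hard ceiling, even though real cards often run above their rated boost clock when conditions allow.

```python
def toy_boost_clock(base_mhz, boost_cap_mhz, power_headroom_w,
                    watts_per_bin=1.5, bin_mhz=15):
    """Hypothetical governor: add one clock bin per `watts_per_bin` of spare power.

    The base clock is the floor and boost_cap_mhz the ceiling; both coefficients
    are made-up illustration values, not NVIDIA's GPU Boost parameters.
    """
    bins = max(0, int(power_headroom_w // watts_per_bin))  # no headroom -> base clock
    return min(boost_cap_mhz, base_mhz + bins * bin_mhz)

# Assumed 4070 clocks: 1,920 MHz base, capped at the 2,475 MHz rated boost here.
for headroom in (0, 30, 100):
    print(f"{headroom:>3} W spare -> {toy_boost_clock(1920, 2475, headroom)} MHz")
```

With no spare power the card sits at its base clock, modest headroom buys a few hundred megahertz, and beyond that the ceiling takes over.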

What is an RT Core?

Arguably one of the most misunderstood aspects of the RTX series is the RT core. Each streaming multiprocessor (SM) has its own RT core, a dedicated unit where intersections between light rays and triangles are calculated. Put simply, it’s the math unit that makes realtime lighting work and look its best. Many SMs and their RT cores work in parallel, processing huge numbers of rays concurrently, so a multitude of light sources can intersect with the objects in the environment in multiple ways, all at the same time. In practical terms, it means a team of graphic artists and designers doesn’t have to “hand-place” lighting and shadows, and then adjust the scene based on light intersection and intensity. With RTX, they can simply place the light source in the scene and let the card do the work. I’m oversimplifying it, for sure, but that’s the general idea.
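To give a sense of the math an RT core accelerates, here is the textbook Möller–Trumbore ray-triangle intersection test in plain Python. It is a standard software algorithm shown for illustration; NVIDIA’s fixed-function hardware implementation (which also walks a bounding volume hierarchy to decide which triangles to test) is not public, and this is not it.

```python
def ray_triangle_hit(origin, direction, v0, v1, v2, eps=1e-9):
    """Möller–Trumbore test: distance t along the ray to the triangle, or None on a miss."""
    def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
    def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
    def cross(a, b):
        return (a[1] * b[2] - a[2] * b[1],
                a[2] * b[0] - a[0] * b[2],
                a[0] * b[1] - a[1] * b[0])

    edge1, edge2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, edge2)
    det = dot(edge1, p)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, edge1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(edge2, q) * inv_det
    return t if t > eps else None  # ignore hits behind the ray origin

tri = ((-1, -1, 0), (1, -1, 0), (0, 1, 0))
print(ray_triangle_hit((0, 0, -1), (0, 0, 1), *tri))  # 1.0
```

A single frame of ray-traced lighting can require an enormous number of these tests, which is why dedicating silicon to them pays off.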

The RT core is the engine behind your realtime lighting, but it isn’t where the “shader processing power” figures come from; those TFLOPS numbers measure the CUDA cores. For comparison, the RTX 3080 Ti was capable of 34.10 shader TFLOPS, with the 3090 putting in 35.58. The RTX 4090? 82.58 TFLOPS. The 4080 brings roughly 49 TFLOPS, while the 4070 pairs its 46 RT cores with 29 TFLOPS of FP32 shading power. Looking at the numbers alone, you’d think that puts it below the 3080 Ti, but the generational gap between these two architectures is night and day (and could properly shade the night and day while it’s at it!), so it’s not a 1:1 correlation.
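The shader TFLOPS figures quoted above can be reproduced from each card’s CUDA core count and boost clock, assuming each core retires one fused multiply-add (two floating-point operations) per clock. The 3080 Ti and 3090 boost clocks used here (1.665GHz and 1.695GHz) are their listed specifications; these are theoretical peaks, not measured throughput.

```python
def fp32_tflops(cuda_cores, boost_ghz):
    """Peak FP32 throughput: each core can retire one fused multiply-add (2 FLOPs) per clock."""
    return cuda_cores * 2 * boost_ghz / 1000

cards = {
    "RTX 3080 Ti": (10_240, 1.665),
    "RTX 3090": (10_496, 1.695),
    "RTX 4070": (5_888, 2.475),
    "RTX 4090": (16_384, 2.52),
}
for name, (cores, ghz) in cards.items():
    print(f"{name}: {fp32_tflops(cores, ghz):.2f} TFLOPS")
```

This is also why the 4070 can punch above its core count: its much higher clocks recover a good chunk of the gap, before architectural improvements and DLSS 3 even enter the picture.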

Benchmarks:

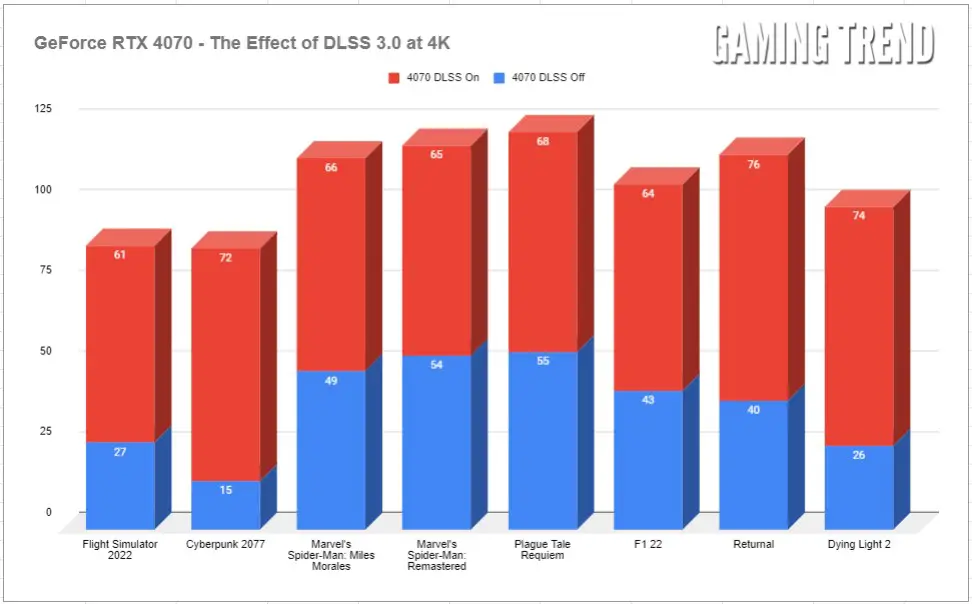

Given that this is a mid-range card, you wouldn’t expect solid 4K performance, but with DLSS 3, that’s not always the case. Sure, it’s not a crutch for bad game optimization, but it can make the difference between 60 and 90fps, and that can be the difference between a clean image and one that doesn’t feel quite right. As such, we have benchmarked everything at 1440p and 4K, both with DLSS enabled and without. Without further ado, let’s get into it:
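Framerates are easier to compare as frame times, the budget the card has to deliver each frame. This small sketch converts a few common framerates; it is plain arithmetic, not benchmark data.

```python
def frame_time_ms(fps):
    """Milliseconds available to deliver each frame at a given framerate."""
    return 1000.0 / fps

for fps in (60, 90, 120):
    print(f"{fps} fps -> {frame_time_ms(fps):.2f} ms per frame")
```

Going from 60 to 90fps shrinks each frame’s budget from about 16.67ms to about 11.11ms, so every frame arrives roughly 5.6ms sooner.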

Starting off with previous generation games without DLSS 3, we see a reasonable amount of improvement in some games and a drastic improvement in others. Borderlands 3 and Far Cry 6 jump a significant amount at 1440p, and surprisingly, Hitman III sees a solid jump at 4K. The improvements are about what you’d expect, though there is something I’ve done here you should note. I’m comparing the consumer flagship of last generation and the consumer flagship of this generation to a midrange card. These numbers back my initial thought – the 4070 isn’t best compared to a 3070, but to a 3080 Ti. Let’s take a look at DLSS-enabled titles.

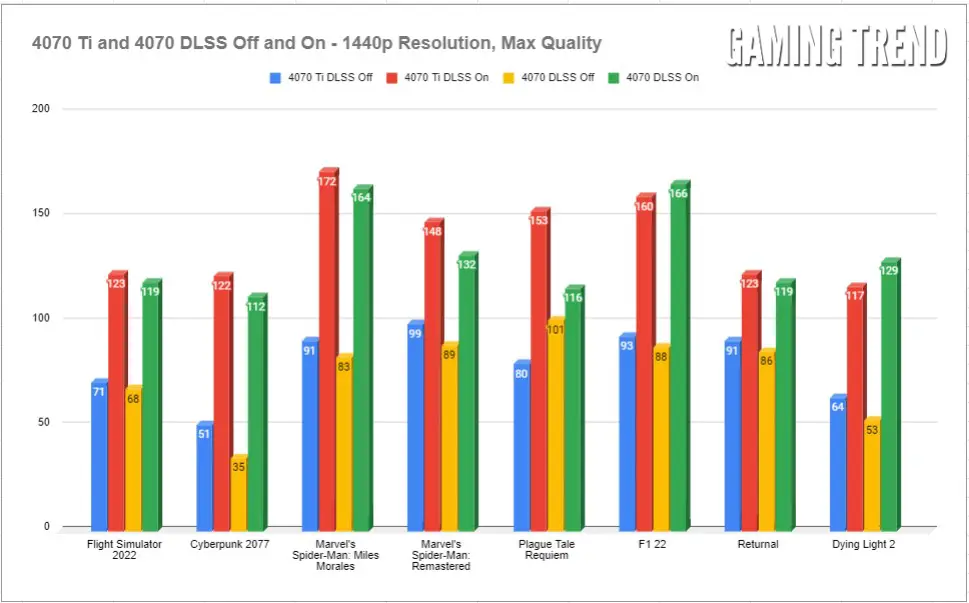

Here we have a more direct head-to-head against the RTX 4070 Ti – this card’s bigger (and $200 more expensive) brother. It’s immediately clear that the effect of DLSS 3 is dramatic, and we see that when a game like Dying Light 2 can go from unplayable at 4K to downright enjoyable at the same resolution with the flick of a toggle. Similarly, Cyberpunk 2077 is abysmal at that resolution without DLSS 3. Obviously some games benefit more than others, but it’s clear that DLSS 3 is the difference between a smooth 4K experience and not having one at all in many games. At 1440p there’s enough horsepower to go around, giving the player more of a choice on how aggressive they might choose to get with the DLSS slider, or turning it off entirely if they wish. For my money, I may be terrible at Microsoft Flight Simulator, but I’d rather play it at triple-digit framerates than in the mid-60s.

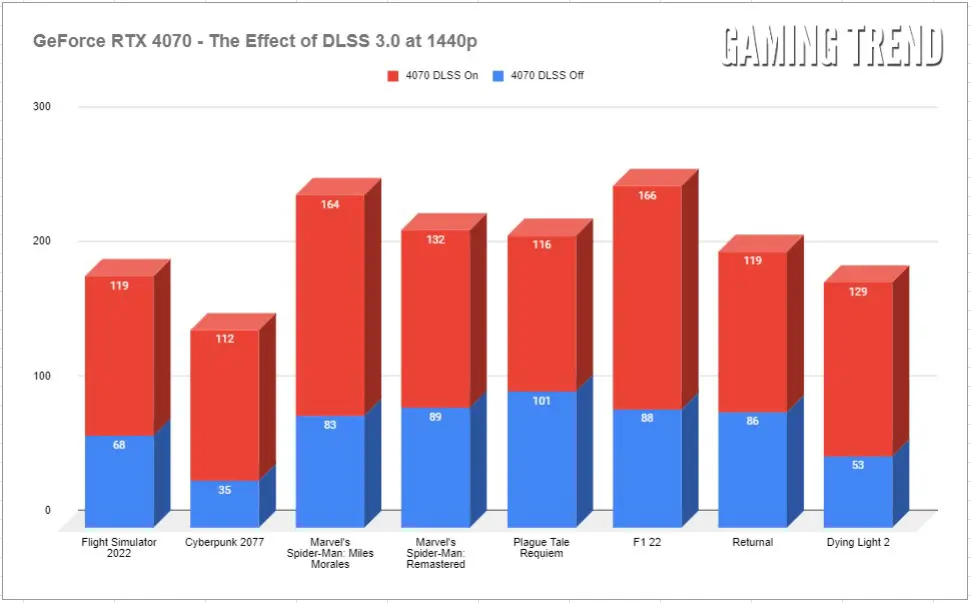

To reiterate my point about DLSS 3, I sliced the data a different way visually. A stacked graph shows just how much heavy lifting AI upscaling and frame generation are doing. At the price of the occasional wobbly UI element, I’ll take it.

Pricing:

There were a lot of pre-launch rumors around pricing, and for once the good news is proving true. Launching on April 13th, the RTX 4070 will retail for $599. The RTX 3070 launched at $499 back in the day, and many folks felt those days were long gone. Even factoring in the generational leap in tech, inflation, and higher production costs, NVIDIA’s own version of the card will sell for $599, though partners are naturally free to price their variants however they see fit.

Priced against its competitors, and relative to performance, this $599 mark feels appropriate. It’s competitive, should provide a long run of high-performance gaming, and is a full $200 below the 4070 Ti’s $799 launch price. A quick check on Amazon shows RTX 3070s selling for anywhere between $559 and $619 before you get into the really odd variants. Those cards don’t carry the current generation of cores, nor do they support AV1 encoding or DLSS 3. For roughly the same money, you can have all of those things, and you’ll pick up a card with a warranty in the process. Sounds like a win all around to me.

NVIDIA GeForce RTX 4070

Excellent

With upcoming games like Redfall, Diablo IV, Star Wars Jedi: Survivor, Boundary, and Avatar: Frontiers of Pandora all supporting DLSS 3 at launch, and a rapidly growing catalog of games joining them, there’s a lot to be excited about in NVIDIA’s tech portfolio. The RTX 4070 seems primed to capture that mid-tier market. With a price more in line with the previous generation, and a solid improvement to numbers across the board, it could be the upgrade you’ve been waiting for.

Pros

- Price-to-performance ratio seems like a good value

- 12GB of GDDR6X VRAM is appreciated

- Excellent 1440p performance

- Reasonable 4K performance with DLSS enabled

Cons

- $100 premium over 3070 launch price

- Still some DLSS 3 UI artifacting