If you thought NVIDIA might be resting for the holidays, you might be surprised to hear they were hard at work preparing for the launch of a brand new card just after Christmas. In October of 2022, NVIDIA launched the RTX 4090 (our review) – a shockingly powerful card with a premium price. It also shipped with a new version of super sampling that is proving to be a game changer. NVIDIA had also announced two RTX 4080s, but confusion about the two cards pushed the manufacturer to take a different approach. Instead of releasing two identically named cards, they split them into more familiar naming conventions – the RTX 4080, and the RTX 4070 Ti. We reviewed the RTX 4080 just recently and found that it delivered a significant amount of power, especially when paired with NVIDIA’s AI-powered tech, DLSS 3.0. We also saw that it was no slouch without DLSS, pushing more than double the framerate of an RTX 3080 Ti in many cases. Today we take a closer look at the RTX 4070 Ti, the first card to step down from the high-end hardware of the first two cards, and at a price point closer to what the average consumer will tolerate. Time to see what’s under the hood.

The Founders Edition versions of the RTX 4090 and 4080 are both three-slot cards, and if you expected the “Ti” in the 4070 Ti to stand for “Tiny”, I’ve got news for you. We are looking at the ASUS TUF GeForce RTX 4070 Ti, and it’s also going to occupy three slots in your case. As before, you’ll want to mind airflow and clearances if you intend to use your second PCIe slot.

As this is the third card to utilize the Ada Lovelace architecture, it carries with it all of the advantages that implies, including AI-driven DLSS 3.0. As that is exclusive to this architecture, it’s a valuable piece of the overall package. Let’s dig into the various aspects of the card, how they interoperate, and what sort of performance comes out the other end, starting with the biggest topic of conversation – price.

Pricing:

It’s still worth mentioning that these prices are driven by realities in the market, not as much by greed as people think. Chip manufacturer TSMC has increased pricing across the board, and since they provide chips to AMD, Intel, NVIDIA, and more, it’s very likely that what we are seeing with this price is the tip of the proverbial iceberg. While chip prices are starting to stabilize, we aren’t quite there yet.

The RTX 4090 was launched at a premium price of $1599, and the MSRP of the 4080 hitting at $1199 was a tough pill to swallow. The RTX 4070 Ti gets a bit of a haircut at $799, and to hit that price there are a number of concessions. Benchmarks will reveal the price to performance figures, but let’s look at the realities of the market at launch. Before the 4080 launched, NVIDIA had two competing product lines to contend with – AMD’s RDNA 3-based RX 7000 series, and Intel’s Arc A750 and A770. The Arc cards proved to be no competition at all, though the RX 7000 series has proved more formidable.

For comparison, the Radeon RX 7900 XTX costs $999, with the Radeon RX 7900 XT coming in at $899. Against the $1199 RTX 4080, if price is your only consideration, the AMD cards are the clear winner, though both cost more than the RTX 4070 Ti. Looking at performance and some specific features, however, tells the rest of the story.

Thanks to DLSS, there are now two numbers to look at – rasterized, and those improved through frame generation. The 4090 and 4080 are cards built for 4K gaming, so there’s little point in testing them at anything less. With everything set to max and running at that resolution, we saw a huge uplift for both cards. The 4090 saw improvements between 50 and 80% for most titles and outright doubling for others, when compared against the RTX 3090 Ti – the premium flagship of the last generation. Rasterized, we saw between 21 and 25% uplift against that same card, and when compared against more commercially available cards like the 3080 and 3080 Ti, we saw that climb to 40% or more raw improvement. Whether you are using DLSS or not, these cards are impressive.

Looking over reviews of AMD’s new cards at the time (I didn’t have access to AMD’s current generation – where are you AMD?), there seems to be a roughly 35% performance uplift, and a fairly solid 20% performance uplift in 4K. Where they struggle, unfortunately, is with real-time lighting. Given that developers are stumbling over themselves to put ray tracing into their games nowadays, that’s a real Achilles heel. In most tests, even AMD’s most powerful cards struggled to maintain 30fps at 4K with ray tracing enabled – there’s a lot of work to be done here. Unfortunately this also extended to VR, where I saw a number of articles and forum posts from users struggling with crashes and stuttering in virtual reality – a recipe for nausea. AMD has a knack for fixing a lot of their hiccups post-launch with driver updates, so it’s likely that we’ll see parity over time. Still – it’s a lot to consider when you are spending this much cash.

Before you label me some sort of NVIDIA apologist, please note that I don’t make value judgments around games or hardware. I report the price, I report the market condition, and you have to make a decision on how you proceed. The RTX 3070 Ti, the equivalent card from last generation, launched at $599 – $200 less than this card. A quick check of eBay has the likely-mining-used price for that card at roughly $450, with the new price on Amazon coming in hot at $750 to $800, with outliers like EVGA at over a grand. In short, while scalper pricing is thankfully coming down, we aren’t out of the woods yet. As such, if you were already planning on picking up a card in the current climate, there’s little reason not to try to grab a 4000 series card over a 3000 series one, as long as you can nab it at MSRP, as you’ll be paying roughly the same amount either way. While I do feel like it could be slightly over the price threshold consumers might expect, the reality is that this isn’t going to be, and cannot be, a $500 card with the tech packed inside. That said, here’s hoping the price can soften some to land in a space that fans can accept.
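To put those launch prices side by side, here is a small Python sketch that computes how much more one card costs than another in percentage terms. It only uses MSRPs quoted in this review; street prices, which shift daily, are deliberately left out, and the dictionary and function names are just illustrative.

```python
# Launch MSRPs (USD) as quoted in this review.
MSRP = {
    "RTX 3070 Ti": 599,
    "RTX 4070 Ti": 799,
    "RX 7900 XT": 899,
    "RX 7900 XTX": 999,
    "RTX 4080": 1199,
}


def pct_more(price: float, baseline: float) -> float:
    """Percentage by which `price` exceeds `baseline` (negative if cheaper)."""
    return (price - baseline) / baseline * 100


if __name__ == "__main__":
    base = MSRP["RTX 4070 Ti"]
    for card, price in MSRP.items():
        print(f"{card:12s} ${price:>5}  {pct_more(price, base):+6.1f}% vs RTX 4070 Ti")
```

Note that the baseline matters: the 4070 Ti costs about 33% more than the 3070 Ti did at launch, while the 3070 Ti is about 25% cheaper than the 4070 Ti. Both describe the same $200 gap.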

Enough about price, let’s talk about components, benchmarks, power, and performance.

CUDA Cores:

Back in 2007, NVIDIA introduced CUDA, or Compute Unified Device Architecture, its platform for running massively parallel work on the GPU, and the GPU cores that execute that work are known as CUDA cores. In the simplest of terms, these CUDA cores process and stream graphics. They are grouped into SMs, or Streaming Multiprocessors, which feed them data in parallel through the cache memory. As the CUDA cores receive this mountain of data, they divide the work up amongst themselves, with large groups of cores executing the same instruction on different pieces of data at once. The RTX 2080 Ti, as powerful as it was, had 4,352 of these cores. The RTX 3080 Ti ups the ante with a whopping 10240 CUDA cores, just 256 shy of the behemoth 3090’s 10496. The NVIDIA GeForce RTX 4090 ships with a massive 16384 cores, and the RTX 4080 has 9728 of them. The 4070 Ti slims things a bit at 7680, placing it squarely between the RTX 3080, which had 8704 (8960 on the 12GB model), and the 3070 Ti at 6144.

So…who is Ada Lovelace, and why do I keep hearing her name in reference to this card? Well, you may or may not know this already, but NVIDIA uses code names for their processors, naming them after famous scientists. Kepler, Turing, Tesla, Fermi, and Maxwell are just a few of them, with the most current being Ada Lovelace, who is considered the first computer programmer. These folks have delivered some of the biggest leaps in technology mankind has ever known, and NVIDIA recognizes their contributions. It’s a cool nod, and if that sends you down a scientific rabbit hole, then mission accomplished.

The 4000 series of cards brings with them the next generation of tech for GPUs. We’ll get to Tensor and RT cores, but at its simplest, this next-gen architecture delivers increased performance in three main areas – streaming multiprocessing, AI performance, and ray tracing. In fact, NVIDIA claims it can deliver double the performance across all three. I salivate at these kinds of claims, as they are very much things we can test and quantify.

What is a Tensor Core?

Here’s another example of “but why do I need it?” within GPU architecture – the Tensor Core. This technology from NVIDIA had seen wider use in high performance supercomputing and data centers before finally arriving on consumer-focused cards with the RTX 20-series. Now, with the RTX 40-series, we have the fourth generation of these processors. For frame of reference, the 2080 Ti had 544 second-gen Tensor Cores, the 3080 Ti provided 320, and the 3090 shipped with 328. The 4090 ships with 512 Tensor Cores, the RTX 4080 has 304, and the RTX 4070 Ti comes with 240, again putting it between the 3080 and 3070 Ti. What the raw counts don’t show is the generational difference at play: each Tensor Core generation roughly doubles performance and efficiency. In this case, the new generation also unlocks DLSS 3 – something exclusive to the 4000 series cards. DLSS already provides a significant framerate uplift in over 250 games, with more arriving every day. So what do they do?

Put simply, Tensor cores are your deep learning / AI / Neural Net processors, and they are the power behind technologies like DLSS. The RTX 4000 series brings with it DLSS 3, a generational leap over what we saw with the 3000 series cards, and is largely responsible for the massive framerate uplift claims that NVIDIA has made. We’ll be testing that to see how much of the improvements are a result of DLSS and how much is the raw power of the new hardware. This is important, as not every game supports DLSS, but that may be changing.
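The core operation a Tensor Core accelerates is a small matrix multiply-accumulate, D = A × B + C, carried out on a whole tile at once. The pure-Python sketch below spells out that math for square tiles; a 4×4 tile matches first-generation Tensor Cores, while newer generations use other (larger) shapes and lower-precision inputs. It is only an illustration of the arithmetic, not how the hardware or NVIDIA’s libraries are actually programmed.

```python
from typing import List

Matrix = List[List[float]]


def mma_tile(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """Return D = A @ B + C for square tiles of equal size.

    A Tensor Core performs this fused multiply-accumulate on a whole tile
    as a single operation; here each output element is computed in a loop.
    """
    n = len(a)
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = c[i][j]  # start from the accumulator input
            for k in range(n):
                acc += a[i][k] * b[k][j]  # multiply and accumulate
            d[i][j] = acc
    return d
```

Neural networks like the one behind DLSS boil down to enormous numbers of these tile operations, which is why dedicated hardware for them pays off.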

DLSS 3

One of the things DLSS 1 and 2 suffered from was adoption. Studios would have to go out of their way to integrate it, and the original DLSS even required the neural network to be trained on each individual game. The results were fantastic, with 2.0 bringing cleaner images that could actually be better than the original source image. Still, adoption at the game level would be needed. Some companies really embraced it, and we got beautiful visuals from games like Metro: Exodus, Shadow of the Tomb Raider, Control, Deathloop, Ghostwire: Tokyo, Dying Light 2: Stay Human, Far Cry 6, and Cyberpunk 2077. Say what you will about the last game – visuals weren’t the problem. Still, without engine-level adoption, growth would be slow. With DLSS 3, engine-level adoption is precisely what NVIDIA went after.

DLSS 3 is a completely exclusive feature of the 4000 series cards. Prior generations of cards will undoubtedly fall back to DLSS 2.0 as the advanced cores (namely the 4th-Gen Tensor Cores and the new Optical Flow Accelerator) that are contained exclusively on 4000-series cards are needed for this fresh installment in DLSS. While that may be a bummer to hear, there is light at the end of the tunnel – DLSS 3 is now supported at the engine level by both Epic’s Unreal Engine 4 and 5, as well as Unity, covering the vast majority of all games being released into the indefinite future. I don’t know what additional work has to be done by developers, but having it available at a deeper level should grease the skids. Here’s a quick list of what games support DLSS 3 already:

To date there are nearly 300 games that utilize DLSS 2.0, and approaching 50 games already supporting DLSS 3. More importantly, it’s natively supported in the Frostbite Engine (The Madden series, Battlefield series, Need for Speed Unbound, the Dead Space remake, etc.), Unity (Fall Guys, Among Us, Cuphead, Genshin Impact, Pokemon Go, Ori and the Will of the Wisps, Beat Saber), and Unreal Engine 4 and 5 (The next Witcher game, Warhammer 40,000: Darktide, Hogwarts Legacy, Loopmancer, Atomic Heart, and hundreds of other unannounced games). With native engine support, it’s very likely we’ll see a drastic increase in the number of titles that support the technology going forward.

If you are unfamiliar with DLSS, it stands for Deep Learning Super Sampling, and it works the way the name suggests. The game renders each frame at a lower internal resolution, and an AI model trained on NVIDIA’s supercomputers supersamples it (that is to say, raises the resolution) while sharpening it. DLSS 2.0 feeds that model the current frame along with motion vectors and data from previous frames, so each new frame builds on the ones before it. DLSS 3 keeps that upscaling and adds the new Optical Multi Frame Generation, which creates entirely new frames. This means it is no longer just adding more pixels, but also generating whole frames in between the ones the game renders.

A peek under the hood of DLSS 3 shows that, in Performance mode, the AI behind the technology is reconstructing ¾ of the first frame, the entirety of the second frame, then ¾ of the third, the entirety of the fourth, and so on. To create those in-between frames, DLSS 3 combines two sequential rendered frames with the optical flow field from the Optical Flow Accelerator and game data such as motion vectors, working out where any objects in the scene are, as well as where they are going. This approach generates 7/8ths of the displayed pixels while rendering only 1/8th of them, and it does so in a way that is almost undetectable to the human eye. Lights, shadows, particle effects, reflections, light bounce – all of it is handled this way, resulting in the staggering improvements I’ll be showing you in the benchmarking section of this review.
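The 7/8ths figure falls out of simple arithmetic. The sketch below assumes Super Resolution in Performance mode, which renders at half the output resolution on each axis (¼ of the pixels), and Frame Generation inserting one generated frame for every rendered one; the function name is hypothetical.

```python
from fractions import Fraction


def rendered_fraction(axis_scale: Fraction, generated_per_rendered: int) -> Fraction:
    """Fraction of displayed pixels that were traditionally rendered.

    axis_scale: internal render resolution / output resolution, per axis.
    generated_per_rendered: AI-generated frames inserted per rendered frame.
    """
    pixels_per_rendered_frame = axis_scale * axis_scale
    frames_per_group = 1 + generated_per_rendered
    # Only the rendered frame in each group contributes rendered pixels.
    return pixels_per_rendered_frame / frames_per_group


if __name__ == "__main__":
    rendered = rendered_fraction(Fraction(1, 2), generated_per_rendered=1)
    print(f"rendered: {rendered}, AI-generated: {1 - rendered}")  # rendered: 1/8, AI-generated: 7/8
```

Using `Fraction` keeps the result exact, so the output reads as the same 1/8 and 7/8 NVIDIA quotes rather than a rounded decimal.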

It’s not all sunshine and rainbows with DLSS, though some of this is certainly early-adopter woes. Having now spent dozens of hours with DLSS 3-enabled games, I’ve begun to notice some slight disruption at the edges. Reducing or increasing the amount of adjustment being made by DLSS improves this. You’ll have to find a balance between framerate and image clarity – something that NVIDIA tries to do with the various settings of Quality, Performance, Ultra Performance and the like. One thing is for certain, however – frame generation is going to yield what can only be described as a stupidly high framerate, even at high resolutions. We’ll come back to this when we get to benchmarks, where we’ll be showing both rasterized non-DLSS framerates and DLSS-enabled framerates.
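Those quality settings trade clarity for framerate by changing the internal resolution the game renders at before DLSS upscales it. The sketch below assumes the commonly cited per-axis scale factors for DLSS Super Resolution (Quality about 66.7%, Balanced about 58%, Performance 50%, Ultra Performance about 33.3%); treat them as typical defaults, since individual games can differ.

```python
# Commonly cited per-axis render scales for DLSS Super Resolution modes.
# Assumption: typical defaults only; individual games may use other values.
DLSS_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra Performance": 1 / 3,
}


def internal_resolution(width: int, height: int, mode: str) -> tuple[int, int]:
    """Approximate resolution the GPU renders at before DLSS upscales it."""
    scale = DLSS_SCALE[mode]
    return round(width * scale), round(height * scale)


if __name__ == "__main__":
    for mode in DLSS_SCALE:
        w, h = internal_resolution(2560, 1440, mode)
        print(f"{mode:18s} renders {w}x{h} for a 2560x1440 output")
```

At 1440p, Performance mode is rendering a 720p image, which is why the edge artifacts mentioned above tend to ease as you move toward Quality.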

Boost Clock:

The boost clock is hardly a new concept, going all the way back to the GeForce GTX 600 series, but it’s a very necessary part of wringing every frame out of your card. Essentially, the base clock is the “stock” running speed of your card that you can expect at any given time no matter the circumstances. The boost clock, on the other hand, allows the GPU to adjust its speed dynamically, pushing beyond the base clock when power and thermal headroom allow. The RTX 3080 Ti had a boost clock of 1.66GHz, with a handful of 3rd party cards sporting overclocked speeds in the 1.8GHz range. The RTX 4090 ships with a boost clock of 2.52GHz, and the 4080 is not far behind it at 2.51GHz. The 4070 Ti pops in ahead of both at 2.61GHz – compensation for the reduction in other areas, most likely. Since launch I’ve done some tinkering with overclocking on the 4090 and 4080 and I’m surprised at both how easy it is, and how much additional headroom is available to play with. The 4070 Ti seems to have some wiggle room as well without impacting stability – something that’ll make overclockers happy. That said, now’s a good time to talk about power.

Power:

The RTX 4090 is a hungry piece of tech, and you’ll need 100 more watts of power than the RTX 3090 to power it. The 4090 has a TGP (Total Graphics Power) of 450W, and NVIDIA is recommending a power supply of 850W. The RTX 4080 isn’t too far behind at 320W, with a PSU recommendation of 750W. The 4070 Ti, on the other hand, is frankly tame by comparison at 285W, with a 700W PSU recommended. The rumors of needing a 1500W PSU for any of these cards are highly overstated, even with a bleeding edge CPU and more.
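A rough power budget shows why a 1500W supply is overkill. This sketch adds each GPU’s rated TGP to the 241W maximum turbo power of the Core i9-12900K used in our test bench, plus a hypothetical 100W allowance for the motherboard, memory, drives, and fans, then compares the total with NVIDIA’s recommendation. It is a back-of-the-envelope estimate, not a PSU sizing tool, and it ignores brief transient spikes, which are part of why the official recommendations leave headroom.

```python
# GPU total graphics power (TGP) and NVIDIA's recommended PSU, in watts.
GPUS = {
    "RTX 4090": {"tgp": 450, "recommended_psu": 850},
    "RTX 4070 Ti": {"tgp": 285, "recommended_psu": 700},
}
CPU_MAX_TURBO_W = 241   # Intel Core i9-12900K maximum turbo power
REST_OF_SYSTEM_W = 100  # assumption: board, RAM, drives, fans, USB devices


def estimated_draw(gpu_tgp: int) -> int:
    """Worst-case sustained system draw under the assumptions above."""
    return gpu_tgp + CPU_MAX_TURBO_W + REST_OF_SYSTEM_W


if __name__ == "__main__":
    for name, spec in GPUS.items():
        draw = estimated_draw(spec["tgp"])
        psu = spec["recommended_psu"]
        print(f"{name}: ~{draw}W estimated vs {psu}W recommended "
              f"({draw / psu:.0%} load), vs 1500W ({draw / 1500:.0%} load)")
```

Even with CPU and GPU both pinned at their limits at the same time, which games rarely do, the estimate stays under NVIDIA’s recommended wattage for either card.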

To understand TGP and why it’s important, you should probably understand how it impacts performance. In short, TGP is the pool of power available for your GPU to utilize. The higher the workload, the more the GPU will tap that power pool. When you look at a game using your favorite benchmarking utilities (I like FrameView for the job) you might notice that your GPU is using more or less power than you might expect. This could mean your GPU is taking a break while the CPU catches up, or it’s just not using all the horsepower it has on tap. Raising the framerate doesn’t just consume more memory, but also leans more heavily into that pool. As the GPU becomes more efficient, you’ll see cards hit other limits like VRAM and CPU bottlenecks. As our testing revealed, it’s actually very difficult to max out that TGP pool unless you are gaming at 4K. It also reveals that the 4070 Ti is very much aimed at the 1440p crowd.

If you are using a PCIe Gen5 power supply (and four months after the 4090 launch there are still very few of these available, most from companies I’ve never heard of), you’ll have a dedicated cable from your PSU, meaning you will only be using a single power lead. Fret not if you have a Gen 4 PSU, however; you can simply use the included adapter. It’s a four-tailed whip: you can connect three 6+2 PCIe cables if you just want to power the card, but if you connect a fourth, you’ll provide the sensing and control needed for overclocking. Provided the same headroom as its predecessor exists, there should be room for overclocking, but that’s beyond the scope of this review. Undoubtedly there will be numerous people out there who feel the need to push this card beyond its natural limits. My suggestion to you, dear reader, is that you check out the benchmarks in this review first. I think you’ll find that overclocking isn’t something you’ll need for a very, very long time.

Since the launch of the 4090, a great many videos have been released about the resilience of the included adapter cable. Some tech experts have bent this thing to the nth degree, causing it to malfunction. I call that level of testing pointless, as you’d achieve the same end state if you abused a PCIe Gen4 PSU cable as well. Similarly, much has been said about the limitations of the cable when manufacturers pointed out that the cable is rated to be unplugged and plugged back in about 30 times. For press folks like myself, that’s not very many – I guarantee I’ve unplugged my cards more than that in the last 90 days as I test various M.2 drives, video cards, and other devices. You as a consumer likely will do this once – when you install the card. Even so, with all of my abuse I’ve got pristine pins on my adapter after four months of hard bending. It’s also worth noting that the exact same plug/unplug recommendations were made for the previous generation, and nobody cried then. Like any other computer component, treat it kindly and it’ll likely last for a very long time. Bend it into a pretzel shape to make it fit in a shoebox and you’ll likely have problems.

Memory:

The RTX 3070 Ti, 3080, 3080 Ti, 3090, and 3090 Ti all used GDDR6X, which uses PAM4 signaling to move more data per pin than traditional GDDR6, providing the most memory bandwidth of that generation. The GeForce RTX 3090 Ti sported 24GB of memory on a 384-bit wide memory bus, while the RTX 3070, with traditional GDDR6, used a 256-bit bus. The RTX 4090 uses that same 384-bit width and the same 24GB of GDDR6X. The RTX 4080 has 16GB of GDDR6X running slightly faster than its bigger brother’s (22.4Gbps per pin versus 21Gbps), but it does so on a 256-bit bus. The RTX 4070 Ti drops that to a 192-bit bus – the same width as the RTX 3060 (the 3070 and 3070 Ti both used 256-bit). It also slims down to 12GB, but sticks with GDDR6X. While it does have a narrower pipe, the throughput numbers on this next-gen memory are impressive, with the lineup so far delivering 1,008 GB/s, 716.8 GB/s, and 504 GB/s, respectively. Once again we look to the previous generation for comparison, where we see the 3090 at 936GB/s, the 3080 (10GB) at 760, the 3070 Ti at 608, the 3070 at 448, and the 3060 at 360. That places the 4070 Ti between the 3070 and 3070 Ti on memory throughput – honestly one of the only times we’ve seen this card fall short of the 3070 Ti thus far. Benchmarks will reveal if that matters. As we move into the other cards in the 4000 series, it’s very likely we’ll see this continue to change as the pipeline narrows and the overall pool of memory shrinks.
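Peak memory bandwidth follows directly from two numbers: bus width times per-pin data rate, divided by eight bits per byte. The sketch below uses NVIDIA’s published per-pin data rates, which aren’t otherwise quoted in this review, so treat that table as an added input rather than something from our testing.

```python
# (bus width in bits, per-pin data rate in Gbps), per NVIDIA's published specs.
CARDS = {
    "RTX 4090": (384, 21.0),
    "RTX 4080": (256, 22.4),
    "RTX 4070 Ti": (192, 21.0),
    "RTX 3090": (384, 19.5),
    "RTX 3080": (320, 19.0),
    "RTX 3070 Ti": (256, 19.0),
    "RTX 3070": (256, 14.0),
    "RTX 3060": (192, 15.0),
}


def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: (bus width x per-pin rate) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


if __name__ == "__main__":
    ranked = sorted(CARDS.items(), key=lambda kv: -bandwidth_gb_s(*kv[1]))
    for card, (bus, rate) in ranked:
        print(f"{card:12s} {bus:3d}-bit @ {rate:4.1f} Gbps -> {bandwidth_gb_s(bus, rate):7.1f} GB/s")
```

This makes the trade-off visible: the 4070 Ti’s memory runs about 10% faster per pin than the 3070 Ti’s, but its bus is 25% narrower, so its total bandwidth lands lower.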

Shader Execution Reordering:

One of the new bits of tech that is exclusive to the Ada Lovelace architecture is Shader Execution Reordering (SER). If you are running a 4000 series card, you’ll be able to process shaders more effectively, as they can be re-ordered and sequenced. Right now, shader work (these programs calculate light and shadow values, as well as color gradations) is processed in the order it is received, meaning neighboring threads are often running different shaders on unrelated data. It works, but it’s hardly efficient. With Shader Execution Reordering, this work can be re-organized on the fly so that similar workloads run together. NVIDIA claims this has a net effect of up to 25% improvement in framerates and up to 3X improvement in ray tracing operations – something you’ll see in our benchmarks later on in this review.
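The idea behind SER can be illustrated with a toy model. NVIDIA GPUs run threads in 32-thread groups called warps, and when the threads in a warp need different shaders, the hardware has to step through each of those shaders in turn. The Python sketch below is a hypothetical simplification, not how SER is actually implemented or exposed to developers: it counts how many shader passes the warps need before and after the work is sorted by shader ID.

```python
import random

WARP_SIZE = 32  # threads executed together on NVIDIA GPUs


def shader_passes(shader_ids: list[int], warp_size: int = WARP_SIZE) -> int:
    """Total passes needed if each warp runs every distinct shader it contains."""
    return sum(
        len(set(shader_ids[i:i + warp_size]))
        for i in range(0, len(shader_ids), warp_size)
    )


def reorder(shader_ids: list[int]) -> list[int]:
    """Hypothetical stand-in for SER: group work by the shader it will run."""
    return sorted(shader_ids)


if __name__ == "__main__":
    random.seed(0)
    # 1,024 ray hits scattered across 8 different materials (shaders).
    hits = [random.randrange(8) for _ in range(1024)]
    print("in arrival order:", shader_passes(hits))
    print("after reordering:", shader_passes(reorder(hits)))
```

Real ray-traced scenes are far messier than this, and the reordering itself costs time, which is one reason the gains are quoted as “up to” figures.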

What is an RT Core?

Arguably one of the most misunderstood aspects of the RTX series is the RT core. This core is a dedicated unit inside each streaming multiprocessor (SM) that calculates where light rays intersect the triangles in a scene, along with the bounding boxes that group those triangles. Put simply, it’s the math unit that makes real-time lighting work and look its best. Multiple SMs and RT cores work together to interleave the instructions, processing them concurrently, allowing the processing of a multitude of light sources that intersect with the objects in the environment in multiple ways, all at the same time. In practical terms, it means a team of graphical artists and designers don’t have to “hand-place” lighting and shadows, and then adjust the scene based on light intersection and intensity. With RTX, they can simply place the light source in the scene and let the card do the work. I’m oversimplifying it, for sure, but that’s the general idea.
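For a sense of the math RT cores take off the shader cores’ hands, here is the classic Möller–Trumbore ray-triangle intersection test in plain Python. NVIDIA doesn’t document the exact algorithm its hardware uses, so treat this as an illustration of the kind of calculation involved. A real frame runs millions of these tests, plus bounding-volume checks to skip most triangles, which is why dedicated hardware matters.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin: Vec3, direction: Vec3,
                 v0: Vec3, v1: Vec3, v2: Vec3,
                 eps: float = 1e-9) -> Optional[float]:
    """Möller–Trumbore test: distance t along the ray to the hit, or None on a miss."""
    edge1, edge2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, edge2)
    det = dot(edge1, p)
    if abs(det) < eps:               # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det          # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, edge1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(edge2, q) * inv_det      # distance along the ray
    return t if t > eps else None    # ignore hits behind the ray origin
```

For example, a ray fired from `(0.2, 0.2, 0)` straight along +z hits a triangle lying in the plane z = 5 at a distance of 5.0.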

The RT core is the engine behind your real-time lighting processing. It often gets lumped in with “shader processing power”, but that figure actually measures the FP32 throughput of the CUDA cores. For comparison, the RTX 3080 Ti was rated at 34.10 shader TFLOPS, with the 3090 putting in 35.58. The RTX 4090 provides 82.58 TFLOPS alongside 128 RT cores, the 4080 gives us 48.74 TFLOPS with 76 RT cores, and the RTX 4070 Ti provides 40.09 TFLOPS with its 60 RT cores. Again, given that so many developers are pushing real-time lighting into their games, it’s great to see that NVIDIA hasn’t pared down the RT cores that much.
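Those shader TFLOPS figures are easy to reproduce: each CUDA core can complete one fused multiply-add (two floating-point operations) per clock, so peak FP32 throughput is cores × 2 × boost clock. The sketch below uses the core counts and boost clocks from this review, assuming NVIDIA’s more precise published boost clocks of 1.665GHz for the 3080 Ti and 1.695GHz for the 3090.

```python
def fp32_tflops(cuda_cores: int, boost_clock_ghz: float) -> float:
    """Peak FP32 throughput: cores x 2 FLOPs per clock (one FMA) x clock."""
    flops_per_clock = 2  # a fused multiply-add counts as two operations
    return cuda_cores * flops_per_clock * boost_clock_ghz / 1000  # GFLOPS -> TFLOPS


CARDS = {
    "RTX 4090": (16384, 2.52),
    "RTX 4070 Ti": (7680, 2.61),
    "RTX 3090": (10496, 1.695),
    "RTX 3080 Ti": (10240, 1.665),
}

if __name__ == "__main__":
    for card, (cores, clock) in CARDS.items():
        print(f"{card:12s} {fp32_tflops(cores, clock):6.2f} TFLOPS")
```

These are theoretical peaks; no game keeps every core busy on every clock, which is why the benchmarks below matter more than the spec sheet.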

Benchmarks:

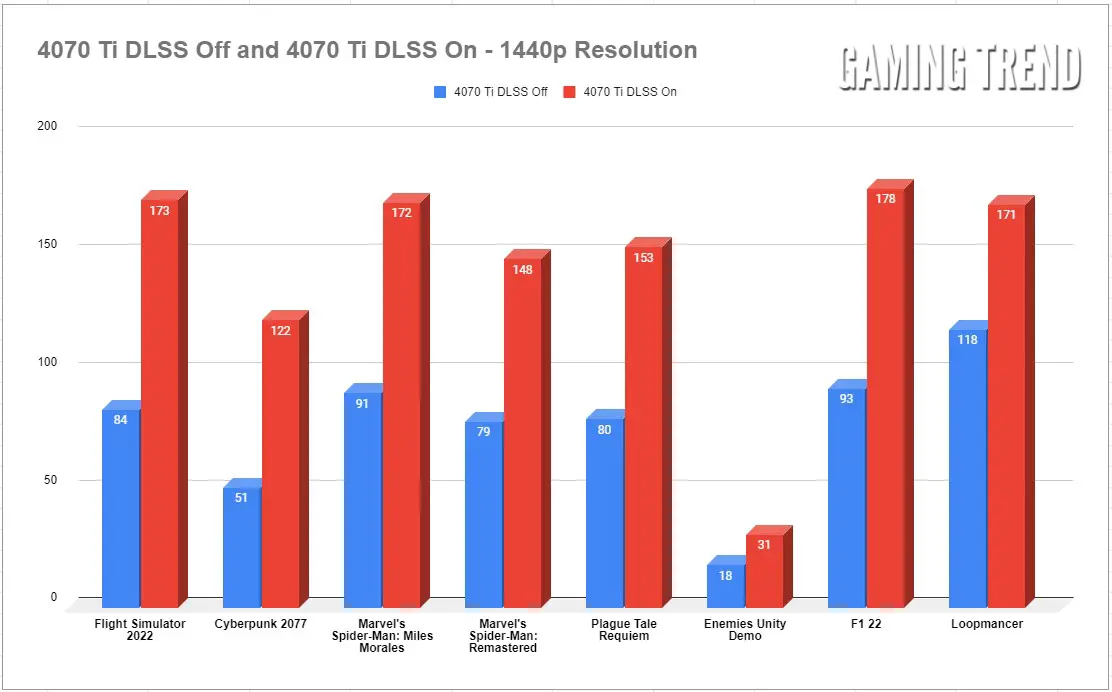

As we did on the 4090 and 4080, we’ll be measuring both DLSS-enabled scores as well as rasterized results. Primarily we’ll be focused on 1440p gaming at max settings, as that’s where this card lives. I pulled a cross-section of games that utilize DLSS 3, as well as the short Enemies demo, as it will absolutely brutalize any card on the market. Specifically, I selected Microsoft Flight Simulator, Cyberpunk 2077, Marvel’s Spider-Man Remastered, Marvel’s Spider-Man: Miles Morales, A Plague Tale: Requiem, F1 2022, and Loopmancer. I also ran 3DMark’s DLSS 3 test for good measure. These were run on a machine with an Intel Core i9-12900K processor and 32GB of DDR5 at 6000MT/s on an MSI Z690 Carbon motherboard.

Other than Enemies, which isn’t honestly meant to do anything but push the card beyond its limits, I think it’s worth noting that all of these games managed to deliver 60fps or better at 1440p without the benefit of DLSS in any way. Turning on DLSS using any profile setting provides a massive uplift to every single game on this list. In most cases framerates climb to around 150% of their rasterized level, with some games nearly doubling. Warhammer 40,000: Darktide is still very much a work in progress, but graphically it’s no slouch. Without DLSS we see around 52 fps on average, but with DLSS 3 we are at a cool 112 fps. As DLSS 3 continues to expand and improve we should see similar uplifts for our favorite games, but where we are starting is pretty impressive already.
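Because “percent improvement” and “percent of the original” are easy to mix up, here is the arithmetic for the Darktide numbers above (52fps rasterized, 112fps with DLSS 3) as a tiny Python helper; the function name is just illustrative.

```python
def uplift(before_fps: float, after_fps: float) -> tuple[float, float]:
    """Return (percent increase, speed-up multiplier) between two framerates."""
    multiplier = after_fps / before_fps
    return (multiplier - 1) * 100, multiplier


if __name__ == "__main__":
    pct, mult = uplift(52, 112)
    print(f"{pct:.0f}% increase, {mult:.2f}x the rasterized framerate")
```

By this measure Darktide’s jump is about a 115% increase, or roughly 2.15x. A true “150% improvement” would mean 2.5x the framerate, whereas reaching 150% of the original framerate is a 50% increase.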

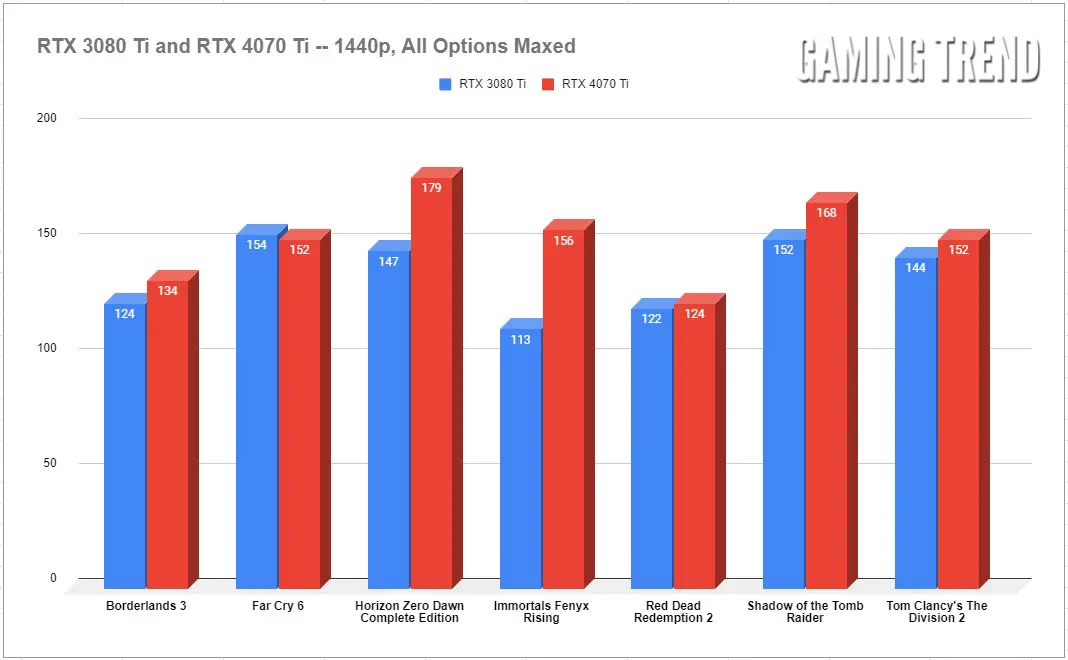

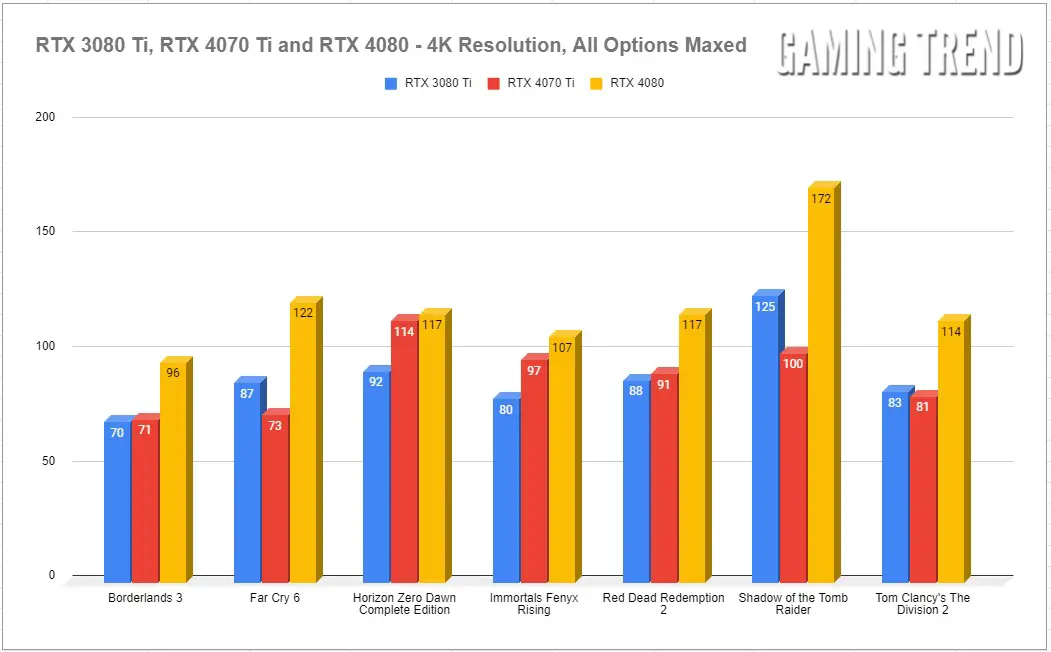

It’s not just new titles that benefit from the power of these new 4000 series cards. I also wanted to touch on a number of older titles we’ve used for benchmarks in the past to see how they fare. None of these have seen a DLSS 3 patch, so this is a solid cross-section of the past few years’ worth of games. These benchmarks were run with DLSS 2.0 (without frame generation) enabled where applicable, and on the latest drivers as of the time of writing.

Armed with the two graphs above, it’s easy to see where you might be able to push resolution a little further than 1440p. The card delivers at 4K, and it does so easily. In fact, it’s comparable to an RTX 3080 Ti. I thought I might run into issues with the amount of VRAM onboard, but other than getting a warning from a game (despite having 3GB of VRAM to spare) when running at 4K, I’ve not had a single issue. If Call of Duty: Modern Warfare 2 is your jam, I have been regularly playing it at nearly 144fps at 4K at Ultra without issue. I’m still terrible at it, but the card did just fine! Frankly, I’m not sure why NVIDIA is marketing this as a 1440p card – it performs incredibly well at 4K, just with less wiggle room than its bigger brothers.

If you bring up YouTube and look up DLSS, you’ll find entirely too many channels asking “Is DLSS worth it?” with red arrows and surprised or scrunched faces. Well, just take a second and look at these graphs again. Why in the wide world of sports would you NOT enable DLSS 3? You could say you are leaving frames on the table, but honestly it’s worse than that: if you don’t enable DLSS 3, you are leaving literally hundreds of frames behind at zero additional cost. While there may be some visual wobbles every once in a while with DLSS 3, they are thankfully few and far between.

Sound:

The RTX 4070 Ti doesn’t have a Founders Edition reference design to compare it to, so it’s left to 3rd party manufacturers to come up with their own designs. In this case, ASUS has gone with a three-fan open chassis design. There are twin heat fins piped directly to the MOSFETs and GPU with the fans laid on top to maximize airflow. ASUS has tuned the card well, and even with my case open the card runs very quietly under load. At idle it’s effectively silent. Unlike the 4080’s, these fans don’t spin down entirely, but the curve is well tuned. With a quick check of my audio meter I found the card humming along at 41dB under load, just like the RTX 4080. For reference, that’s most commonly associated with “quiet library sounds”, according to IAC Acoustics. My case is roughly 2 feet away from where I sit, so I should be hearing this card spin up, but even with the case side off, I don’t.

Drivers:

I love the boundaries between generations, and as we reach the end of this review, I’ll tell you more about why. Yes, there are lots of oohs and aahs for new and shiny gear, and we can plot out where we might see other cards land now that we have the 4090, 4080, and 4070 Ti’s numbers in hand, but when combined with driver updates, what these moments represent is the start of the improvements. When we benchmarked the 4090, DLSS 3 was brand new. In the month since, we’ve seen several major updates to the technology, and a solid jump in the number of games supporting the new tech for the RTX 4080 launch. Now two months later we have the full holiday lineup in the books, and a huge list of delayed titles popping up in Q1 to look forward to, with nearly all of the big names supporting DLSS 3.0. Similarly, DLSS 2.0 continues to grow. When we eventually get to the lower end cards, DLSS will be the difference between running them at high framerates, or even at all, so that adoption is far more important than just picking a GPU manufacturer to support.

We have a bit of history to look at to predict how this card will age. Using the RTX 3080 Ti’s scores at launch and again today we see scores that improved between 30 and 40%. At the start of the 4000 series we have the next generation of hardware, but also a groundswell of support for AI-driven improvements. Competition like XeSS and FSR 2.0 will continue to raise all boats, and we gamers reap the benefits. It’s an exciting time for the gaming world, and for the hardware industry. The GeForce RTX 4070 Ti looks like it’ll bridge the 1440p gap nicely, but based on our testing it’ll handle 4K just fine as well. With improvements in DLSS 3.0 it’ll also handle the games of tomorrow and beyond.

ASUS TUF Gaming GeForce RTX 4070 Ti

Excellent

The RTX 4070 Ti delivers fantastic performance on older and newer games, providing power exceeding the RTX 3080 Ti, and it does so with and without DLSS 3.0. Add in NVIDIA’s newest AI-driven upsampling and this thing is an absolute beast. While the price still feels slightly high, you can’t argue with the results.

Pros

- Stellar performance at 1440p, both rasterized and DLSS

- Ada Lovelace-specific features are game changers

- Power utilization is far lower than expected

- Ever-increasing number of DLSS 3.0 titles

- Previous-gen performance is rock solid

Cons

- MSRP feels just a little high